Hi

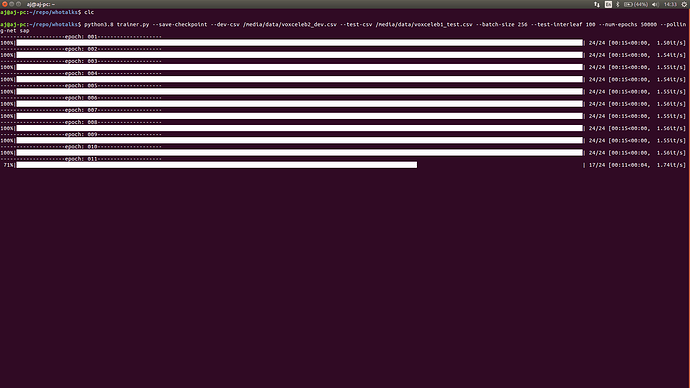

I am training a speaker recognition model based on ResNet34 (with minor modifications). The code is available on GitHub. During the first epochs, each epoch takes about 15 seconds:

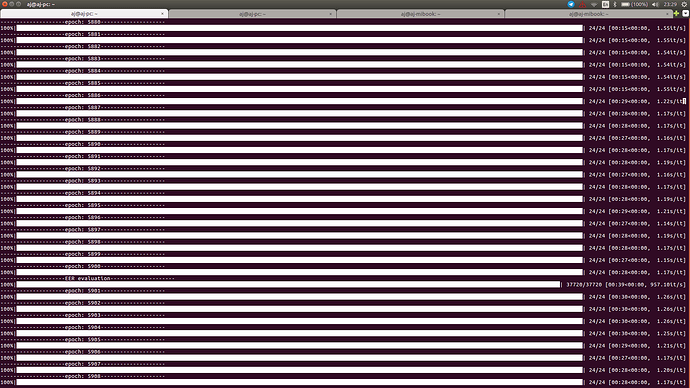

After a while, the time to complete an epoch rises to about 30 seconds:

I really can't understand what is going wrong! There is plenty of free RAM, and the GPU memory footprint does not change during this transition.

P.S. I first saw this behavior after adding a learning rate scheduler. I'm not sure whether it is related to the scheduler or not.

Another weird behavior is that killing and restarting the process (even after rebooting the system!) does not reset the epoch time to 15 seconds! The only fix I have found is to power the system off and on again.

Any help is appreciated.

regards

Could you check the temperature and clocks of your system?

Does the performance recover after you kill the process and wait for some time?

Maybe your system is overheating and thus reducing the clocks.
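One way to check this is to poll `nvidia-smi` while training runs and compare the current SM clock against its maximum. The following is a minimal sketch, assuming an NVIDIA GPU with `nvidia-smi` on the PATH. The helper names `parse_gpu_status` and `query_gpu_status` are hypothetical; the query fields used are `temperature.gpu`, `clocks.sm`, and `clocks.max.sm`.

```python
import subprocess

# Fields requested from nvidia-smi, in the order they appear in each output row.
QUERY_FIELDS = ("temperature.gpu", "clocks.sm", "clocks.max.sm")


def parse_gpu_status(csv_text):
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    gpus = []
    for line in csv_text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        if len(values) != len(QUERY_FIELDS):
            continue  # skip rows that do not match the requested fields
        row = {}
        for name, value in zip(QUERY_FIELDS, values):
            try:
                row[name] = float(value)
            except ValueError:
                row[name] = None  # e.g. "[N/A]" when a GPU does not report the field
        gpus.append(row)
    return gpus


def query_gpu_status():
    """Run nvidia-smi once and return the parsed readings (needs an NVIDIA driver)."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_gpu_status(out)
```

Calling `query_gpu_status()` once per epoch and logging the result next to the epoch time would show whether a slowdown coincides with a rise in temperature or a drop in `clocks.sm`.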

Thanks a lot, ptrblck. I removed the learning rate scheduler and the problem went away! So I have not reproduced the situation to check for overheating. I'm wondering whether I am doing something wrong with the learning rate scheduler?

For now I have replaced scheduler.step() with:

    if (epoch + 1) % 5000 == 0:
        for g in optimizer.param_groups:
            g['lr'] = g['lr'] * 0.75

and I'm waiting to see whether the problem comes back, but the tests are somewhat time-consuming…
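For reference, the manual update above applies the same step-decay rule that `StepLR` documents, lr = base_lr * gamma ** (epoch // step_size), here with gamma = 0.75 and step_size = 5000. A small sketch of that arithmetic follows; the function name `step_decay_lr` and the base rate of 1e-3 are assumptions for illustration, not values from this thread.

```python
def step_decay_lr(base_lr, gamma, step_size, epochs_completed):
    """Learning rate after `epochs_completed` epochs of step decay."""
    # One multiplication by gamma for every full block of `step_size` epochs.
    return base_lr * gamma ** (epochs_completed // step_size)


if __name__ == "__main__":
    # Hypothetical base learning rate; gamma and step_size match the manual update.
    for epochs in (0, 4999, 5000, 10000):
        print(epochs, step_decay_lr(1e-3, 0.75, 5000, epochs))
```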

Please let me know if you can deterministically reproduce the issue using the scheduler and avoid it by removing the scheduler.

Hi @ptrblck

I can confirm that using the scheduler reproduces the issue (after about 8 hours of training), and removing the scheduler avoids it. I have now been running the model without the scheduler for 48 hours and have not observed the problem.

Here are all the scheduler-related lines of my code:

    # optimizer
    optimizer = torch.optim.Adam(
        [
            {'params': model.parameters(), 'lr': args.lr},
            {'params': criterion.parameters(), 'lr': args.criterion_lr}
        ]
    )

    # lr schedule
    scheduler = torch.optim.lr_scheduler.StepLR(
        optimizer,
        step_size=args.step_size,
        gamma=args.gamma
    )

    for epoch in range(scheduler.last_epoch, args.num_epochs):
        for x, target in tqdm(dataloader):
            x = x.to(device)
            target = target.to(device)
            y = model(x)
            scores, loss = criterion(y, target)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        scheduler.step()
        lr = optimizer.state_dict()['param_groups'][0]['lr']
        log.add_scalar('train-lr', lr, epoch + 1)

Could you please tell me whether I am using the scheduler incorrectly?

regards

That’s really weird.

Is the linked code executable or would I need to get some input shapes or other arguments to run it?

train.sh provides the minimal arguments needed to run the code, after downloading the data and converting the audio formats. You could remove the data loader and feed the model an NxCxHxT tensor, where N = batch size (256), C = channels (1), H = number of filter banks (40 LMFB/MFSC), and T = time steps (200).
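A synthetic batch with that shape could be built roughly as follows. This is only a sketch: the helper name `make_dummy_batch` is hypothetical, the features are random noise rather than real filter-bank data, and the default of 5994 classes is the `num_spkr` value mentioned elsewhere in this thread.

```python
import torch


def make_dummy_batch(batch_size=256, channels=1, num_filters=40, time_steps=200,
                     num_classes=5994, device="cpu"):
    """Return random features of shape (N, C, H, T) and random class labels."""
    # Random stand-in for log mel filter-bank features.
    x = torch.randn(batch_size, channels, num_filters, time_steps, device=device)
    # Random speaker labels in [0, num_classes).
    target = torch.randint(0, num_classes, (batch_size,), device=device)
    return x, target
```

Replacing `for x, target in tqdm(dataloader)` with a fixed number of calls to `make_dummy_batch` would remove disk I/O and preprocessing from the timing.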

I also see that other repos (like the clovaai baseline for SR, or deepspeech.pytorch for ASR) avoid using schedulers. I'm not sure whether they had the same experience.

It's the first time I've seen this issue, so I'll try to run the code for some time and report back.

Please ping me in the next two days, if I haven’t posted an update.

Sure.

Thanks for your reply and patience.

Hey @ptrblck

Did you manage to reproduce the issue? I have some free time that I could spend exploring it. Would it help if I wrote a test that reproduces it?

A minimal code snippet would be great, as I have trouble getting the code to run.

After removing the data loader, I get e.g. this error:

AttributeError: 'Namespace' object has no attribute 'num_spkr'

and train.sh doesn’t seem to specify this argument.

The trunk network is trained as a classifier, and num_spkr defines the number of classes. Adding:

args.num_spkr = 5994

will resolve that error. However, running the code without downloading and preprocessing the data is not straightforward, so I'll write a snippet and let you know soon.
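A self-contained timing comparison of this kind might look like the sketch below. It is not the author's snippet: the tiny linear model stands in for the ResNet34 trunk, the data are random, and the function name `time_epochs` and all default values are assumptions. It runs the same loop with or without `StepLR` and records the wall-clock time and learning rate for each epoch.

```python
import time

import torch
from torch import nn


def time_epochs(use_scheduler, num_epochs=4, steps_per_epoch=5,
                step_size=2, gamma=0.75, lr=1e-3):
    """Train a toy model and return a list of (epoch_seconds, lr) tuples."""
    torch.manual_seed(0)
    # Toy stand-in for the real network: flatten (N, 1, 40, 200) and classify.
    model = nn.Sequential(nn.Flatten(), nn.Linear(40 * 200, 16))
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    scheduler = None
    if use_scheduler:
        scheduler = torch.optim.lr_scheduler.StepLR(
            optimizer, step_size=step_size, gamma=gamma)

    records = []
    for _ in range(num_epochs):
        start = time.perf_counter()
        for _ in range(steps_per_epoch):
            x = torch.randn(8, 1, 40, 200)      # random features
            target = torch.randint(0, 16, (8,))  # random labels
            loss = criterion(model(x), target)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        if scheduler is not None:
            scheduler.step()  # once per epoch, after the optimizer steps
        records.append((time.perf_counter() - start,
                        optimizer.param_groups[0]["lr"]))
    return records


if __name__ == "__main__":
    for flag in (True, False):
        times = [t for t, _ in time_epochs(use_scheduler=flag)]
        print(f"scheduler={flag}: mean epoch time {sum(times) / len(times):.4f}s")
```

If the scheduler itself were the cause, the two runs should diverge in epoch time on any machine; similar timings would point toward the environment instead.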

The issue is unrelated to the PyTorch scheduler. A hardware malfunction happened to coincide with my adding the scheduler, and that misled me.

In fact, the issue was caused by an underpowered PSU!

Thanks for the follow-up! That’s an unfortunate coincidence, but at least you’ve narrowed it down.

Hello Mohammad,

I think I have the same issue, and it seems you resolved it. Could you tell me what the cause was?

Also, what do you mean by an underpowered PSU? How can we recognize that, since the system seems to be working fine?

Thanks