Hi,

Does training time increase linearly with the number of samples in the training set?

In the first training, I have 400 samples.

In the second training, I have 800 samples.

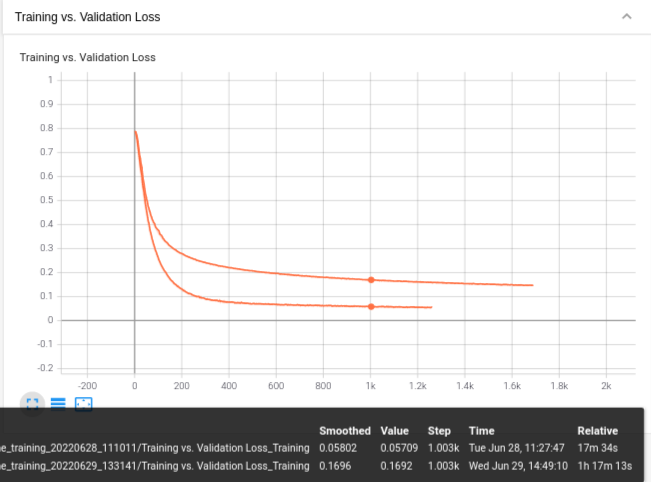

I am printing the loss for each epoch. However, when I double the dataset, the training time becomes about 4 times longer. I am confused. Shouldn't I expect the time to double as well? All other parameters, e.g., the batch size, are the same.
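To make my expectation concrete, here is a rough sketch of the arithmetic I have in mind. The batch size of 32 and the helper name `steps_per_epoch` are made up for illustration and are not my actual settings. It also assumes the last partial batch is kept:

```python
import math


def steps_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of batches in one epoch, keeping the last partial batch."""
    return math.ceil(num_samples / batch_size)


batch_size = 32  # assumed value, for illustration only

small = steps_per_epoch(400, batch_size)  # first training: 400 samples
large = steps_per_epoch(800, batch_size)  # second training: 800 samples

# If the cost per batch is constant and the number of epochs is the same,
# the time ratio should be close to the ratio of steps per epoch.
print(f"steps per epoch: {small} vs {large}, ratio = {large / small:.2f}")
```

With a fixed batch size and the same number of epochs, this ratio is roughly 2, which is why the 4x slowdown surprises me.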

Thank you