I was wondering if anyone has tried training popular models (e.g., ResNet variants) on popular datasets (ImageNet, CIFAR-10/100) with half precision?

It works, but make sure that the BatchNormalization layers use float32 for accumulation, or you will have convergence issues. You can do that with something like:

    import torch.nn as nn

    model.half()  # convert to half precision
    for layer in model.modules():
        if isinstance(layer, nn.BatchNorm2d):
            layer.float()  # keep batch norm parameters and statistics in float32

Then make sure your input is in half precision.
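To check that the conversion did what was intended, one can inspect the dtypes afterwards. This is only a minimal sketch: the small Sequential model is a made-up stand-in for a real network, and the code only inspects dtypes (no forward pass), so it also runs on CPU.

```python
import torch
import torch.nn as nn

# Hypothetical toy model standing in for a real network such as a ResNet.
model = nn.Sequential(nn.Conv2d(3, 8, kernel_size=3), nn.BatchNorm2d(8), nn.ReLU())

model.half()  # convert every floating-point parameter and buffer to float16
for layer in model.modules():
    if isinstance(layer, nn.BatchNorm2d):
        layer.float()  # move batch norm back to float32

# Collect the dtype of each parameter to confirm the split.
dtypes = {name: p.dtype for name, p in model.named_parameters()}
running_mean_dtype = model[1].running_mean.dtype

for name, dtype in dtypes.items():
    print(name, dtype)
```

The convolution parameters should report torch.float16, while the batch norm weight, bias, and running statistics should report torch.float32.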

Christian Sarofeen from NVIDIA ported the ImageNet training example to use FP16 here:

We’d like to clean up the FP16 support to make it more accessible, but the above should be enough to get you started.

This is great! Is there documentation on when/where half precision can be used? For example, it doesn’t seem like half precision computation is supported on CPU, but I only discovered this by giving it a shot.

@colesbury, could you suggest the right way to feed fp16 inputs to a BatchNorm layer with fp32 parameters? I think this modification is now mentioned in the NVIDIA documentation as a special batch normalization layer, but I couldn’t find any implementation example.

Hi @colesbury,

I converted my batch-norm layers back to floats with this code, which works:

    def batchnorm_to_fp32(module):
        if isinstance(module, nn.modules.batchnorm._BatchNorm):
            module.float()
        for child in module.children():
            batchnorm_to_fp32(child)
        return module

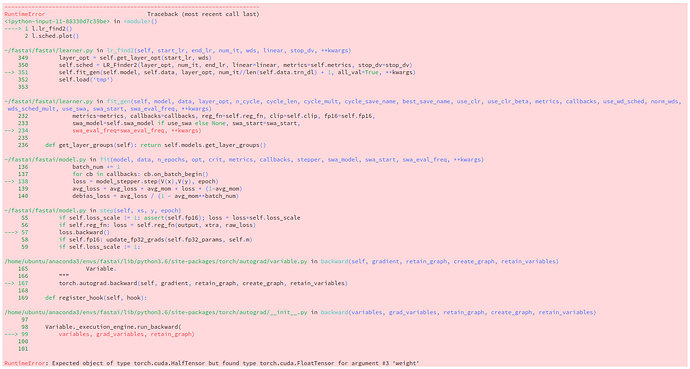

But autograd doesn’t like me using a mixture of float and half tensors: running loss.backward() leads to an error like this:

Do you think you can help me?

Thank you if yes!
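One way to feed half-precision activations through a float32 batch norm is to cast explicitly around the layer. The sketch below assumes that approach; the class name FP32BatchNorm2d is hypothetical (not a PyTorch or NVIDIA API), and since the error message itself is not shown in the thread, it is not certain that this addresses that exact failure.

```python
import torch
import torch.nn as nn

class FP32BatchNorm2d(nn.BatchNorm2d):
    """Hypothetical wrapper: normalizes in float32, returns the input's dtype."""

    def forward(self, x):
        # Upcast the activations, run the usual batch norm, then cast back.
        return super().forward(x.float()).to(x.dtype)

bn = FP32BatchNorm2d(3)  # parameters and running stats stay float32
x = torch.randn(4, 3, 8, 8).half().requires_grad_()

y = bn(x)                  # half in, half out
y.float().sum().backward() # autograd follows the casts back to the half input

print(y.dtype, bn.weight.dtype, x.grad.dtype)
```

The casts are ordinary differentiable operations, so the gradient reaching x is half precision while the batch norm parameters receive float32 gradients.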

Is it the same situation with weight norm? Should it use float32 as well?

What speed gain do you achieve using FP16 instead of FP32 in PyTorch (on ResNet or similar)?

It really depends. On a Titan X or P100, you get about a 15% speedup for all the architectures I’ve tried. On a Titan V or V100, I get about a 50% speedup for resnet50 and a 2x speedup on Xception, probably because of the way tensor cores work. The 2x speedup actually makes the Titan V worth it if you are going to be training a lot of networks that use grouped convolution. You also get to use double the batch size, because the smaller floats take up half as much VRAM.

I should say that the speed-up isn’t painless. I’ve had issues with fp16 overflow at times. Usually these are fixable, but you only find out about them after investing a significant amount of time training.
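To make the overflow concrete, here is a small sketch using only the Python standard library. The helper to_fp16 is a made-up name; it rounds a Python float to half precision through the struct module's "e" format and maps out-of-range values to infinity, which is what IEEE half-precision arithmetic would produce.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        # struct refuses out-of-range values; fp16 arithmetic would give +/-inf.
        return float("inf") if x > 0 else float("-inf")

largest = to_fp16(65504.0)    # the largest finite fp16 value
activation = to_fp16(300.0)   # a moderately large activation, exactly representable
squared = to_fp16(activation * activation)  # 90000 exceeds the fp16 range

print(largest, activation, squared)
```

A value of a few hundred is unremarkable in float32, but squaring it (as in a variance or an L2 loss) already leaves the fp16 range.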

I also worry about the added complexity leading me to make wrong conclusions about my experimental outcomes. (i.e. could this issue be an fp16 issue? Or thinking one model works better but really the other model was faulty.)

You also need to be sure to maintain a full 32 bit copy of your parameters. This helps stability substantially.
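A rough illustration of why the full-precision copy helps, again with a hypothetical to_fp16 helper built on the standard library. Python floats are 64-bit and stand in for the FP32 master copy here; the update size of 1e-4 is an arbitrary assumption chosen to fall below fp16 resolution near 1.0.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return float("inf") if x > 0 else float("-inf")

update = 1e-4          # assumed per-step change (learning rate times gradient)
w16 = to_fp16(1.0)     # weight stored and updated purely in fp16
master = 1.0           # higher-precision master copy of the same weight

for _ in range(100):
    # fp16 values near 1.0 are spaced about 0.001 apart, so this rounds back to 1.0.
    w16 = to_fp16(w16 + update)
    # The master copy accumulates the small updates.
    master += update

w16_from_master = to_fp16(master)  # fp16 weights re-derived from the master copy

print(w16, master, w16_from_master)
```

The pure fp16 weight never moves, while the weight derived from the master copy reflects the accumulated updates.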

We’ve developed a lightweight, open-source set of PyTorch tools to enable easier, more numerically stable mixed precision training: https://github.com/nvidia/apex. Mixed precision means that the majority of the network uses FP16 arithmetic (reducing memory storage/bandwidth demands and enabling Tensor Cores for GEMMs and convolutions), while a small subset of operations are executed in FP32 for improved stability.

Highlights include:

- Amp, a tool that executes all numerically safe Torch functions in FP16, while automatically casting potentially unstable operations to FP32. Amp also automatically implements dynamic loss scaling. Amp is designed to offer maximum numerical stability, and most of the speed benefits of pure FP16 training.

- FP16_Optimizer, an optimizer wrapper that automatically implements FP32 master weights for parameter updates, as well as static or dynamic loss scaling. FP16_Optimizer is designed to be minimally invasive (it doesn’t change the execution of Torch operations) and offer almost all the speed of pure FP16 training with significantly improved numerical stability.

- apex.parallel.DistributedDataParallel, a distributed module wrapper that achieves high performance by overlapping computation with communication during backward(). Apex DistributedDataParallel is useful for both pure FP32 as well as mixed precision training.
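The loss scaling mentioned in the list above can be illustrated with plain arithmetic. This is not Apex's implementation, only a sketch of the idea: to_fp16 and scaled_fp16_grad are hypothetical helpers, the gradient values are invented, and halving the scale on overflow is one simple assumed policy for the "dynamic" part.

```python
import math
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return float("inf") if x > 0 else float("-inf")

def scaled_fp16_grad(grad: float, scale: float):
    """Return the fp16 value of grad * scale and whether it overflowed."""
    g = to_fp16(grad * scale)
    return g, math.isinf(g)

# Static scaling: a tiny gradient underflows to zero in fp16 unless it is scaled.
tiny_grad = 1e-8
unscaled = to_fp16(tiny_grad)          # flushed to 0.0
scale = 2.0 ** 16
g16, overflow = scaled_fp16_grad(tiny_grad, scale)
recovered = g16 / scale                # unscale in higher precision

# Dynamic scaling: if a scaled gradient overflows, reduce the scale and retry.
large_grad = 10.0
dyn_scale = 2.0 ** 16
g_large, overflowed = scaled_fp16_grad(large_grad, dyn_scale)
while overflowed:
    dyn_scale /= 2.0
    g_large, overflowed = scaled_fp16_grad(large_grad, dyn_scale)

print(unscaled, recovered, dyn_scale)
```

In a real training loop the scale multiplies the loss before backward(), so every gradient is scaled at once, and the step is skipped when an overflow is detected.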

Full API documentation can be found here.

Our examples page demonstrates the use of FP16_Optimizer and Apex DistributedDataParallel. Amp examples are coming soon, and Amp’s use is thoroughly discussed in its README.

Give Apex a try and let us know what you think!

Sorry for the double post; the forum page told me “new users may only post 2 links at a time” or something along those lines.

The link to csarofeen/examples does not work any more. You can find an example here: Fp16 on pytorch 0.4

Hi, thanks for your explanation.

May I ask why BN must use float32? Does that mean BN is different from other layers, like conv, linear, etc.?

I’d say the easiest way to use 16-bit without making a mistake is PyTorch Lightning with Trainer(use_amp=True). This will train your model using 16-bit precision.

https://pytorch-lightning.readthedocs.io/en/0.6.0/trainer.html

Thanks @mcarilli. Apex was very useful to us in our project.

Any suggestions on using float16 with transformers? Should I keep some layers in float32, just as it is recommended to keep batch normalization in float32?

I would generally recommend using the automatic mixed precision package (via torch.cuda.amp), which casts the inputs to the appropriate dtype for each method.

Okay, thanks. Should we keep val_step under the autocast scope as well, for a fair comparison between tr_loss and val_loss?

Yes, you can also use autocasting during the validation.

Especially if you plan on using it for the test dataset (or deployment), I would use it.
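A minimal sketch of a training and validation step that both run under autocast, assuming the torch.cuda.amp API mentioned above. The tiny model, the random data, and the train_step/val_step names are invented for illustration; on a machine without CUDA the enabled flags turn autocast and the scaler into no-ops, so the code still runs in float32.

```python
import torch
import torch.nn as nn

use_cuda = torch.cuda.is_available()
device = torch.device("cuda" if use_cuda else "cpu")

torch.manual_seed(0)
# Hypothetical toy classifier and batch, standing in for a real model and data loader.
model = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 4)).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
criterion = nn.CrossEntropyLoss()
scaler = torch.cuda.amp.GradScaler(enabled=use_cuda)  # handles loss scaling

def train_step(x, y):
    optimizer.zero_grad()
    with torch.cuda.amp.autocast(enabled=use_cuda):
        loss = criterion(model(x), y)   # forward pass with per-op dtype selection
    scaler.scale(loss).backward()       # backward on the scaled loss
    scaler.step(optimizer)              # unscales gradients, skips the step on inf/NaN
    scaler.update()
    return loss.item()

@torch.no_grad()
def val_step(x, y):
    # Same autocast scope as training, so the two losses are computed the same way.
    with torch.cuda.amp.autocast(enabled=use_cuda):
        return criterion(model(x), y).item()

x = torch.randn(8, 16, device=device)
y = torch.randint(0, 4, (8,), device=device)
train_loss = train_step(x, y)
val_loss = val_step(x, y)
print(train_loss, val_loss)
```

No gradient scaler is needed in val_step, since there is no backward pass to protect from underflow.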

I used the torch.cuda.amp tools to train a U-Net-like network, but my loss function gave NaN. I guess this is an overflow problem caused by fp16. Can you give me some advice on how to overcome this? Thank you so much!