Hello. I have been doing transfer learning by following a YouTube tutorial, but now that I look at other documentation and examples, one thing is really confusing me. I used the EfficientNet v1 B0 model, loaded with the defaults as:

```python
model = timm.create_model(CFG.model_name, pretrained=True)
```

When I print `model`, the last few layers are:

```
(conv_head): Conv2d(320, 1280, kernel_size=(1, 1), stride=(1, 1), bias=False)
(bn2): BatchNormAct2d(
  1280, eps=0.001, momentum=0.1, affine=True, track_running_stats=True
  (drop): Identity()
  (act): SiLU(inplace=True)
)
(global_pool): SelectAdaptivePool2d (pool_type=avg, flatten=Flatten(start_dim=1, end_dim=-1))
(classifier): Linear(in_features=1280, out_features=1000, bias=True)
)
```

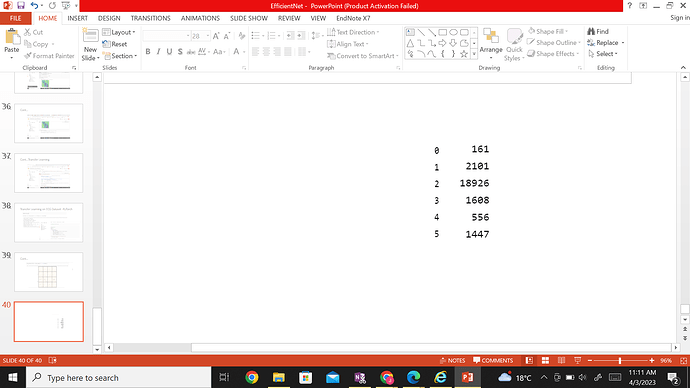

Now I have a 6-class problem, and I want to change the last layer's `out_features` from 1000 to 6.

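I did this by:

```python
import timm
import torch
import torch.nn as nn

model = timm.create_model(CFG.model_name, pretrained=True)

# Adapt the stem to 1-channel (grayscale) input, keeping the pretrained filters
# by summing the RGB weights over the input-channel dimension.
# (Assigning into model.state_dict()[...] has no effect: state_dict() returns a new dict.)
print(model.conv_stem)
old_stem = model.conv_stem
model.conv_stem = nn.Conv2d(1, old_stem.out_channels, kernel_size=old_stem.kernel_size,
                            stride=old_stem.stride, padding=old_stem.padding, bias=False)
with torch.no_grad():
    model.conv_stem.weight.copy_(old_stem.weight.sum(dim=1, keepdim=True))
print(model.conv_stem)

# Freeze the pretrained model
for param in model.parameters():
    param.requires_grad = False

# Originally it was:
# (classifier): Linear(in_features=1280, out_features=1000, bias=True)
model.classifier = nn.Linear(1280, 6)  # new layer, requires_grad=True by default
```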

That is what I learned, but the YouTube tutorial does this instead:

```python
model.classifier = nn.Sequential(
    nn.Linear(in_features=1280, out_features=625),  # tutorial had 1792 (EfficientNet-B4's width); B0 outputs 1280
    nn.ReLU(),  # ReLU as the activation function
    nn.Dropout(p=0.3),
    nn.Linear(in_features=625, out_features=256),
    nn.ReLU(),
    nn.Linear(in_features=256, out_features=6),
)
```
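`nn.Sequential` chains modules so that each one's output becomes the next one's input, and the whole chain then behaves as a single layer. The sketch below pushes a random stand-in batch of pooled features through the tutorial's head. It shows the shapes at the output, and it shows why the first `Linear` must take the width the backbone produces (1280 for B0).

```python
import torch
import torch.nn as nn

# Same layers as the tutorial's head, with in_features=1280 for EfficientNet-B0
head = nn.Sequential(
    nn.Linear(1280, 625),
    nn.ReLU(),
    nn.Dropout(p=0.3),
    nn.Linear(625, 256),
    nn.ReLU(),
    nn.Linear(256, 6),
)
head.eval()  # disable dropout so the two forward passes below are comparable

features = torch.randn(4, 1280)  # random stand-in for 4 pooled B0 feature vectors
out = head(features)
print(out.shape)  # torch.Size([4, 6])

# nn.Sequential is equivalent to calling each module in order
x = features
for layer in head:
    x = layer(x)
print(torch.allclose(x, out))  # True

# A first layer sized for another backbone (1792 = EfficientNet-B4) fails on B0 features
mismatch_raised = False
try:
    nn.Linear(1792, 625)(features)
except RuntimeError as err:
    mismatch_raised = True
    print("shape mismatch:", err)
```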

What is a feature extractor, and what is the significance of the `nn.Sequential` part? And how does one calculate or decide on `out_features=625`?

Which method is correct, and what are the pros and cons of each?
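No formula produces 625 and 256. They are hidden-layer widths, that is, hyperparameters you pick and then compare on validation data. The sketch below uses a hypothetical helper, `make_head`, to build either head, and it compares their parameter counts. Note that this helper puts dropout after every hidden layer, while the tutorial used it only after the first.

```python
import torch
import torch.nn as nn

def make_head(in_features, num_classes, hidden=(), p_drop=0.0):
    """Hypothetical helper: Linear layers with ReLU (and optional dropout) between them."""
    layers = []
    width = in_features
    for h in hidden:
        layers += [nn.Linear(width, h), nn.ReLU()]
        if p_drop > 0:
            layers.append(nn.Dropout(p_drop))
        width = h
    layers.append(nn.Linear(width, num_classes))
    return nn.Sequential(*layers)

def n_params(module):
    return sum(p.numel() for p in module.parameters())

linear_head = make_head(1280, 6)                                 # the single-Linear approach
mlp_head = make_head(1280, 6, hidden=(625, 256), p_drop=0.3)    # the tutorial's widths
print(n_params(linear_head))  # 7686
print(n_params(mlp_head))     # 962423
print(mlp_head(torch.randn(3, 1280)).shape)  # torch.Size([3, 6])
```

With a frozen backbone, the single `Linear` trains about 7.7k parameters. It is fast and tends to be harder to overfit on small datasets. The MLP head trains roughly 960k parameters and can learn non-linear combinations of the features, which may help when there is enough data. Neither is "correct" in general, so it is worth comparing both on a validation set.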