I’m trying to do transfer learning on a pre-trained YOLOv3 implementation (ultralytics/yolov3 on GitHub, as of now) so that instead of detecting the classic 80 coco classes it detects just 2 classes ‘related’ to ‘person’ (e.g., glasses / no glasses, hat / no hat). However, I can’t get the network to even properly overfit to my small dataset, so I’m clearly doing something wrong, and it would be great if someone could point me in the right direction.

From my understanding of vanilla yolov3, there’s the darknet53 feature extractor, followed by the ‘yolo’ part of the network, of which layers 81, 93 and 105 (0-indexed) do the 1x1 convolutions that ultimately regress the boxes and assign class probabilities.
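To make the shapes concrete, here is a small sketch of what each of those three 1x1 convs should output. It assumes the standard YOLOv3 strides of 32, 16 and 8 and 3 anchors per scale; `head_shapes` is just an illustrative helper of mine, not something from the repo.

```python
def head_shapes(img_size, num_classes, anchors_per_scale=3, strides=(32, 16, 8)):
    """Return (channels, grid_h, grid_w) for each of the three detection heads."""
    # Each anchor predicts 4 box coordinates + 1 objectness score + one score per class.
    channels = anchors_per_scale * (5 + num_classes)
    return [(channels, img_size // s, img_size // s) for s in strides]


coco_heads = head_shapes(320, 80)  # original 80-class heads
my_heads = head_shapes(320, 2)     # heads after switching to 2 classes
print(coco_heads)
print(my_heads)
```

So going from 80 to 2 classes should only change the channel count of those three convs (255 to 21), not the grid sizes.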

So I was expecting that I could:

1. Annotate a small dataset with examples of the 2 classes I’m interested in. I did this with makesense.ai and currently have 200 example images with one or more instances of either class per image. I used 70% for training and 30% for validation.

2. Modify conv layers 81, 93 and 105, along with their respective ‘yolo’ layers (in the cfg file), to have the appropriate number of filters and classes: (5+2)*3 = 21 filters for each conv; 2 classes for each ‘yolo’.

3. Set requires_grad = False on all parameters of the net other than the weights and biases of the conv layers mentioned in 2.

4. Weight initialization: I tried the following initialization schemes:

   a. the darknet53.conv.74 file (i.e., only initializing the feature extractor trained on ImageNet)

   b. initializing all weights from a pre-trained yolov3 (i.e., the whole network trained on coco)

   c. same as b, but then, for the layers in 2, I set all biases to 0 and all weights to Xavier uniform.

5. Finally, I trained with the settings most repos implementing yolo use: 300 epochs, batch size 16, image size 320, SGD with momentum and learning-rate decay, etc.
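The cfg change in the steps above can be sketched as a small text patch. This is only a sketch under assumptions: it assumes the Darknet-style cfg layout in which each [yolo] section is directly preceded by its 1x1 [convolutional] section, and 3 anchors per scale. `patch_yolo_cfg` and `SAMPLE_CFG` are hypothetical names used for illustration, not part of any repo.

```python
def patch_yolo_cfg(cfg_text, num_classes, anchors_per_scale=3):
    """Set classes in every [yolo] section and filters in the conv right before it."""
    lines = cfg_text.splitlines()
    filters = anchors_per_scale * (5 + num_classes)
    section = None
    last_conv_filters = None  # line index of `filters=` in the most recent [convolutional]
    for i, line in enumerate(lines):
        stripped = line.strip()
        if stripped.startswith("["):
            section = stripped
            continue
        key = stripped.split("=")[0].strip()
        if section == "[convolutional]" and key == "filters":
            last_conv_filters = i
        elif section == "[yolo]" and key == "classes":
            lines[i] = f"classes={num_classes}"
            if last_conv_filters is not None:
                lines[last_conv_filters] = f"filters={filters}"
    return "\n".join(lines)


# Shortened, made-up excerpt in the style of a yolov3 cfg (one head only).
SAMPLE_CFG = """[convolutional]
batch_normalize=1
size=3
filters=1024
activation=leaky

[convolutional]
size=1
filters=255
activation=linear

[yolo]
mask = 6,7,8
classes=80
num=9
"""

patched = patch_yolo_cfg(SAMPLE_CFG, num_classes=2)
print(patched)
```

Only the conv feeding each ‘yolo’ layer is touched; the 3x3 conv before it keeps its 1024 filters.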

I was hoping to overfit to my little dataset, but the trained model doesn’t detect a single instance of my classes. Not even in the same images I trained on. So yeah, I’m doing something wrong. Maybe I need to do something about the 9 bounding box priors too? Any hints?
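In case it helps pin down the problem, the freezing and re-initialization steps above amount to roughly the following. This is a minimal sketch on a tiny stand-in model, not the actual repo code: `freeze_all_but_heads` and `head_indices` are hypothetical names, and I’m assuming the network is exposed as an indexable list of blocks that contain `nn.Conv2d` modules.

```python
import torch.nn as nn


def freeze_all_but_heads(module_list, head_indices):
    """Freeze every parameter, then re-initialise and unfreeze the head convs."""
    for p in module_list.parameters():
        p.requires_grad = False
    for i in head_indices:
        for m in module_list[i].modules():
            if isinstance(m, nn.Conv2d):
                nn.init.xavier_uniform_(m.weight)  # weights: Xavier uniform
                if m.bias is not None:
                    nn.init.zeros_(m.bias)         # biases: all zeros
                for p in m.parameters():
                    p.requires_grad = True


# Stand-in for the real network: block 1 plays the role of a 1x1 head conv
# (in the real model the head indices would be 81, 93 and 105).
layers = nn.ModuleList([
    nn.Sequential(nn.Conv2d(3, 8, 3, padding=1), nn.BatchNorm2d(8), nn.LeakyReLU(0.1)),
    nn.Sequential(nn.Conv2d(8, 21, 1)),
])
freeze_all_but_heads(layers, head_indices=[1])

# Sanity check: only the head conv's weight and bias should be trainable.
trainable = [name for name, p in layers.named_parameters() if p.requires_grad]
print(trainable)
```

Printing the trainable parameter names like this is a quick way to confirm that the freeze left exactly the three head convs trainable.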

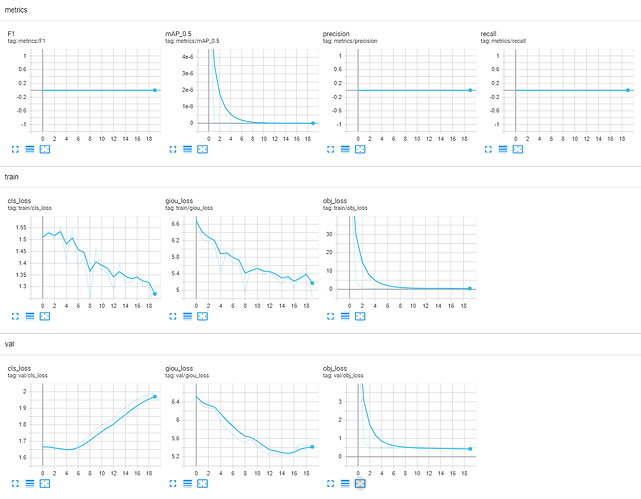

Here are the training stats for initialization scheme a (only the darknet53.conv.74 weights), which also look a bit off: