Hello,

is it possible to make the parameters of the layer before the fully-connected layer trainable, as in case 2 in the table?

thanks

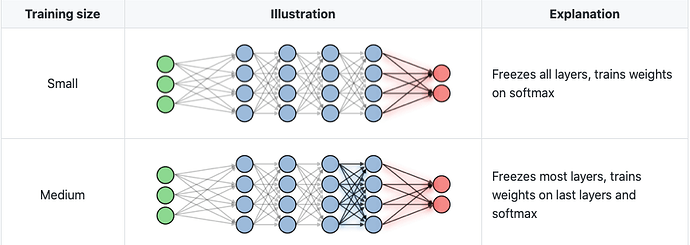

Yes, you can freeze specific layers and train others by setting the `.requires_grad` attribute of each parameter (setting it to `False` freezes the parameter, so no gradient is computed for it). The Finetuning tutorial gives an example.
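As a rough sketch of the idea (not the exact setup from the table, which I can only guess at): the toy model below is hypothetical, and the names `SmallNet`, `conv1`, `conv2`, and `fc` are made up for illustration. It assumes you want to train the layer right before the fully-connected layer together with the fully-connected layer itself, and keep everything else frozen.

```python
import torch
import torch.nn as nn


# Hypothetical toy model; layer names and sizes are illustrative only.
class SmallNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(8, 16, kernel_size=3, padding=1)
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(16, 10)

    def forward(self, x):
        x = torch.relu(self.conv1(x))
        x = torch.relu(self.conv2(x))
        x = self.pool(x).flatten(1)
        return self.fc(x)


model = SmallNet()

# Freeze every parameter first.
for param in model.parameters():
    param.requires_grad = False

# Assumption: unfreeze the layer before the fully-connected layer (conv2)
# and the fully-connected layer (fc). Adjust this to match your case.
for layer in (model.conv2, model.fc):
    for param in layer.parameters():
        param.requires_grad = True

# Hand only the trainable parameters to the optimizer.
optimizer = torch.optim.SGD(
    [p for p in model.parameters() if p.requires_grad], lr=0.01
)

# One training step on random data to show that only the unfrozen
# layers receive gradients.
x = torch.randn(4, 3, 8, 8)
target = torch.randint(0, 10, (4,))
loss = nn.functional.cross_entropy(model(x), target)
optimizer.zero_grad()
loss.backward()
optimizer.step()

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)
```

After `loss.backward()`, the frozen `conv1` parameters have no `.grad`, while `conv2` and `fc` do. Filtering the optimizer's parameter list is optional here, since frozen parameters never get a gradient, but it makes the intent explicit.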