I have built a simple transformer classifier consisting of multiple TransformerEncoderLayers.
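
For context, a model of this kind can be sketched roughly as follows. This is only an illustrative sketch assuming PyTorch's `nn.TransformerEncoderLayer`. The class name `TransformerClassifier`, the default sizes, the number of classes, the mean pooling, and the omission of positional encodings and padding masks are all assumptions made for the example, not the exact code behind the plots.

```python
import torch
import torch.nn as nn


class TransformerClassifier(nn.Module):
    """Hypothetical minimal classifier: embedding -> encoder stack -> linear head."""

    def __init__(self, vocab_size=100_000, d_model=128, nhead=4,
                 dim_feedforward=256, num_layers=2, num_classes=2, dropout=0.2):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, d_model)
        # Positional encodings are left out to keep the sketch short.
        layer = nn.TransformerEncoderLayer(
            d_model=d_model,
            nhead=nhead,
            dim_feedforward=dim_feedforward,
            dropout=dropout,
            batch_first=True,  # inputs are (batch, sequence, feature)
        )
        self.encoder = nn.TransformerEncoder(layer, num_layers=num_layers)
        self.head = nn.Linear(d_model, num_classes)

    def forward(self, tokens):
        # tokens: (batch, seq_len) integer ids, assumed unpadded and equal length
        x = self.encoder(self.embed(tokens))
        pooled = x.mean(dim=1)  # average over the sequence dimension
        return self.head(pooled)  # (batch, num_classes) unnormalized logits


if __name__ == "__main__":
    model = TransformerClassifier()
    batch = torch.randint(0, 100_000, (8, 16))  # 8 random sequences of 16 tokens
    print(model(batch).shape)
```

The logits would typically be passed to `nn.CrossEntropyLoss`, which applies the softmax internally.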

I have tried a variety of different values for hyperparameters (model dimension, number of heads, hidden size, number of layers, initial learning rate and batch size). I have also tried different dropout probabilities (0.2, 0.5 and 0.75). My vocabulary size is 100,000.
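
To make the search concrete, the combinations can be enumerated as in the sketch below. Only the three dropout probabilities come from the description above. Every other value, as well as the names `search_space` and `iter_configs`, is a hypothetical placeholder chosen for illustration.

```python
from itertools import product

# Only the dropout values are taken from the post; all other
# entries are hypothetical placeholders for illustration.
search_space = {
    "d_model": [128, 256],
    "nhead": [4, 8],
    "dim_feedforward": [256, 512],
    "num_layers": [2, 4],
    "lr": [1e-3, 1e-4],
    "batch_size": [32, 64],
    "dropout": [0.2, 0.5, 0.75],
}


def iter_configs(space):
    """Yield one dict per hyperparameter combination in the grid."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        config = dict(zip(keys, values))
        # Multi-head attention needs d_model to be divisible by nhead,
        # so combinations that violate this are skipped.
        if config["d_model"] % config["nhead"] == 0:
            yield config


configs = list(iter_configs(search_space))
print(len(configs), "configurations")
```

Each resulting dictionary would then parameterize one training run.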

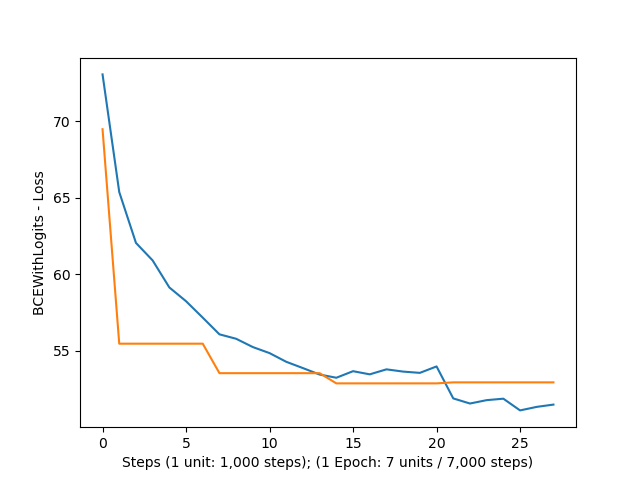

All different combinations have lead to a following loss pattern:

blue graph: training loss

orange graph: validation loss

Of course, the curves differ by a tiny amount between runs, but the overall result has always been the same.

I have also tried another dataset to make sure the data is not the problem, but the results were even worse.

The training loss stays at about the same value from unit 15 until the next epoch starts at unit 22, where it suddenly decreases by about 0.02 and then stays flat again for the entire epoch.

The validation loss does not change significantly from unit 15 on.

It looks very much like overfitting, but it seems quite strange to me that the model starts overfitting that early and with such a bad loss.

I would appreciate any hints or help if somebody has already experienced something like this.

Regards,

Unity05