Hi everyone,

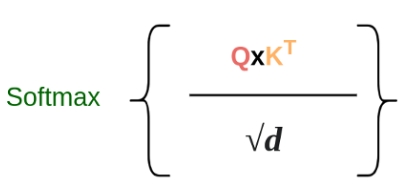

I am experimenting with Transformers. After training, I would like to visualize the contribution matrix (i.e. the attention weights) for a given input. If I understood correctly, the contribution matrix is square and indicates how much each element of the sequence contributes to every other element (I would expect the diagonal values to be high). This matrix is computed as in the picture above, where Q and K are the query and key matrices, respectively, and d is the number of features of each element.
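To make sure I am describing the same thing, here is a minimal sketch of the computation as I understand it. It assumes the picture shows the standard scaled dot-product attention, softmax(QK^T / sqrt(d)); the sizes and the random Q and K are placeholders I made up for illustration, not values from my model.

```python
import math
import torch

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
seq_len, d = 4, 8

# Random stand-ins for the query and key matrices (one row per sequence element).
Q = torch.randn(seq_len, d)
K = torch.randn(seq_len, d)

# Scaled dot-product scores: one row per query element, one column per key element.
scores = Q @ K.T / math.sqrt(d)

# Softmax over the key dimension, so each row sums to 1.
attn = torch.softmax(scores, dim=-1)

print(attn.shape)  # square matrix: (seq_len, seq_len)
```

Row i of attn would then be the weights that element i puts on every element of the sequence.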

Is there a way to easily get the result of this operation in order to visualize this contribution matrix?

I think these contribution matrices can be obtained from the TransformerEncoderLayer. So, in the function at line 350 of pytorch/torch/nn/modules/transformer.py (main branch of pytorch/pytorch on GitHub), I call self.self_attn with the parameters need_weights=True and average_attn_weights=False, and then save the returned output_weights as tensors. Is this the right way to do it?
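For reference, this is roughly what I am doing, written as a standalone sketch rather than as a change to the library file. The sizes and the random input are hypothetical, and it assumes the default norm_first=False, so that the self-attention block receives the layer input unchanged.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
batch, seq_len, d_model, n_heads = 2, 5, 16, 4

layer = nn.TransformerEncoderLayer(d_model=d_model, nhead=n_heads, batch_first=True)
layer.eval()  # turn off dropout so the weights are deterministic

# Random stand-in for an embedded input sequence.
src = torch.randn(batch, seq_len, d_model)

with torch.no_grad():
    # Call the layer's MultiheadAttention module directly.
    # With norm_first=True the input would have to go through layer.norm1 first.
    _, attn_weights = layer.self_attn(
        src, src, src,
        need_weights=True,           # return the attention weights
        average_attn_weights=False,  # keep one matrix per head
    )

# One (seq_len x seq_len) matrix per head and per batch element.
print(attn_weights.shape)
```

With average_attn_weights=False I get a tensor of shape (batch, heads, seq_len, seq_len), so attn_weights[0, h] would be the matrix I want to plot for head h of the first sequence.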

Thanks for the help