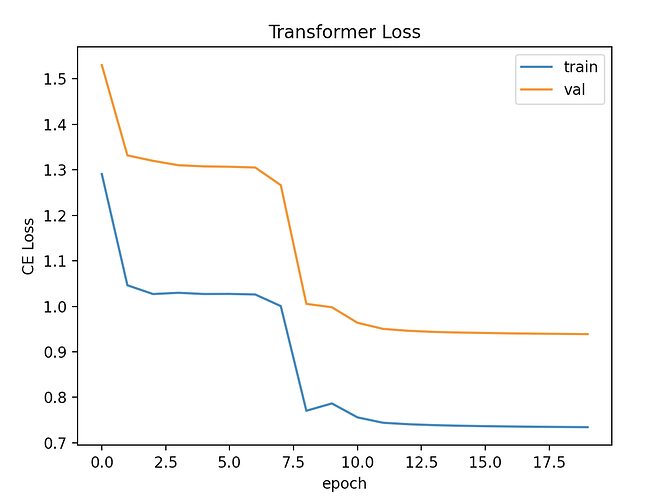

I am training a transformer model I wrote from scratch for machine translation, and I'm debugging it on a very small dataset (1,000 sentences for training, 200 for dev). Over 20 epochs with a batch size of 64, the training and dev losses follow exactly the same pattern, which seems strange. Does anyone know what's going on here? My training loop is below.

```python
import torch

# lower-triangular (causal) mask for the decoder self-attention
mask = torch.tril(torch.ones((MAX_LENGTH, MAX_LENGTH))).to(DEVICE)

# optimization loop
optimizer = torch.optim.Adam(params=model.parameters(), lr=1e-3)
loss_fn = torch.nn.CrossEntropyLoss()

train_losses = []
val_losses = []

for epoch in range(1, EPOCHS + 1):
    # train loop
    model.train()  # enable dropout and other training-only behavior
    for i, (src, trg) in enumerate(train_data):
        # place tensors on the device as integer token ids
        src = torch.as_tensor(src, dtype=torch.long, device=DEVICE)
        trg = torch.as_tensor(trg, dtype=torch.long, device=DEVICE)

        # forward pass
        out = model(src, trg, mask)

        # compute loss
        train_loss = loss_fn(out.reshape(-1, tgt_vocab), trg.reshape(-1))

        # backprop
        optimizer.zero_grad()
        train_loss.backward()

        # update weights
        optimizer.step()

    # validation loop: dropout disabled, no gradients tracked
    model.eval()
    val_loss = 0
    num_batches = len(dev_data)
    with torch.no_grad():
        for i, (src, trg) in enumerate(dev_data):
            # place tensors on the device as integer token ids
            src = torch.as_tensor(src, dtype=torch.long, device=DEVICE)
            trg = torch.as_tensor(trg, dtype=torch.long, device=DEVICE)

            # forward pass
            out = model(src, trg, mask)

            # compute loss
            loss_val = loss_fn(out.reshape(-1, tgt_vocab), trg.reshape(-1))
            val_loss += loss_val.item()
    val_loss /= num_batches

    val_losses.append(val_loss)
    train_losses.append(train_loss.item())  # loss of the last training batch only

    print(f'Epoch[{epoch}/{EPOCHS}] train_loss: {train_loss.item()} val_loss: {val_loss}')
```
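One thing worth checking in a loop like this is whether the decoder can see the token it is asked to predict. With a lower-triangular mask, position t attends to positions 0..t, so if the same target sequence is both the decoder input and the loss label (and the model doesn't shift it internally, which the post doesn't show), the model can learn to copy and both losses fall together. The usual teacher-forcing setup feeds `trg[:, :-1]` and scores against `trg[:, 1:]`. Below is a minimal sketch under assumptions: batch-first token tensors, a start token at position 0 of each target, and a `model(src, trg_in, mask)` that returns `(batch, len, vocab)` logits. `shifted_batch_loss` and `run_epoch` are hypothetical helper names. It also averages the training loss over all batches so it is comparable with the averaged dev loss.

```python
import torch
from torch import nn


def shifted_batch_loss(model, src, trg, loss_fn, vocab_size):
    """Teacher-forcing loss with the target shifted by one position.

    Assumes (hypothetically) batch-first LongTensors of shape (batch, seq_len),
    a start token at trg[:, 0], and model(src, trg_in, mask) returning logits
    of shape (batch, trg_len, vocab_size).
    """
    trg_in = trg[:, :-1]   # decoder input:  <bos> w1 ... w_{n-1}
    trg_out = trg[:, 1:]   # labels:         w1 ... w_n
    length = trg_in.size(1)
    # causal mask sized to the shifted decoder input
    mask = torch.tril(torch.ones(length, length, device=trg.device))
    logits = model(src, trg_in, mask)
    return loss_fn(logits.reshape(-1, vocab_size), trg_out.reshape(-1))


def run_epoch(model, batches, loss_fn, vocab_size, optimizer=None, device="cpu"):
    """Returns the mean loss over all batches; updates weights only if an optimizer is given."""
    training = optimizer is not None
    model.train(training)  # train mode for training, eval mode otherwise
    total, count = 0.0, 0
    with torch.set_grad_enabled(training):
        for src, trg in batches:
            src = torch.as_tensor(src, dtype=torch.long, device=device)
            trg = torch.as_tensor(trg, dtype=torch.long, device=device)
            loss = shifted_batch_loss(model, src, trg, loss_fn, vocab_size)
            if training:
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
            total += loss.item()
            count += 1
    return total / max(count, 1)
```

Usage would be along the lines of `train_loss = run_epoch(model, train_data, loss_fn, tgt_vocab, optimizer, DEVICE)` followed by `val_loss = run_epoch(model, dev_data, loss_fn, tgt_vocab, device=DEVICE)`, so both numbers are per-epoch means computed the same way.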