Data(face=[3, 43517], pos=[43812, 3])

How could I transform this data?

What kind of transformation would you like to use?

It seems you are dealing with some kind of features or coordinates?

I am dealing with mesh data. I have done the first step by using `.numpy()`.

Now I need to reshape the data into the following form.

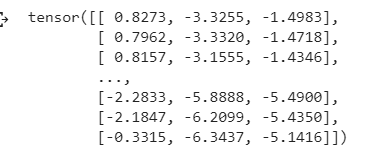

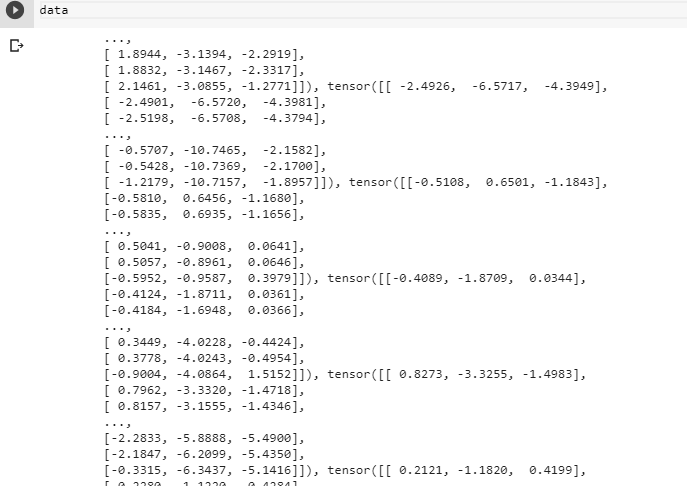

This is my data. It is really similar to the data used with the pretrained model. However, it has no shape and looks like this:

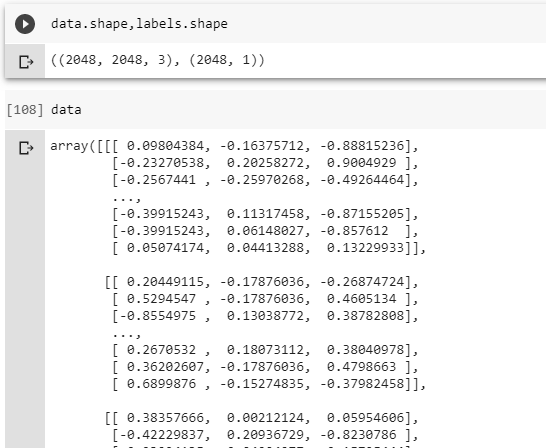

I just checked the datatype. I want to reshape this tuple into an ndarray; a single array in my data corresponds to an array in the pretrained model's data.

Since d is a tuple, you could index it and get the shapes of all internal arrays via:

    for tmp in d:
        print(tmp.shape)

Could you post these shapes, so that we can see how to reshape these arrays?

The shape of the pretrained model's data:

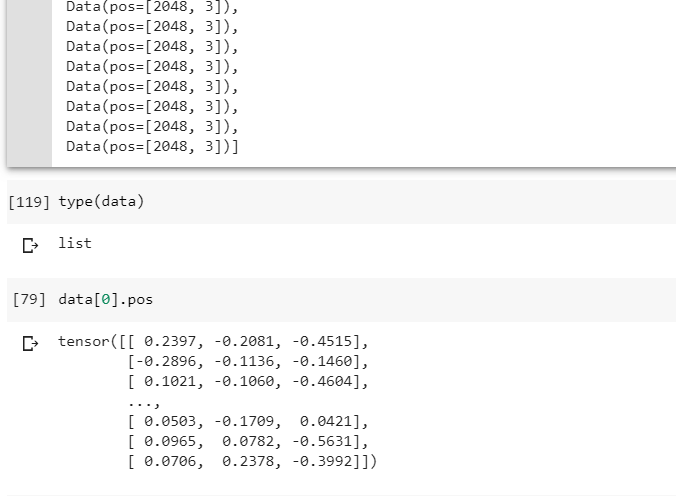

Sorry, my data turns out to be a list.

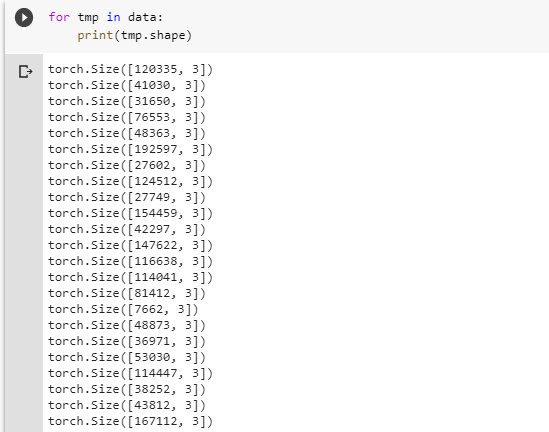

It works with your code as well. These are my shapes; each sample has a different number of cloud points.

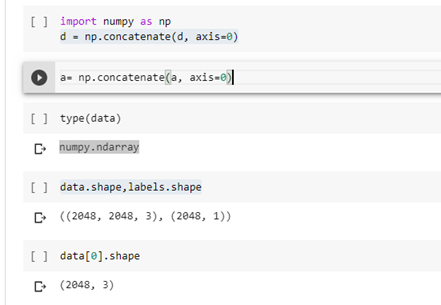

data seems to be a numpy array, not a list, since type(data) returns numpy.ndarray and also data.shape seems to work, while it was raising an error before, so apparently something in the code has changed.

Anyway, tmp seems to have varying shapes, so you won’t be able to reshape it to e.g. a tensor with the shape [2048, 2048, 3], but would need to interpolate, slice, etc. the tensors somehow.
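One way to get equal shapes is to resample every point cloud to the same number of points before stacking. The snippet below is only a minimal sketch of that idea with NumPy: the helper `resample_points`, the target of 2048 points, and the random stand-in clouds are assumptions for illustration, and random subsampling is just one option (it discards points, or duplicates them when a cloud is too small).

```python
import numpy as np

def resample_points(points, n_points, rng):
    """Return exactly n_points rows drawn from a [N, 3] array (hypothetical helper)."""
    n = points.shape[0]
    # Sample without replacement when the cloud is large enough;
    # otherwise sample with replacement, which duplicates some points.
    idx = rng.choice(n, size=n_points, replace=n < n_points)
    return points[idx]

rng = np.random.default_rng(0)

# Stand-in data: three clouds with different numbers of points.
clouds = [rng.random((n, 3)) for n in (43812, 1500, 2048)]

# After resampling, every cloud is [2048, 3], so np.stack works.
fixed = np.stack([resample_points(c, 2048, rng) for c in clouds])
print(fixed.shape)  # (3, 2048, 3)
```

Whether random sampling is acceptable depends on the model; other strategies such as farthest point sampling preserve the geometry more evenly.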

Could you explain your use case a bit, i.e. why would you need to reshape all tensors to a particular shape and how this reshaping should be done, if the number of elements doesn’t match?

PS: you can post code snippets by wrapping them into three backticks ```, which makes debugging easier.

Hey, I do not need the shape to be exactly [2048, 2048, 3]. However, I need a 3-dimensional shape [a, b, 3] so that I can use a graph-classification model: https://github.com/aboulch/ConvPoint/blob/master/examples/modelnet/modelnet_classif.py.

The forward pass of this model takes (x, input_pts), where input_pts.shape = [BatchSize, Dim, NPoints] and x.shape = [BatchSize, C, NPoints].
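To make the expected layout concrete, here is a small NumPy sketch that turns a batch stored as [BatchSize, NPoints, Dim] into the [BatchSize, Dim, NPoints] layout described above. The batch contents are random stand-ins, and using a constant feature with C = 1 for `x` is an assumption for illustration, not something confirmed for this model.

```python
import numpy as np

# Stand-in batch of point clouds: [BatchSize, NPoints, Dim]
batch = np.random.rand(4, 2048, 3).astype(np.float32)

# Swap the last two axes to get [BatchSize, Dim, NPoints].
input_pts = np.transpose(batch, (0, 2, 1))

# Assumption: one constant feature per point, so C = 1.
x = np.ones((batch.shape[0], 1, batch.shape[1]), dtype=np.float32)

print(input_pts.shape)  # (4, 3, 2048)
print(x.shape)          # (4, 1, 2048)
```

Both arrays could then be converted with `torch.from_numpy` before being passed to the model.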

I was trying to load my data exactly like the pretrained model's data, so that I could run this classification without further concerns. However, since the shape varies for each object, I do not know how to do it.

But now there is another possible solution: I have data.pos with shape [Points, Dim] and x with shape [C, NPoints], so all I need to do is add a third dimension. Still, I cannot concatenate or np.stack them, because the dimensions differ.

I truly wish you could help me further. After transforming my data, it becomes a list like this; data[0].pos has shape (2048, 3). How do I turn all the data into arrays and then np.stack them?
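If every element really has a `pos` of shape (2048, 3), stacking could look roughly like the sketch below. The list here is a stand-in built from `SimpleNamespace` objects holding NumPy arrays; with real data objects whose `pos` is a CPU tensor, `np.asarray(d.pos)` or `d.pos.numpy()` should play the same role, but that is an assumption about the data.

```python
import numpy as np
from types import SimpleNamespace

# Stand-in for the real list: each item has a .pos of shape (2048, 3).
data = [SimpleNamespace(pos=np.random.rand(2048, 3)) for _ in range(5)]

# Convert each .pos to an ndarray.
arrays = [np.asarray(d.pos) for d in data]

# np.stack needs identical shapes, so check first and fail with a clear message.
shapes = {a.shape for a in arrays}
if len(shapes) != 1:
    raise ValueError(f"Cannot stack arrays with different shapes: {shapes}")

stacked = np.stack(arrays)  # [NumSamples, NPoints, Dim]
print(stacked.shape)  # (5, 2048, 3)
```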