I am trying to train an LSTM, but I don't know where it is failing. I get this error:

```
ModuleAttributeError: 'LSTM' object has no attribute 'hidden_size'
```

Full code in Colab: https://colab.research.google.com/drive/1nHODrwukJZYMMnH-fkMTLlvA9o1ihv-r?usp=sharing

```python
import torch
import torch.nn as nn


class LSTM(nn.Module):
    def __init__(self, input_size=1, hidden_size=100):
        super().__init__()
        self.hidden = None

        # First layer: input_size -> hidden_size features per time step
        self.lstm1 = nn.LSTM(
            input_size=input_size,
            hidden_size=hidden_size,
            num_layers=1,
            batch_first=True,
            bidirectional=False,
        )
        # Second layer: hidden_size -> hidden_size * 2 features per time step
        self.lstm2 = nn.LSTM(
            hidden_size,
            hidden_size * 2,
            num_layers=1,
            batch_first=True,
            bidirectional=False,
        )
        # Linear head: one output value per time step
        self.fc = nn.Linear(hidden_size * 2, 1)

    def forward(self, x):
        x, _ = self.lstm1(x)
        x, _ = self.lstm2(x)
        x = self.fc(x)
        return x
```

Hi, the error is raised at this line:

```python
model.hidden = (torch.zeros(1, 1, model.hidden_size).cuda(),
                torch.zeros(1, 1, model.hidden_size).cuda())
```

Right, so you should modify your LSTM class like this:

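```python
class LSTM(nn.Module):
    def __init__(self, input_size=1, hidden_size=100):
        super(LSTM, self).__init__()
        self.hidden_size = hidden_size  # store the int so model.hidden_size exists
        self.hidden = None
        # ... rest of __init__ unchanged (lstm1, lstm2, fc)
```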

That should do it.
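Putting the suggested fix together with the rest of the class from the question, a minimal CPU-only sketch could look like this. The `.cuda()` calls are dropped so it runs without a GPU, and the input shape `(1, 12, 1)` (batch of 1, 12 time steps, 1 feature) is an arbitrary illustrative choice. Note that this `forward` never reads `self.hidden`, so assigning `model.hidden` no longer fails but also does not change the output.

```python
import torch
import torch.nn as nn


class LSTM(nn.Module):
    def __init__(self, input_size=1, hidden_size=100):
        super().__init__()
        self.hidden_size = hidden_size  # plain int, so model.hidden_size now exists
        self.hidden = None              # (h, c) placeholder; forward() below does not read it

        self.lstm1 = nn.LSTM(input_size=input_size, hidden_size=hidden_size,
                             num_layers=1, batch_first=True)
        self.lstm2 = nn.LSTM(hidden_size, hidden_size * 2,
                             num_layers=1, batch_first=True)
        self.fc = nn.Linear(hidden_size * 2, 1)

    def forward(self, x):
        x, _ = self.lstm1(x)  # (batch, seq_len, hidden_size)
        x, _ = self.lstm2(x)  # (batch, seq_len, hidden_size * 2)
        return self.fc(x)     # (batch, seq_len, 1): one prediction per time step


model = LSTM()
# The line that used to raise the AttributeError now works (CPU version).
model.hidden = (torch.zeros(1, 1, model.hidden_size),
                torch.zeros(1, 1, model.hidden_size))
y = model(torch.randn(1, 12, 1))
print(y.shape)  # torch.Size([1, 12, 1])
```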

Thanks a million @Usama_Hasan for your help. After the change, the performance is very poor, as you can see here: https://colab.research.google.com/drive/1Q7q0dGTylMYMji1E3M104r0149xc9dbh?usp=sharing

I am still not clear on the difference between the `hidden` and `hidden_size` attributes of the model. I am new to this topic, sorry.

Could you recommend a good tutorial on the web for this, or to improve my knowledge of LSTMs for time series?

Thanks.

Sure, you can check my earlier post on this.

Thanks @Usama_Hasan. The multivariate approach is just what I need. Great info. I will have a look at it today and get back to you if I have more questions. Thanks again for your support.

Dear @Usama_Hasan, thanks for the information you provided. It is great. However, I still have some questions.

1. I am still not clear on the difference between `hidden` and `hidden_size` in the model.

Complete code in: Google Colab

```python
import torch
import torch.nn as nn


class LSTM(nn.Module):
    def __init__(self, input_size=1, hidden_size=100):
        super().__init__()
        self.hidden = None
        self.hidden_size = hidden_size

        # First layer: input_size -> hidden_size features per time step
        self.lstm1 = nn.LSTM(
            input_size=input_size,
            hidden_size=hidden_size,
            num_layers=1,
            batch_first=True,
            bidirectional=False,
        )
        # Second layer: hidden_size -> hidden_size * 2 features per time step
        self.lstm2 = nn.LSTM(
            hidden_size,
            hidden_size * 2,
            num_layers=1,
            batch_first=True,
            bidirectional=False,
        )
        # Linear head: one output value per time step
        self.fc = nn.Linear(hidden_size * 2, 1)

    def forward(self, x):
        x, _ = self.lstm1(x)
        x, _ = self.lstm2(x)
        x = self.fc(x)
        return x
```

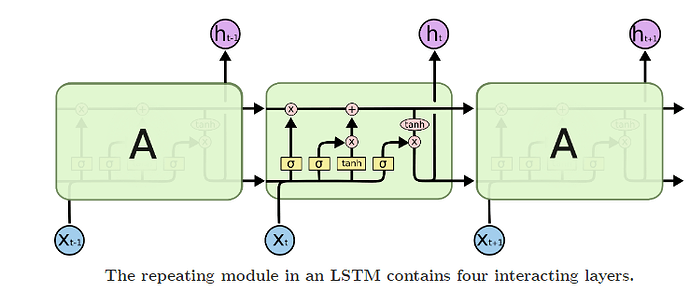
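To illustrate the difference asked about in point 1, here is a small sketch using `nn.LSTM` directly; the sizes mirror the model above, while batch 4 and sequence length 12 are arbitrary. `hidden_size` is just an integer hyperparameter (`nn.LSTM` also keeps its own copy as `lstm.hidden_size`), whereas a hidden state is the pair of tensors `(h, c)` whose last dimension equals `hidden_size`. The sketch also shows that the state tensors are not batch-first, that omitting them means starting from zeros, and that a state sized for `lstm1` does not fit `lstm2`. So a `(1, 1, hidden_size)` state like the one in the thread only matches the first layer with a batch of one sequence.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# hidden_size: an int fixed at construction time (the width of h and c).
lstm1 = nn.LSTM(input_size=1, hidden_size=100, batch_first=True)
lstm2 = nn.LSTM(input_size=100, hidden_size=200, batch_first=True)
print(lstm1.hidden_size, lstm2.hidden_size)  # 100 200

batch, seq_len = 4, 12
x = torch.randn(batch, seq_len, 1)  # batch_first=True -> (batch, seq_len, features)

# hidden: the state tensors (h0, c0). They are NOT batch-first even with
# batch_first=True: each is (num_layers * num_directions, batch, hidden_size).
h0 = torch.zeros(1, batch, lstm1.hidden_size)
c0 = torch.zeros(1, batch, lstm1.hidden_size)
out1, (h_n, c_n) = lstm1(x, (h0, c0))
out_default, _ = lstm1(x)  # omitting the state means "start from zeros"
print(h_n.shape, c_n.shape)  # torch.Size([1, 4, 100]) torch.Size([1, 4, 100])
print(torch.allclose(out1, out_default))  # True

# A state sized for lstm1 cannot initialise lstm2, whose hidden_size is 200.
mismatch_raised = False
try:
    lstm2(out1, (h_n, c_n))
except RuntimeError as err:
    mismatch_raised = True
    print("shape mismatch:", err)
```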

2. Why is `c_n` (the cell state) not used in the second LSTM, as it is here?

https://colah.github.io/posts/2015-08-Understanding-LSTMs/
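On point 2, one possible reading: in colah's diagrams the cell state flows from one time step to the next inside a single LSTM layer. `nn.LSTM` does that internally, and the `_` discarded in `forward` is only the final `(h_n, c_n)` after the last step. Stacked layers pass the output sequence (the `h` at every time step) upward, not `c`. The sketch below, with arbitrary sizes, unrolls an `nn.LSTMCell` that is given the same weights as a single-layer `nn.LSTM`, to show that `c` is carried step by step and ends up equal to the `c_n` that `nn.LSTM` returns.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
lstm = nn.LSTM(input_size=1, hidden_size=8, batch_first=True)
cell = nn.LSTMCell(input_size=1, hidden_size=8)

# Give the cell exactly the same weights as the single-layer nn.LSTM.
with torch.no_grad():
    cell.weight_ih.copy_(lstm.weight_ih_l0)
    cell.weight_hh.copy_(lstm.weight_hh_l0)
    cell.bias_ih.copy_(lstm.bias_ih_l0)
    cell.bias_hh.copy_(lstm.bias_hh_l0)

x = torch.randn(3, 6, 1)  # (batch, seq_len, features)
out, (h_n, c_n) = lstm(x)

# Manual unroll: (h, c) from each time step is fed into the next one,
# which is what nn.LSTM does internally.
h = torch.zeros(3, 8)
c = torch.zeros(3, 8)
for t in range(x.size(1)):
    h, c = cell(x[:, t, :], (h, c))

print(torch.allclose(h, h_n[0], atol=1e-6))   # final hidden state matches
print(torch.allclose(c, c_n[0], atol=1e-6))   # final cell state matches
print(torch.allclose(out[:, -1, :], h_n[0]))  # last output step is the final h
```

In other words, `c` is used at every step inside each layer; what the next layer sees is `out`, the sequence of `h` values.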

Thanks in advance.