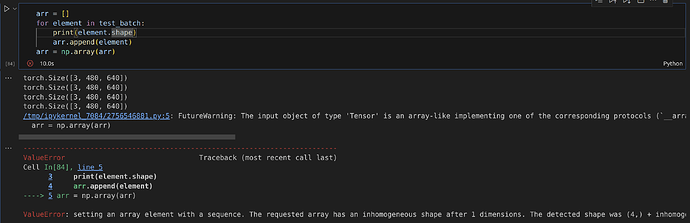

Hello, I recently tried to train a neural network using Sequential and a DataLoader. The DataLoader returns a tuple of length 4 in which every element is an image stored as a torch.Tensor. Because the network needs a single Tensor as input rather than a tuple, I tried converting the tuple to a numpy array first and then converting that array to a Tensor. However, the conversion to a numpy array fails with the following error: ValueError: setting an array element with a sequence. The requested array has an inhomogeneous shape after 1 dimensions. The detected shape was (4,) + inhomogeneous part. Each tensor has the same shape, as shown in the attached image.

Is this expected behaviour when the tuple elements are tensors? Interestingly, the same code works fine in Google Colab. A workaround I found is to first convert every element of the tuple to a numpy array and then convert the whole thing to a Tensor.
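For reference, here is a minimal sketch of another way to get a single Tensor from the tuple without going through numpy at all, using `torch.stack`. The `batch` tuple is a hypothetical stand-in for what my DataLoader returns, and the shape `(3, 32, 32)` is only an assumption for illustration. It relies on all four tensors having the same shape, which is the case here.

```python
import torch

# Hypothetical stand-in for one DataLoader batch: a tuple of 4 image tensors
# that all share the same shape (3x32x32 is an assumed size, not my real data).
batch = tuple(torch.rand(3, 32, 32) for _ in range(4))

# torch.stack joins same-shaped tensors along a new leading dimension,
# so no round trip through numpy is needed.
stacked = torch.stack(batch)

print(stacked.shape)
```

The result has one extra leading dimension of size 4, so it can be passed to the network as a regular batch.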