Good afternoon!

I have questions about the following tutorial:

https://pytorch.org/tutorials/beginner/data_loading_tutorial.html

I have a similar dataset (images + landmarks). I’ve built the custom dataloader following the tutorial and checked the types of dataloader components (torch.float64 for both images and landmarks).

Then I applied the dataloader to the classification model with this training class:

```python
class Trainer():
    def __init__(self, criterion=None, optimizer=None, schedular=None):
        self.criterion = criterion
        self.optimizer = optimizer
        self.schedular = schedular

    def train_batch_loop(self, model, train_dataloader):
        train_loss = 0.0
        train_acc = 0.0

        for images, landmarks, labels in train_dataloader:
            images = images.to(device)
            landmarks = landmarks.to(device)
            labels = labels.to(device)

            self.optimizer.zero_grad()
```

I won’t elaborate further because training crashes at `images = images.to(device)` with the following error: `AttributeError: 'str' object has no attribute 'to'`

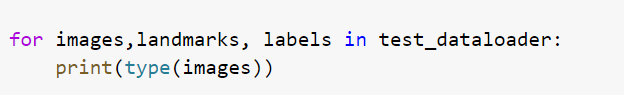

I don’t understand where this string is coming from if all the dataloader components are torch.float64.
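
One possible source of the string, offered as a guess rather than a confirmed diagnosis: the tutorial's dataset returns each sample as a dict, and the default DataLoader collation keeps a dict as a dict of batched values. If my dataset also returns a dict with three keys, then unpacking a batch into three names would hand back the keys, which are strings. The sketch below uses a plain dict with made-up keys (`"image"`, `"landmarks"`, `"label"`) and lists in place of tensors, so it only illustrates the unpacking behaviour, not my actual dataset.

```python
# Hypothetical stand-in for one collated batch; plain lists replace tensors.
batch = {
    "image": [[0.1, 0.2], [0.3, 0.4]],
    "landmarks": [[1.0, 2.0], [3.0, 4.0]],
    "label": [0, 1],
}

# Tuple-unpacking a dict iterates over its keys, not its values,
# so each name ends up bound to a key string.
images, landmarks, labels = batch
print(type(images), images)

# Indexing by key returns the actual batched data instead.
images_ok = batch["image"]
landmarks_ok = batch["landmarks"]
labels_ok = batch["label"]
print(type(images_ok))
```

A quick way to check whether this applies is to inspect a single batch before the training loop, for example `batch = next(iter(train_dataloader))` followed by `print(type(batch))`.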

I went back to check the initial data: in the tutorial, the landmarks are summarized in a pandas dataframe with landmark values as int64 and image name as “object”.

In my summary dataframe, the image name is an “object” as well, and the landmarks are numpy.float64. Again, no strings anywhere…
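
A small check on the “object” dtype, using a made-up two-row table (the column names `image_name`, `x0`, `y0` are hypothetical): in pandas, an object column of file names holds ordinary Python `str` values, so a dataframe like mine may contain strings after all. This does not show that the image name is what reaches the training loop, only that the dtype alone does not rule strings out.

```python
import pandas as pd

# Hypothetical summary table: one file-name column plus float landmark columns.
df = pd.DataFrame({
    "image_name": pd.Series(["img_0.jpg", "img_1.jpg"], dtype=object),
    "x0": [10.5, 20.25],
    "y0": [30.0, 40.75],
})

# The column dtype is reported as object, not as a string type.
print(df.dtypes)

# The individual values stored in that column are plain Python strings.
first_name = df["image_name"].iloc[0]
print(type(first_name))
```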

I will share the code for creating the summary dataframe in the first comment.

Appreciate any ideas!