Hi all,

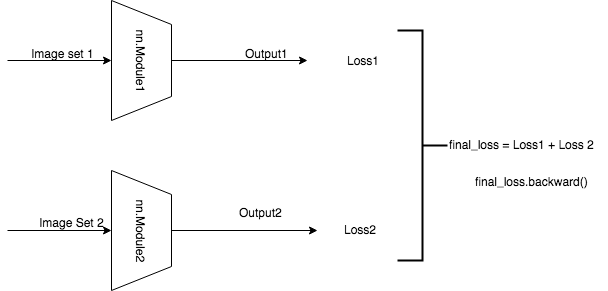

Here is my network architecture, shown in the image above.

I have two separate networks, each an `nn.Module`. `loss_1` and `loss_2` come from these two networks respectively, and `final_loss = loss_1 + loss_2`.

Will a single `final_loss.backward()` call still compute gradients in both networks?

I have two separate optimizers, each updating the parameters of its own network.

If not, what is the correct way to backpropagate the combined loss through each of these networks?
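The setup described above can be sketched roughly as follows. This is a hypothetical minimal example: the `nn.Linear` stand-ins, layer sizes, MSE losses, random data, and SGD optimizers are assumptions for illustration, not the real architecture from the image. It sums the two losses, calls `backward()` once, and then checks whether every parameter in both networks received a gradient.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-ins for the two separate networks
net_1 = nn.Linear(4, 1)
net_2 = nn.Linear(4, 1)

# One optimizer per network, as in the setup described above
opt_1 = torch.optim.SGD(net_1.parameters(), lr=0.1)
opt_2 = torch.optim.SGD(net_2.parameters(), lr=0.1)

loss_fn = nn.MSELoss()
x = torch.randn(8, 4)    # assumed shared input
y_1 = torch.randn(8, 1)  # assumed target for network 1
y_2 = torch.randn(8, 1)  # assumed target for network 2

# Clear any stale gradients in both networks
opt_1.zero_grad()
opt_2.zero_grad()

loss_1 = loss_fn(net_1(x), y_1)
loss_2 = loss_fn(net_2(x), y_2)
final_loss = loss_1 + loss_2

# Single backward pass on the summed loss; autograd walks both
# branches of the graph, so both networks get .grad populated
final_loss.backward()

grads_populated = all(p.grad is not None for p in net_1.parameters()) and all(
    p.grad is not None for p in net_2.parameters()
)
print(grads_populated)  # True

# Each optimizer then updates only its own network's parameters
weight_1_before = net_1.weight.detach().clone()
opt_1.step()
opt_2.step()
```

Because `loss_2` does not depend on `net_1` (and vice versa), the gradient each network receives from `final_loss` in this sketch is the same as it would get from its own loss alone.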