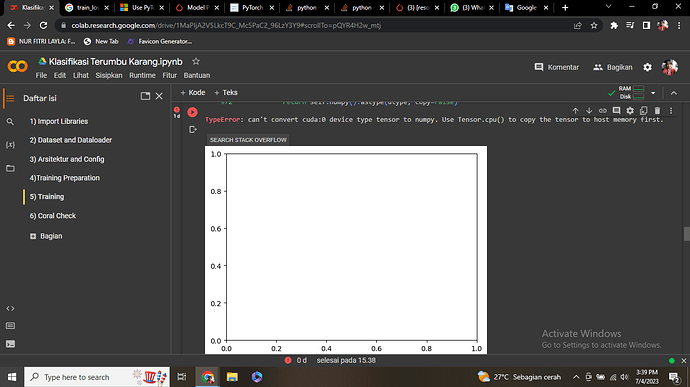

Hello miss, I have the same problem: I can't view the train_loss because I am using CUDA. What should I do to fix this error? Please help me.

    from torch.autograd import Variable

    # Model training and saving best model
    best_accuracy=0.0

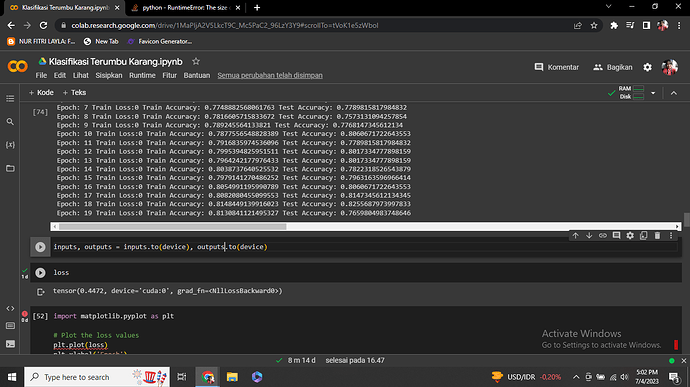

    for epoch in range(num_epochs):

        # Evaluation and training on training dataset
        model.train()
        train_accuracy=0.0
        train_loss=0.0

        for i, (inputs, labels) in enumerate(trainloader):
            if torch.cuda.is_available():
                inputs = Variable(inputs.cuda())
                labels = Variable(labels.cuda())

            optimizer.zero_grad()
            outputs=model(inputs)
            loss=loss_function(outputs,labels)
            loss.backward()
            optimizer.step()

            train_loss += loss.cpu().data*inputs.size(0)
            _,prediction=torch.max(outputs,1)
            train_accuracy+= int(torch.sum(prediction==labels.data))

        train_accuracy=train_accuracy/train_count
        train_loss=train_loss/train_count

        # Evaluation on testing dataset
        model.eval()
        test_accuracy=0.0

        for i, (inputs, labels) in enumerate(testloader):
            if torch.cuda.is_available():
                inputs = Variable(inputs.cuda())
                labels = Variable(labels.cuda())

            outputs=model(inputs)
            _,prediction=torch.max(outputs.data,1)
            test_accuracy+=int(torch.sum(prediction==labels.data))

        test_accuracy=test_accuracy/test_count

        print(' Epoch: '+str(epoch)+' Train Loss:'+str(int(train_loss))+' Train Accuracy: '+str(train_accuracy)+ ' Test Accuracy: '+str(test_accuracy))

        # Save the best model
        if test_accuracy>best_accuracy:
            torch.save(model.state_dict(), 'best_checkpoint.model')
            best_accuracy=test_accuracy
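One thing that might help here, as a sketch under assumptions rather than a tested fix for your setup: accumulate the loss as a plain Python float with loss.item() instead of keeping a tensor around, and print it without int(), since int() truncates any loss below 1 to 0. The tiny linear model, the random dataset and the hyperparameters below are hypothetical stand-ins so the snippet runs on its own; only the loop structure mirrors the posted code.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-ins for the model, data and loss from the post.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
torch.manual_seed(0)
dataset = TensorDataset(torch.randn(32, 4), torch.randint(0, 2, (32,)))
trainloader = DataLoader(dataset, batch_size=8)
train_count = len(dataset)

model = nn.Linear(4, 2).to(device)
loss_function = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

model.train()
train_loss = 0.0
train_accuracy = 0.0

for inputs, labels in trainloader:
    # .to(device) works on both CPU-only and CUDA machines.
    inputs, labels = inputs.to(device), labels.to(device)

    optimizer.zero_grad()
    outputs = model(inputs)
    loss = loss_function(outputs, labels)
    loss.backward()
    optimizer.step()

    # .item() copies the scalar to the host and returns a Python float.
    train_loss += loss.item() * inputs.size(0)
    prediction = outputs.argmax(dim=1)
    train_accuracy += (prediction == labels).sum().item()

train_loss /= train_count
train_accuracy /= train_count

# Format the float directly; int(train_loss) would print 0 for any loss below 1.
print(f"Train Loss: {train_loss:.4f} Train Accuracy: {train_accuracy:.4f}")
```

With this, train_loss is an ordinary float regardless of whether the model runs on the GPU, so it can be printed, logged or plotted directly.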

Hello, can anyone help me solve this error?

    loss_array = np.array(metric_dict['loss']).mean(0)
      File "C:\Users\QUMLG.conda\envs\sumon2\lib\site-packages\numpy\core\_methods.py", line 151, in _mean
        ret = umr_sum(arr, axis, dtype, out, keepdims)
      File "C:\Users\QUMLG.conda\envs\sumon2\lib\site-packages\torch\tensor.py", line 492, in __array__
        return self.numpy()
    TypeError: can't convert cuda:0 device type tensor to numpy. Use Tensor.cpu() to copy the tensor to host memory first.

I tried to move it to the CPU, but it says the dict object has no attribute cpu.

Try to index the tensor inside the dict and call .cpu() on it.

Assuming metric_dict is the dict and metric_dict["loss"] is a tensor, use loss_array = metric_dict["loss"].detach().cpu().numpy().
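A minimal sketch of that call chain, assuming metric_dict["loss"] really is a single tensor. The dict contents and the weights tensor are made up for illustration. The detach() matters because calling numpy() on a tensor that requires grad raises an error, and cpu() is a no-op if the tensor is already on the host.

```python
import numpy as np
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical dict holding one loss tensor that still tracks gradients.
weights = torch.ones(3, device=device, requires_grad=True)
metric_dict = {"loss": (weights * 2.0).sum()}

# detach() drops the autograd graph, cpu() copies to host memory,
# numpy() then exposes the host data as a numpy array.
loss_array = metric_dict["loss"].detach().cpu().numpy()
print(type(loss_array), loss_array)
```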

It says the dict object has no attribute detach. I am using PyTorch 1.5.

In that case metric_dict["loss"] seems to return another dict and you should index it again until the tensor is returned, which you could then detach.
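If the nesting depth is not known in advance, a small recursive helper can do the indexing for you. This is only a sketch: to_numpy is a hypothetical helper name, and the nested layout of metric_dict below is invented, since the real structure was not posted.

```python
import numpy as np
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def to_numpy(obj):
    """Recursively replace tensors in nested dicts/lists/tuples with numpy arrays."""
    if isinstance(obj, torch.Tensor):
        return obj.detach().cpu().numpy()
    if isinstance(obj, dict):
        return {key: to_numpy(value) for key, value in obj.items()}
    if isinstance(obj, (list, tuple)):
        return [to_numpy(item) for item in obj]
    # Anything else (floats, numpy arrays, strings) is returned unchanged.
    return obj


# Hypothetical nested layout; the real one may differ.
metric_dict = {
    "loss": {
        "train": [torch.tensor(0.5, device=device), torch.tensor(0.25, device=device)],
        "val": torch.tensor(1.0, device=device),
    }
}

converted = to_numpy(metric_dict)
train_mean = np.mean(converted["loss"]["train"])
print(train_mean)
```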

Thank you, sir. My loss metric looks like this:

    Loss of metric [[array(7.0471724e-05, dtype=float32), array(2.9985833e-05, dtype=float32), array(1.5520713e-05, dtype=float32), array(1.4465119e-05, dtype=float32)],
     [array(2.7676444e-05, dtype=float32), array(1.40491e-05, dtype=float32), array(1.3627343e-05, dtype=float32)],
     [array(2.530995e-05, dtype=float32), array(9.145156e-06, dtype=float32), array(1.6164795e-05, dtype=float32)]]

How can I move all of them to the CPU?

array indicates that you might already be using numpy arrays, so there might be no need to move anything around. Check the type of each entry.
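A quick way to check this, sketched with the values from the posted output rebuilt as numpy arrays (entry_types is a hypothetical helper name). Note also that the posted rows have different lengths (4, 3 and 3), so np.array(...).mean(0) would not get a rectangular array to work with; computing one mean per row sidesteps that.

```python
import numpy as np


def arr(value):
    # Rebuild one posted entry as a 0-d float32 numpy array.
    return np.array(value, dtype=np.float32)


loss_metric = [
    [arr(7.0471724e-05), arr(2.9985833e-05), arr(1.5520713e-05), arr(1.4465119e-05)],
    [arr(2.7676444e-05), arr(1.40491e-05), arr(1.3627343e-05)],
    [arr(2.530995e-05), arr(9.145156e-06), arr(1.6164795e-05)],
]


def entry_types(rows):
    """Return the set of type names found among the entries of a nested list."""
    return {type(entry).__name__ for row in rows for entry in row}


types_found = entry_types(loss_metric)
row_lengths = [len(row) for row in loss_metric]

# One mean per row, which also works when the rows are ragged.
row_means = [float(np.mean([float(entry) for entry in row])) for row in loss_metric]

print(types_found, row_lengths, row_means)
```

If types_found contains anything other than ndarray (for example Tensor), those are the entries that still need .detach().cpu() before numpy can use them.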

But why did I encounter the CUDA error? I am using a huge 1.3 million CSV dataset and a 16 GB NVIDIA GPU.

I don’t know, since your error message doesn’t match the posted output. The input might depend on the actual iteration, etc., so you would need to narrow down why and when numpy arrays are used and when tensors are.
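One way to narrow it down is to record the type and device of each value at the moment it is stored, together with the iteration index. The sketch below is only an illustration: describe is a hypothetical helper, and the values list stands in for whatever the training loop actually appends to the metric.

```python
import numpy as np
import torch


def describe(value):
    """Return a short label with the type and, for tensors, the device."""
    if isinstance(value, torch.Tensor):
        return f"Tensor on {value.device}"
    return type(value).__name__


# Hypothetical values standing in for what gets appended per iteration.
values = [np.float32(0.1), torch.tensor(0.2), 0.3]

history = []
for iteration, value in enumerate(values):
    history.append((iteration, describe(value)))

for iteration, label in history:
    print(iteration, label)
```

Any iteration whose label reads "Tensor on cuda:0" would be the one that later trips the numpy conversion.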

Hello, I have the same issue. Did you resolve it?