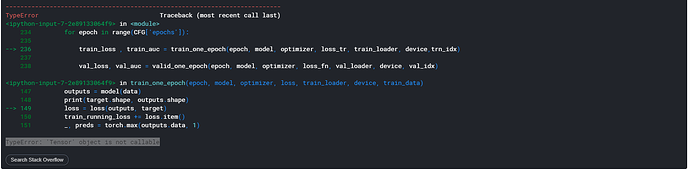

I don’t know what the actual error is. Why is it showing this?

Here is my script:

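```python
import torch
from tqdm import tqdm


def train_one_epoch(epoch, model, optimizer, criterion, train_loader, device, train_data):
    # `train_data` is kept so existing calls still work; it is no longer needed
    print('Training')
    model.train()

    train_running_loss = 0.0
    train_running_correct = 0
    n_samples = 0

    # len(train_loader) is the exact number of batches, including a partial last one
    for i, batch in tqdm(enumerate(train_loader), total=len(train_loader)):
        data, target = batch[0].to(device), batch[1].to(device)

        optimizer.zero_grad()
        outputs = model(data)
        # print(target.shape, outputs.shape)  # debug: check shapes

        # Store the result under a new name. Writing `loss = loss(outputs, target)`
        # overwrites the loss function with a tensor, so the second batch fails with
        # "TypeError: 'Tensor' object is not callable".
        batch_loss = criterion(outputs, target)
        batch_loss.backward()
        optimizer.step()

        # batch_loss is the mean over the batch, so weight it by the batch size
        batch_size = target.size(0)
        train_running_loss += batch_loss.item() * batch_size
        preds = outputs.argmax(dim=1)
        train_running_correct += (preds == target).sum().item()
        n_samples += batch_size

    train_loss = train_running_loss / n_samples
    train_accuracy = 100.0 * train_running_correct / n_samples
    return train_loss, train_accuracy
```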
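Reusing the name `loss` for both the loss function and its result (as in `loss = loss(outputs, target)`) works on the first batch and then fails on the second, because the name now points to the result instead of the function. With PyTorch the message is `TypeError: 'Tensor' object is not callable`. The sketch below reproduces the same pattern in plain Python; `squared_error`, `train_broken` and `train_fixed` are hypothetical stand-ins for the real loss and training loop, with floats in place of tensors.

```python
def squared_error(pred, target):
    """Stand-in for a loss function such as nn.CrossEntropyLoss()."""
    return (pred - target) ** 2


def train_broken(loss, batches):
    total = 0.0
    for pred, target in batches:
        # Rebinds `loss` from the function to a float; on the next
        # iteration Python tries to call that float and raises TypeError.
        loss = loss(pred, target)
        total += loss
    return total


def train_fixed(criterion, batches):
    total = 0.0
    for pred, target in batches:
        batch_loss = criterion(pred, target)  # `criterion` stays callable
        total += batch_loss
    return total


batches = [(1.0, 0.0), (3.0, 1.0)]
print(train_fixed(squared_error, batches))  # 5.0
try:
    train_broken(squared_error, batches)
except TypeError as exc:
    print(exc)  # 'float' object is not callable
```

Giving the function and its per-batch result different names (`criterion` / `batch_loss` here) is enough to avoid the problem.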

```python
import torch
from torch.cuda.amp import GradScaler

# folds, train, TRAIN_PATH, CFG, prepare_dataloader, CassvaImgClassifier and
# valid_one_epoch are defined elsewhere in the notebook.
for fold, (trn_idx, val_idx) in enumerate(folds):
    if fold > 0:
        break  # only train the first fold for now

    print('Training with {} started'.format(fold))
    print(len(trn_idx), len(val_idx))

    train_loader, val_loader = prepare_dataloader(train, trn_idx, val_idx, data_root=TRAIN_PATH)
    device = torch.device(CFG['device'])
    model = CassvaImgClassifier(CFG['model_arch'], train.label.nunique(), pretrained=True).to(device)

    scaler = GradScaler()  # not used yet (mixed precision)
    optimizer = torch.optim.Adam(model.parameters(), lr=CFG['lr'], weight_decay=CFG['weight_decay'])
    #scheduler = torch.optim.lr_scheduler.StepLR(optimizer, gamma=0.1, step_size=CFG['epochs']-1)
    scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(
        optimizer, T_0=CFG['T_0'], T_mult=1, eta_min=CFG['min_lr'], last_epoch=-1)
    #scheduler = torch.optim.lr_scheduler.OneCycleLR(optimizer=optimizer, pct_start=0.1, div_factor=25,
    #    max_lr=CFG['lr'], epochs=CFG['epochs'], steps_per_epoch=len(train_loader))

    loss_tr = torch.nn.CrossEntropyLoss()  # MyCrossEntropyLoss().to(device)
    loss_fn = torch.nn.CrossEntropyLoss()

    # Spelled `valdition_loss` before, so the comparison below raised NameError
    validation_loss = float('inf')

    for epoch in range(CFG['epochs']):
        train_loss, train_acc = train_one_epoch(epoch, model, optimizer, loss_tr, train_loader, device, trn_idx)
        val_loss, val_acc = valid_one_epoch(epoch, model, optimizer, loss_fn, val_loader, device, val_idx)
        scheduler.step()  # the scheduler was created but never stepped; advance it once per epoch

        print(f"Train Loss: {train_loss:.4f}, Train Acc: {train_acc:.2f}")
        print(f'Val Loss: {val_loss:.4f}, Val Acc: {val_acc:.2f}')

        if val_loss < validation_loss:
            validation_loss = val_loss  # remember the new best so only improvements are saved
            torch.save(model.state_dict(), '{}_fold_{}_{}'.format(CFG['model_arch'], fold, epoch))
```
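Saving only the best model needs the threshold variable to have one consistent name and to be updated after every save; otherwise every epoch that beats the initial value (99999 here) is saved. A minimal pure-Python sketch of that bookkeeping, assuming a hypothetical list of per-epoch validation losses in place of `valid_one_epoch` output and recording epoch numbers in place of calling `torch.save`:

```python
def select_checkpoints(val_losses):
    """Return the epochs at which a checkpoint would be saved, and the best loss.

    `val_losses` is a hypothetical list of per-epoch validation losses; in the
    real loop the save would be torch.save(model.state_dict(), ...).
    """
    best = float('inf')  # any real loss beats this on the first epoch
    saved_epochs = []
    for epoch, val_loss in enumerate(val_losses):
        if val_loss < best:
            best = val_loss             # raise the bar for later epochs
            saved_epochs.append(epoch)  # stand-in for torch.save(...)
    return saved_epochs, best


print(select_checkpoints([0.9, 0.7, 0.8, 0.6, 0.65]))  # ([0, 1, 3], 0.6)
```

Starting from `float('inf')` instead of a large constant such as 99999 guarantees the first epoch is always saved, whatever the scale of the loss.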