Hello,

I got the following error while training my model and got stuck.

TypeError: 'Tensor' object is not callable

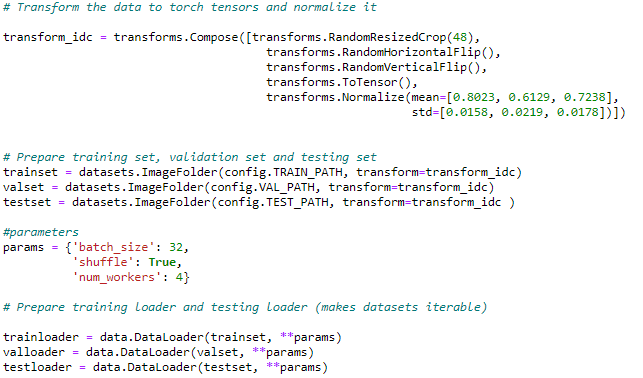

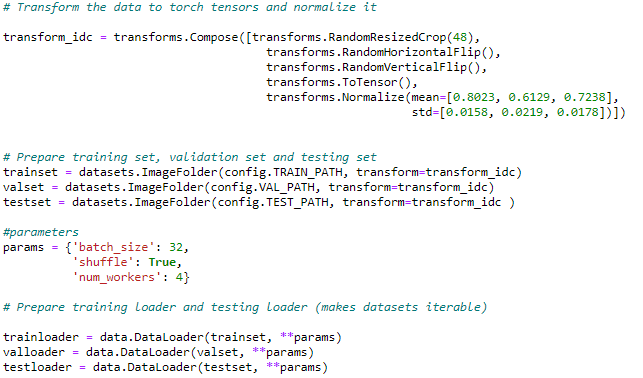

loaders:

model:

helper functions to train and validate

training loop and error log:

Hi,

The problem is the way you defined criterion: line 6 in the last image, i.e. the first line of the method fit.

You need to pass a class object, like criterion = torch.nn.BCEWithLogitsLoss(). Note that you don't need to pass the inputs/outputs when you define it.
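A minimal sketch of the difference, using random tensors as stand-ins for real model outputs and labels: the loss object is created once with no tensors and is then called with (outputs, targets) inside the loop. The second half reproduces the original error, assuming that criterion ended up bound to a tensor (for example, the result of calling a loss function at definition time).

```python
import torch
import torch.nn as nn

# Create the loss object once, without passing any tensors.
criterion = nn.BCEWithLogitsLoss()

logits = torch.randn(4, 1)                     # raw model outputs (no sigmoid applied)
targets = torch.randint(0, 2, (4, 1)).float()  # BCE targets must be float, same shape as logits
loss = criterion(logits, targets)              # call it with tensors inside the training loop
print(loss.shape)                              # a scalar: torch.Size([])

# What goes wrong if "criterion" is already a tensor instead of a loss object:
bad_criterion = criterion(logits, targets)     # this is a Tensor
error_message = None
try:
    bad_criterion(logits, targets)
except TypeError as err:
    error_message = str(err)
print(error_message)                           # the "'Tensor' object is not callable" error
```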

Also, it seems you defined a custom method called binary_cross_entropy for the criterion. The problem comes from this method.

It would be great if you could share the code as text instead of images, wrapped in a pair of ``` for easier interaction.

Bests

Thank you so much for the swift input. This seems to fix the original TypeError ('Tensor' object is not callable), but now I am getting a different error: RuntimeError: The size of tensor a (2) must match the size of tensor b (32) at non-singleton dimension 1.

I used the following class:

```python
# code source: Feature Request: Add BCELossWithLogits to replace BCELoss for numerical stability. · Issue #751 · pytorch/pytorch · GitHub
import torch.nn as nn


class StableBCELoss(nn.modules.Module):
    def __init__(self):
        super(StableBCELoss, self).__init__()

    def forward(self, input, target):
        # Numerically stable BCE on logits: max(x, 0) - x * y + log(1 + exp(-|x|))
        neg_abs = - input.abs()
        loss = input.clamp(min=0) - input * target + (1 + neg_abs.exp()).log()
        return loss.mean()
```

It looks like the issue is still the way the criterion is implemented, and may not be the tensor size/dimension. My code seems to be infested with bugs; any additional help is highly appreciated. Thank you.

Here is the code as text:

```python
# Define a function to train on batches of IDC images (one epoch)
def train(trainloader, model, criterion, optimizer, scheduler):
    total_loss = 0.0
    size = len(trainloader.dataset)
    num_batches = size // trainloader.batch_size
    model.train()
    for i, (images, labels) in enumerate(trainloader):
        print(f"Training: {i}/{num_batches}", end="\r")
        scheduler.step()
        images = images.to(device)  # `device` is defined elsewhere in the script
        labels = labels.to(device)
        optimizer.zero_grad()
        outputs = model(images)  # forward pass
        loss = criterion(outputs, labels)
        total_loss += loss.item() * images.size(0)  # sum of per-sample losses
        loss.backward()  # backpropagation
        optimizer.step()
    return total_loss / size  # average loss over the whole dataset
```

```python
import torch


# Define a function to compute the loss and accuracy on the validation set
def validate(valloader, model, criterion):
    model.eval()
    with torch.no_grad():
        total_correct = 0
        total_loss = 0.0
        size = len(valloader.dataset)
        num_batches = size // valloader.batch_size
        for i, (images, labels) in enumerate(valloader):
            print(f"Validation: {i}/{num_batches}", end="\r")
            images = images.to(device)  # `device` is defined elsewhere in the script
            labels = labels.to(device)
            outputs = model(images)
            loss = criterion(outputs, labels)
            _, preds = torch.max(outputs, 1)  # predicted class index per image
            total_correct += torch.sum(preds == labels.data)
            total_loss += loss.item() * images.size(0)
    return total_loss / size, total_correct.double() / size
```
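The accuracy lines in validate assume outputs of shape [batch_size, 2] and labels of shape [batch_size] holding class indices. A small worked example with made-up numbers (not data from this thread):

```python
import torch

outputs = torch.tensor([[2.0, -1.0],
                        [0.1,  0.3],
                        [-0.5, 1.5],
                        [1.0,  0.0]])  # shape [4, 2]: one logit per class
labels = torch.tensor([0, 1, 0, 0])   # shape [4]: class indices

_, preds = torch.max(outputs, 1)       # index of the largest logit in each row
correct = torch.sum(preds == labels)   # number of matching predictions
accuracy = correct.double() / labels.size(0)
print(preds.tolist(), accuracy.item())  # [0, 1, 1, 0] 0.75
```

If the labels had shape [batch_size, 1] instead, preds == labels would broadcast to [batch_size, batch_size] and the count would be wrong, so the label shape matters here as well as in the loss.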

```python
import torch
from torch.optim import Adagrad

# main training loop
target_size = torch.rand((48, 48), requires_grad=False)
input_size = torch.rand((48, 48), requires_grad=False)


def fit(model, num_epochs, trainloader, valloader):
    # criterion = WeightedBCELoss()
    criterion = StableBCELoss()
    optimizer = Adagrad(model.parameters(), lr=lr, lr_decay=lr/num_epochs)  # `lr` is defined elsewhere
    # lr_range/num_steps are not arguments of torch.optim.lr_scheduler.OneCycleLR
    # (which takes max_lr and total_steps), so this OneCycleLR is presumably a custom class.
    scheduler = OneCycleLR(optimizer, lr_range=(lr, 1.), num_steps=1000)
    print("epoch\ttrain loss\tvalid loss\taccuracy")
    for epoch in range(num_epochs):
        train_loss = train(trainloader, model, criterion, optimizer, scheduler)
        valid_loss, valid_acc = validate(valloader, model, criterion)
        print(f"{epoch}\t{train_loss:.5f}\t\t{valid_loss:.5f}\t\t{valid_acc:.3f}")
```

There is a built-in class that does exactly this, the one I wrote above (torch.nn.BCEWithLogitsLoss). You don't need to implement it yourself: the issue you linked is from 2017 and has been closed. See this thread about it: BCELoss vs BCEWithLogitsLoss.
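A quick check, on random logits and 0/1 targets chosen only for illustration, that the StableBCELoss formula from the post and the built-in nn.BCEWithLogitsLoss (default reduction="mean") give the same value up to floating-point rounding:

```python
import torch
import torch.nn as nn


class StableBCELoss(nn.Module):
    """Formula from the post: max(x, 0) - x * y + log(1 + exp(-|x|)), averaged."""

    def forward(self, input, target):
        neg_abs = -input.abs()
        loss = input.clamp(min=0) - input * target + (1 + neg_abs.exp()).log()
        return loss.mean()


torch.manual_seed(0)
logits = torch.randn(32, 1) * 10               # include some large-magnitude logits
targets = torch.randint(0, 2, (32, 1)).float()  # float targets, same shape as logits

custom = StableBCELoss()(logits, targets)
builtin = nn.BCEWithLogitsLoss()(logits, targets)
print(custom.item(), builtin.item(), torch.allclose(custom, builtin))
```

Both compute the same stable expression, so swapping in the built-in class changes nothing numerically; it just removes custom code that has to be maintained.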

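About the error: your model's output has shape [batch_size, 2]. I don't know about the labels, but based on the error they have 32 elements where the output has 2 (for example, a 1-D label tensor of shape [batch_size] with batch_size = 32 broadcasts as [1, 32]). Can you explain the labels?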
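A minimal sketch of the mismatch, assuming the labels are a 1-D tensor of class indices and batch_size = 32 (a guess consistent with the error message, not something confirmed in the thread). It reproduces the error and shows two common ways to make the shapes agree:

```python
import torch
import torch.nn as nn

batch_size = 32
outputs = torch.randn(batch_size, 2)         # model output: two logits per image
labels = torch.randint(0, 2, (batch_size,))  # assumed: one class index (0 or 1) per image

# Reproduce: [32, 2] * [32] broadcasts the labels as [1, 32], so dimension 1 is 2 vs 32.
error_message = None
try:
    outputs * labels
except RuntimeError as err:
    error_message = str(err)
print(error_message)

# Option 1: keep two logits per image and use CrossEntropyLoss with integer class labels.
ce_loss = nn.CrossEntropyLoss()(outputs, labels)

# Option 2: have the model emit a single logit per image (e.g. a final nn.Linear(..., 1))
# and use BCEWithLogitsLoss with float labels of the same shape [batch_size, 1].
single_logit = torch.randn(batch_size, 1)    # hypothetical stand-in for such a model's output
bce_loss = nn.BCEWithLogitsLoss()(single_logit, labels.float().unsqueeze(1))
print(ce_loss.item(), bce_loss.item())
```

Option 1 matches the existing torch.max(outputs, 1) accuracy code without changes; option 2 would need the accuracy to be computed by thresholding the single logit instead.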