I’m new to this area, so I have many gaps in my knowledge. First, sorry for the easy questions, but I could not find answers on the internet. Can anybody explain this code to me?

# How does unsqueeze add a batch dimension? What does the 0 mean, and what does [:3,:,:] do?

image = in_transform(image)[:3,:,:].unsqueeze(0)

# How does this code match the size? I don’t understand what shape[-2:] means. I know we have used -1 before, but this is the first time I see -2.

style = load_image('/hockney.jpg', shape=content.shape[-2:]).to(device)

.unsqueeze(0) adds an additional dimension to the passed axis. In this case a dimension in position 0 will be added, which is usually the batch dimension.

[:3, :, :] slices the tensor in dim0 from index 0 to 2 inclusive. This might be necessary, if your in_transform returns a tensor with more than 3 channels (e.g. with an additional alpha channel).

.shape[-2:] returns the last two values of the shape. I guess content might be an image. If that’s the case, you’ll get the height and width using this operation.

Note that the default image tensor format is [channels, height, width].
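The three operations above can be tried on a dummy tensor. This is a minimal sketch, assuming a 4-channel (RGBA) image of size 224x320; the tensor `rgba` and its size are made up for illustration and stand in for whatever `in_transform(image)` returns in the lesson.

```python
import torch

# Hypothetical stand-in for in_transform(image): 4 channels (RGBA), height 224, width 320
rgba = torch.rand(4, 224, 320)

rgb = rgba[:3, :, :]        # keep channels 0, 1, 2 and drop the alpha channel
batched = rgb.unsqueeze(0)  # insert a new dimension of size 1 at position 0

print(rgb.shape)            # torch.Size([3, 224, 320])
print(batched.shape)        # torch.Size([1, 3, 224, 320])

# shape[-2:] takes the last two entries of the shape, i.e. height and width
height_width = batched.shape[-2:]
print(height_width)         # torch.Size([224, 320])
```

Negative indices count from the end, so `shape[-2:]` gives height and width whether or not the batch dimension is present.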

I’m not familiar with the Udacity lessons, so I just assumed you are dealing with some kind of image classification task.

Let me know, if you need more information.

It is the Style Transfer lesson in “Intro to Deep Learning with PyTorch”. Thank you so much. I finished the lessons and am trying the examples now, but I missed some things and can’t find them on the internet. Maybe others like me don’t understand the same lines, and this topic can help them. Can I keep asking under this title, so that somebody can help us as you do? Thank you again, moderator.

Sure, if you need some more explanations/information regarding PyTorch code, feel free to post it here.

I’m trying to create mini-batches in a Udacity lesson for a character-wise RNN, but I don’t understand parts of this code.

def get_batches(arr, batch_size, seq_length):
    '''Create a generator that returns batches of size
       batch_size x seq_length from arr.

       Arguments
       ---------
       arr: Array you want to make batches from
       batch_size: Batch size, the number of sequences per batch
       seq_length: Number of encoded chars in a sequence
    '''
    batch_size_total = batch_size * seq_length
    # total number of batches we can make
    n_batches = len(arr)//batch_size_total

    # Keep only enough characters to make full batches
    arr = arr[:n_batches * batch_size_total]
    # Reshape into batch_size rows
    arr = arr.reshape((batch_size, -1))

    # iterate through the array, one sequence at a time
    for n in range(0, arr.shape[1], seq_length):
        # The features
        x = arr[:, n:n+seq_length]
        # The targets, shifted by one
        y = np.zeros_like(x)
        try:
            y[:, :-1], y[:, -1] = x[:, 1:], arr[:, n+seq_length]
        except IndexError:
            y[:, :-1], y[:, -1] = x[:, 1:], arr[:, 0]
        yield x, y

I don’t understand the part that iterates through the array.

1- What are try, except and yield?

2- Why do we loop with range(0, arr.shape[1], seq_length)? What does it mean?

3- x = arr[:, n:n+seq_length] What does this line do? The first index : means we take all rows (batches), but why do we write n:n+seq_length for the columns?

4- y[:, :-1], y[:, -1] = x[:, 1:], arr[:, n+seq_length] I saw that this line shifts the x array, but how does it do that?

5- y[:, :-1], y[:, -1] = x[:, 1:], arr[:, 0] My last question: how does this differ from the line above? They said that with this line the last index of y wraps around to the start of the array. How does that work?

I know my questions may look weird. I would be pleased if anyone could explain them, because I’m a beginner in this area. PS: sorry for my bad English. @ptrblck

As I said I’m not familiar with the Udacity course, but I will try to answer your questions based on the code snippet you’ve provided.

- A `try ... except` block runs some code in the `try` section. If an exception is thrown (e.g. due to an indexing error), the `except` section will be executed. Usually you could provide a nice error message based on the thrown exception. In your case it looks like the code tries to index `arr` at position `n+seq_length`. If this index is out of bounds, the first element `arr[:, 0]` will be used instead.

  You could get the same behavior with a plain if condition and check if `n+seq_length` is too large for `arr.shape[1]`. You can find more info in the official Python docs.

  `yield` is a keyword in Python, which is similar to a `return` statement, but will continue after the `yield` line of code for the next call. You can create generators with it, which is explained with some examples here.

- `range` defines a loop with a start index, end index, and step size. In your example `n` will advance by `seq_length` in each iteration:

for n in range(0, 10, 2):
    print(n)
> 0
> 2
> 4
> 6
> 8

- You are slicing `arr` in `dim1` from `n` to `n+seq_length`. Assuming `n=5` and `seq_length=3`, you’ll get `arr[:, 5:8]`, i.e. all rows and the columns `[5, 6, 7]`.

- You are using slicing to shift `x` by one and assign it to `y`. `y[:, :-1]` addresses all values in `dim1` up to, but not including, the last one, while `x[:, 1:]` addresses all values from index 1 to the end. Here is a small example:

x = torch.arange(5).float()
y = torch.zeros(5)
y[:-1] = x[1:]
print(x)
> tensor([0., 1., 2., 3., 4.])
print(y)
> tensor([1., 2., 3., 4., 0.])

y[:, -1] = arr[:, n+seq_length] will assign arr[:, n+seq_length] to the last value in y in dim1.

- If `n+seq_length` runs out of bounds, you’ll just assign the first value of `arr` in `dim1` (`arr[:, 0]`) to the last value of `y`.
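To make the points above concrete, here is a small sketch with made-up values. `count_up` and `shifted_targets` are hypothetical helpers, not part of the course code; the second one uses a plain `if` check instead of `try ... except`, which should behave the same way for this case.

```python
import numpy as np


def count_up(limit):
    """Hypothetical generator: execution pauses at each yield and resumes on the next call."""
    for n in range(0, limit, 2):
        yield n


gen = count_up(6)
print(next(gen))  # 0
print(next(gen))  # 2, the loop resumed right after the yield
print(list(gen))  # [4], the values that were still left


def shifted_targets(arr, n, seq_length):
    """Hypothetical helper: build x and y for the window arr[:, n:n+seq_length]."""
    x = arr[:, n:n + seq_length]
    y = np.zeros_like(x)
    y[:, :-1] = x[:, 1:]                 # shift x one step to the left
    if n + seq_length < arr.shape[1]:    # the next column exists
        y[:, -1] = arr[:, n + seq_length]
    else:                                # out of bounds: wrap around to column 0
        y[:, -1] = arr[:, 0]
    return x, y


arr = np.arange(12).reshape(2, 6)        # rows: 0..5 and 6..11

x, y = shifted_targets(arr, 0, 3)
print(y)       # rows [1 2 3] and [7 8 9]

x_last, y_last = shifted_targets(arr, 3, 3)
print(y_last)  # rows [4 5 0] and [10 11 6], the last column wrapped around
```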

Most of these issues are not really PyTorch related and might be looked up quite easily on a Python related site.

Also don’t worry about your English. We’ll ask, if something is incomprehensible.

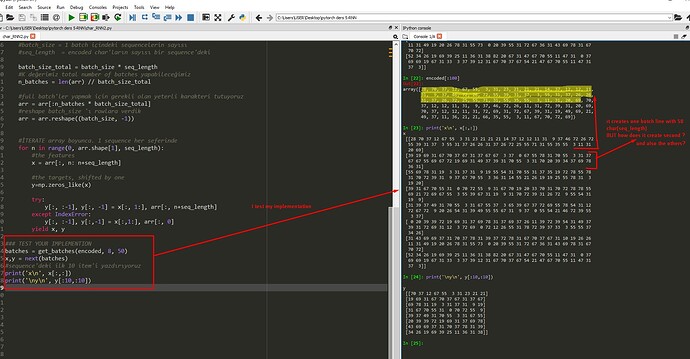

Thanks for answering me. I will read it and check what I missed. Maybe you are right and I should look at a Python site. But these are my last 2 lessons on RNNs and I don’t want to miss how batches are created, because the lecturer said it always causes problems later. So I will bother you a little more. Maybe this screenshot of what I did also helps, and you can see where I got confused. Can you explain it?


Could you try to call encoded = encoded.contiguous() before passing it to get_batches or use arr.view instead of reshape?

I just would like to see the results then.

First of all, thank you so much, sir! You answered my 5 questions clearly and it was awesome. Working with batches is really hard, but I understand more than yesterday. There is a lot of array data flowing through this example and I am trying to understand it completely.

Secondly, I tried to call encoded = encoded.contiguous(), but I get AttributeError: ‘numpy.ndarray’ object has no attribute ‘contiguous’, and arr.view gives TypeError: data type not understood. I really don’t get how the second row of the batch is produced. I printed the first 1000 characters of encoded and searched, but I couldn’t match them. Also, why did I get these 2 errors? I can understand the arr.view error, since it is different from reshape, but why does contiguous raise an error? Now I am trying to understand why you suggested contiguous, and I got lost in the Stack Overflow question “PyTorch - contiguous()”.

This is all of my code. I think I missed something.

import numpy as np
import torch
from torch import nn
import torch.nn.functional as F

with open('anna.txt', 'r') as f:
    text = f.read()

text[:100]

# encode text: map each character to an integer
chars = tuple(set(text))
int2char = dict(enumerate(chars))
char2int = {ch: ii for ii, ch in int2char.items()}

# encode text
encoded = np.array([char2int[ch] for ch in text])
encoded[:100]

'''ONE HOT ENCODE'''
def one_hot_encode(arr, n_labels):
    # Initialize the encoded array
    one_hot = np.zeros((np.multiply(*arr.shape), n_labels), dtype=np.float32)
    # Fill the appropriate elements with ones
    one_hot[np.arange(one_hot.shape[0]), arr.flatten()] = 1.
    # Finally reshape it to get back to the original array
    one_hot = one_hot.reshape((*arr.shape, n_labels))
    return one_hot

# check that the function works
test_seq = np.array([[2,7,1]])
one_hot = one_hot_encode(test_seq, 8)
print(one_hot)
type(one_hot)

'''CREATING BATCHES'''
def get_batches(arr, batch_size, seq_length):
    # creates arrays of size batch_size x seq_length from arr
    # arr = the array to split into batches
    # batch_size = number of sequences in one batch
    # seq_length = number of encoded chars in one sequence
    batch_size_total = batch_size * seq_length
    # K, the total number of batches we can make
    n_batches = len(arr) // batch_size_total
    # keep only enough characters to make full batches
    arr = arr[:n_batches * batch_size_total]
    # reshape so that there are batch_size rows
    arr = arr.reshape((batch_size, -1))

    # iterate through the array, one sequence at a time
    for n in range(0, arr.shape[1], seq_length):
        # the features
        x = arr[:, n: n+seq_length]
        # the targets, shifted by one
        y = np.zeros_like(x)
        try:
            y[:, :-1], y[:, -1] = x[:, 1:], arr[:, n+seq_length]
        except IndexError:
            y[:, :-1], y[:, -1] = x[:, 1:], arr[:, 0]
        yield x, y

### TEST YOUR IMPLEMENTATION
batches = get_batches(encoded, 8, 50)
x, y = next(batches)

# printing the first 10 items in a sequence
print('x\n', x[:,:])
print('\ny\n', y[:10,:10])
print('\nencoded\n', encoded[:1000])

It was my mistake, as I assumed encoded was a tensor, but apparently it is a numpy array, which does not have these methods.
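For reference, a short sketch of the difference, using a small made-up array in place of `encoded`. `torch.from_numpy` is one way to get a tensor from the array if the tensor methods are needed; for the numpy version of `get_batches`, `reshape` is already enough. Note that numpy’s own `ndarray.view` expects a dtype rather than a shape, which would explain the `TypeError` reported above.

```python
import numpy as np
import torch

encoded = np.arange(16)  # made-up stand-in for the encoded text

# A numpy array has no .contiguous() method, hence the AttributeError
print(hasattr(encoded, "contiguous"))    # False

# After converting to a tensor, the tensor methods are available
t = torch.from_numpy(encoded)
print(t.contiguous().view(8, -1).shape)  # torch.Size([8, 2])

# On the numpy side, reshape does the same job
print(encoded.reshape((8, -1)).shape)    # (8, 2)
```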

Have a look at these debug outputs to see, how the array is being reshaped and how x and y are created:

import numpy as np

def get_batches(arr, batch_size, seq_length):
    # creates arrays of size batch_size x seq_length from arr
    # arr = the array to split into batches
    # batch_size = number of sequences in one batch
    # seq_length = number of encoded chars in one sequence
    batch_size_total = batch_size * seq_length
    # K, the total number of batches we can make
    n_batches = len(arr) // batch_size_total
    # keep only enough characters to make full batches
    arr = arr[:n_batches * batch_size_total]
    # reshape so that there are batch_size rows
    arr = arr.reshape((batch_size, -1))
    print('arr after reshape\n', arr)

    # iterate through the array, one sequence at a time
    for n in range(0, arr.shape[1], seq_length):
        # the features
        x = arr[:, n: n+seq_length]
        # the targets, shifted by one
        y = np.zeros_like(x)
        try:
            y[:, :-1], y[:, -1] = x[:, 1:], arr[:, n+seq_length]
        except IndexError:
            y[:, :-1], y[:, -1] = x[:, 1:], arr[:, 0]
        yield x, y

arr = np.arange(500)
print('result\n', next(get_batches(arr, 8, 10)))

As you can see, arr is being reshaped in a continuous manner and then sliced, such that these gaps are created.
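The gaps can also be checked directly with the same toy input (`np.arange(500)`, `batch_size=8`, `seq_length=10`). This sketch only repeats the trimming and reshaping steps of `get_batches`; the variable names are chosen for illustration.

```python
import numpy as np

arr = np.arange(500)
batch_size, seq_length = 8, 10

n_batches = len(arr) // (batch_size * seq_length)  # 500 // 80 = 6
arr = arr[:n_batches * batch_size * seq_length]    # keep the first 480 values
arr = arr.reshape((batch_size, -1))                # 8 rows of 60 consecutive values

x = arr[:, :seq_length]  # first batch: the first 10 columns of every row
print(arr.shape)         # (8, 60)
print(x[0])              # [0 1 2 3 4 5 6 7 8 9]
print(x[1])              # [60 61 62 63 64 65 66 67 68 69]
```

Row 1 starts at 60 because each row holds 60 consecutive values, so neighbouring rows of a batch are 60 positions apart in the original array. The next batch continues each row where the previous one stopped, so its first row holds 10 to 19.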