I am trying to deploy an image classification model on a server using FastAPI.

I have two questions about my code. In the original code (which wasn't run on a server), I read the image with OpenCV and converted it from BGR to RGB. Skipping this conversion gave me inaccurate results at test time.

In the FastAPI code, the image is read as follows, without that conversion:

    def read_image(payload):
        stream = BytesIO(payload)
        image = np.asarray(bytearray(stream.read()), dtype="uint8")
        image = cv2.imdecode(image, cv2.IMREAD_COLOR)
        if isinstance(image, np.ndarray):
            img = Image.fromarray(image)
        return img
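For comparison, the channel swap from the original script could be sketched as below. cv2.imdecode with cv2.IMREAD_COLOR returns the channels in BGR order, whereas Image.fromarray treats a 3-channel uint8 array as RGB, so without a swap the red and blue channels end up exchanged. The helper name bgr_to_rgb is hypothetical; it uses plain NumPy slicing, which for a 3-channel image gives the same result as cv2.cvtColor(image, cv2.COLOR_BGR2RGB).

```python
import numpy as np


def bgr_to_rgb(image: np.ndarray) -> np.ndarray:
    """Reverse the channel axis of an H x W x 3 array (BGR -> RGB)."""
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError("expected an H x W x 3 array")
    # Reverse the last axis; copy so the result is contiguous in memory.
    return np.ascontiguousarray(image[:, :, ::-1])


# A single pure-blue pixel in BGR order becomes (0, 0, 255) in RGB order.
bgr_pixel = np.array([[[255, 0, 0]]], dtype=np.uint8)
rgb_pixel = bgr_to_rgb(bgr_pixel)
print(rgb_pixel[0, 0].tolist())  # [0, 0, 255]
```

In read_image, a call like this would sit between cv2.imdecode and Image.fromarray.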

The second issue is with the POST method. When I run the server and open http://127.0.0.1:9999/, the GET method runs and prints the following message:

Welcome to classification server

However, when I call the POST method shown below:

    @app.post("/classify/")
    async def classify_image(file: UploadFile = File(...)):
        # return "File Uploaded."
        image_byte = await file.read()
        return classify(image_byte)

and go to http://127.0.0.1:9999/classify/, I receive the error

method not allowed
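
A likely cause of the "method not allowed" response, stated here as a sketch rather than a confirmed diagnosis: entering a URL in the browser's address bar sends a GET request, while the route above is registered only for POST, so the server answers with 405 Method Not Allowed. Uploading the file needs a POST request with a multipart/form-data body. The example below builds such a request with the Python standard library; the helper names build_upload_request and send are hypothetical, and the URL and port are the ones used in this question.

```python
import uuid
import urllib.request


def build_upload_request(url, filename, data, field="file"):
    """Build a multipart/form-data POST request carrying one file."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    headers = {"Content-Type": f"multipart/form-data; boundary={boundary}"}
    # method="POST" is the important part: the browser address bar sends GET.
    return urllib.request.Request(
        url, data=head + data + tail, headers=headers, method="POST"
    )


def send(req):
    """Send the request and return (status, body); needs the server running."""
    with urllib.request.urlopen(req) as resp:
        return resp.status, resp.read().decode()


req = build_upload_request("http://127.0.0.1:9999/classify/", "model_2_0.jpg", b"...")
print(req.get_method())  # POST
```

With the server running, passing the real bytes of the image file to build_upload_request and then calling send(req) would post it. FastAPI's interactive page at /docs also provides an upload form for POST routes.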

Why is this happening, and what can be done to fix it? The full code is listed below. If there are any errors I am missing, please let me know. I am new to FastAPI and quite confused about this.

    from fastapi import FastAPI, UploadFile, File
    import uvicorn
    import torch
    import torchvision
    from torchvision import transforms as T
    from PIL import Image
    from build_effnet import build_model
    import torch.nn.functional as F
    import io
    from io import BytesIO
    import numpy as np
    import cv2

    app = FastAPI()

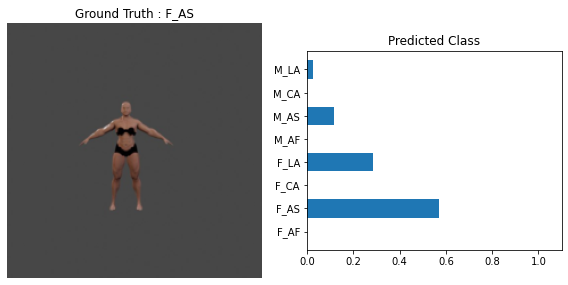

    class_name = ['F_AF', 'F_AS', 'F_CA', 'F_LA', 'M_AF', 'M_AS', 'M_CA', 'M_LA']
    idx_to_class = {i: j for i, j in enumerate(class_name)}
    class_to_idx = {value: key for key, value in idx_to_class.items()}

    test_transform = T.Compose([
        # T.Resize(size=(224, 224)),  # resize the image to 224 x 224
        # T.RandomRotation(degrees=(-20, +20)),  # not needed for validation
        T.ToTensor(),  # converts (height, width, channel) to PyTorch's (channel, height, width) layout
        T.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),  # ImageNet per-channel means and standard deviations
    ])

    # Load model
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model = build_model()
    model.load_state_dict(torch.load('CharacterClass_effnet_SGD.pt', map_location='cpu'))
    model.eval()
    model.to(device)

    def read_image(payload):
        stream = BytesIO(payload)
        image = np.asarray(bytearray(stream.read()), dtype="uint8")
        image = cv2.imdecode(image, cv2.IMREAD_COLOR)
        if isinstance(image, np.ndarray):
            img = Image.fromarray(image)
        return img

    def classify(payload):
        img = read_image(payload)
        img = test_transform(img)
        with torch.no_grad():
            ps = model(img.unsqueeze(0))
            ps = F.softmax(ps, dim=1)
            topk, topclass = ps.topk(1, dim=1)
            x = topclass.view(-1).cpu().view()
        return idx_to_class[x[0]]

    @app.get("/")
    def get():
        return "Welcome to classification server."

    @app.post("/classify/")
    async def classify_image(file: UploadFile = File(...)):
        # return "File Uploaded."
        image_byte = await file.read()
        return classify(image_byte)

Although I am not able to upload an image to the server, when I use curl -F "file=@model_2_0.jpg" 127.0.0.1:9999/classify to pass an image,

I end up receiving an internal server error, which is caused by the line below:

    x = topclass.view(-1).cpu().view()

I think this is because the model is unable to read the image, so the prediction comes out as {}.
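
The failing line can be reproduced without the model. Tensor.view() requires a target shape or dtype, so calling it with no arguments raises an error regardless of what the image contains. A tensor is also not a usable key for idx_to_class, because the dictionary keys are plain Python ints. The sketch below reads the index with .item() instead; the logits are made up and stand in for the model output, and the class list is shortened for illustration.

```python
import torch
import torch.nn.functional as F

class_name = ['F_AF', 'F_AS', 'F_CA']  # shortened list, for illustration only
idx_to_class = dict(enumerate(class_name))

# Made-up logits for one image, standing in for model(img.unsqueeze(0)).
logits = torch.tensor([[0.1, 2.0, 0.3]])

with torch.no_grad():
    ps = F.softmax(logits, dim=1)
    topk, topclass = ps.topk(1, dim=1)

# .item() converts the single-element tensor to a plain Python int,
# which can be used as a dictionary key.
idx = topclass.item()
label = idx_to_class[idx]
print(label)  # F_AS
```

If the model ends up on the GPU, the input tensor would presumably also need to be moved there with img.to(device) before the forward pass.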

Any help with the steps I can take to correct this code and get correct predictions would be really appreciated.

Side note: this is my first time working on the deployment side and with FastAPI, so I am a beginner who is confused by the server-client and API terminology.