I have built a network to classify people's emotions using the CAER dataset.

For the model I am using a ResNet architecture (not pretrained), and I am trying to save the feature maps from the last convolutional layer of the last block.

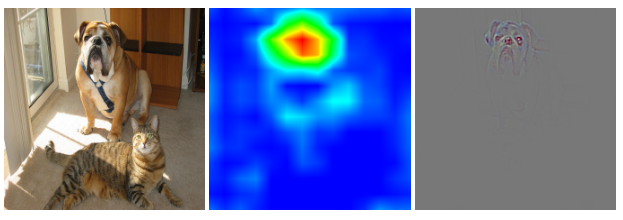

I am able to save the maps, but when I view them they are quite difficult to interpret. I am not sure whether this is expected or whether I am doing something wrong in capturing or saving them.

Below are the code and the feature maps.

Visualization code:

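    import os

    import torch
    import torchvision
    from torch.utils.tensorboard import SummaryWriter

    writer = SummaryWriter('runs/caer')


    class LayerActivations():
        """Stores the output of layer4[layer_num].conv2 via a forward hook."""
        features = None

        def __init__(self, model, layer_num):
            self.hook = model.layer4[layer_num].conv2.register_forward_hook(self.hook_fn)

        def hook_fn(self, module, input, output):
            self.features = output.detach()

        def remove(self):
            self.hook.remove()


    def imshow_image(images):
        # Undo the per-channel normalization. The statistics are reshaped so they
        # broadcast over channels (the original loop zipped them over the batch),
        # and the batch is cloned so the tensors fed to the model stay unchanged.
        mean = torch.tensor([0.370, 0.225, 0.189]).view(1, 3, 1, 1)
        std = torch.tensor([0.172, 0.130, 0.122]).view(1, 3, 1, 1)
        images = images.clone().mul_(std).add_(mean)
        writer.add_images('Image_for_activation', images)
        writer.close()


    def load_checkpoint(filepath):
        checkpoint = torch.load(filepath)
        model = checkpoint['model']
        model.load_state_dict(checkpoint['state_dict'])
        model.eval()
        return model


    def show_activation(train_loader, dev):
        dataiter = iter(train_loader)
        images, labels = next(dataiter)  # iterators no longer expose .next()
        imshow_image(images[0:2])

        model = load_checkpoint('caer_model.pth').to(dev)
        features = model.module  # the checkpointed model is wrapped, e.g. in DataParallel
        conv_out = LayerActivations(features, 1)
        with torch.no_grad():
            out_pred = model(images[0:2].to(dev))
        conv_out.remove()

        act = conv_out.features
        act = torch.nn.Upsample(size=(224, 224), mode='bilinear', align_corners=False)(act)

        # Save every channel of the first image as its own file.
        os.makedirs('./features', exist_ok=True)
        for i in range(act.shape[1]):
            filename = './features/feature_' + str(i) + '.jpg'
            img = act[0][i]
            torchvision.utils.save_image(img, filename)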
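One thing that may be worth checking is the value range of the saved maps. `torchvision.utils.save_image` clamps values to [0, 1] unless `normalize=True` is passed, and raw convolution outputs can be negative or much larger than 1, so unscaled maps can come out mostly black or white. Below is a minimal sketch of min-max scaling each channel before saving. The helper name `normalize_feature_maps` and the random stand-in tensor are hypothetical and are not part of the code above.

```python
import torch


def normalize_feature_maps(act: torch.Tensor, eps: float = 1e-8) -> torch.Tensor:
    """Min-max scale each channel of a (C, H, W) activation tensor to [0, 1]."""
    flat = act.reshape(act.shape[0], -1)
    lo = flat.min(dim=1).values.view(-1, 1, 1)  # per-channel minimum
    hi = flat.max(dim=1).values.view(-1, 1, 1)  # per-channel maximum
    return (act - lo) / (hi - lo + eps)          # eps guards against constant maps


# Hypothetical stand-in for `act[0]`: 512 channels of 7x7 activations.
torch.manual_seed(0)
fake_act = torch.randn(512, 7, 7) * 5.0
scaled = normalize_feature_maps(fake_act)
```

With maps scaled this way, something like `torchvision.utils.save_image(scaled.unsqueeze(1), 'grid.jpg', nrow=8)` should write all channels as a single grid, which is where `nrow` actually takes effect. It may also help to scale the maps at their native resolution and look at them before upsampling, since a small map stretched to 224x224 will look blurry regardless.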

Feature maps:

Note: I am only able to post one feature map.