Hello.

I’m trying to train a model in which I merge the outputs from three different branches. However, after the first iteration the classification loss stays exactly the same, as if the weights were not being updated.

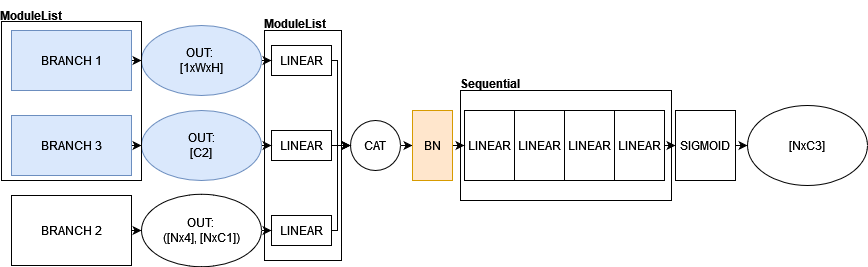

Below is a general idea of what I’ve made:

The blue branches are frozen; the non-frozen branch is a RetinaNet. The output of each branch is passed through a Linear layer to standardize the number of channels, then I concatenate the outputs and pass them through a linear head to get my desired output.
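For readers who want something concrete to poke at, here is a minimal sketch of the layout described above. It is not the actual model: the class name `MergedHead`, every layer size, and the use of plain `nn.Linear` layers as stand-ins for the two frozen branches and the RetinaNet are all assumptions made for illustration.

```python
import torch
import torch.nn as nn


class MergedHead(nn.Module):
    """Toy stand-in for the three-branch model (all sizes are placeholders)."""

    def __init__(self, in_dims=(32, 48, 64), common_dim=16, num_classes=5):
        super().__init__()
        # Plain Linear layers stand in for the two frozen branches and the RetinaNet.
        self.frozen_a = nn.Linear(8, in_dims[0])
        self.frozen_b = nn.Linear(8, in_dims[1])
        self.trainable = nn.Linear(8, in_dims[2])
        # Freeze the two "blue" branches so they receive no gradient updates.
        for branch in (self.frozen_a, self.frozen_b):
            for p in branch.parameters():
                p.requires_grad_(False)
        # One Linear per branch brings every output to the same channel count.
        self.proj = nn.ModuleList([nn.Linear(d, common_dim) for d in in_dims])
        # Linear head applied to the concatenated features.
        self.head = nn.Linear(3 * common_dim, num_classes)

    def forward(self, x):
        feats = [self.frozen_a(x), self.frozen_b(x), self.trainable(x)]
        feats = [proj(f) for proj, f in zip(self.proj, feats)]
        return self.head(torch.cat(feats, dim=-1))


model = MergedHead()
logits = model(torch.randn(4, 8))
print(logits.shape)
```

Listing `[n for n, p in model.named_parameters() if p.requires_grad]` on a model like this is a quick way to confirm that only the intended parameters are trainable.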

When I say “concatenate”, I mean using Tensor.repeat() and Tensor.reshape() where necessary so that every output has the same shape before joining them. The regression output from the RetinaNet is learning correctly; only the classification loss never changes. Any help will be appreciated.
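A minimal sketch of that repeat/reshape step, followed by a gradient check after one backward pass. The shapes, the variable names, and the cross-entropy loss are hypothetical and only illustrate the idea; they are not taken from the actual code. A zero or missing gradient on the classification path would be consistent with the symptom described.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical shapes: one global feature vector per image from a frozen branch,
# and per-anchor features from the trainable branch.
batch, anchors, dim, num_classes = 2, 6, 16, 5
global_feat = torch.randn(batch, dim)  # frozen branch output (no grad)
anchor_feat = torch.randn(batch, anchors, dim, requires_grad=True)  # trainable branch

# Repeat the global vector once per anchor so both tensors are (batch, anchors, dim).
tiled = global_feat.repeat(1, anchors).reshape(batch, anchors, dim)
merged = torch.cat([tiled, anchor_feat], dim=-1)

# Linear head on the merged features, with a placeholder classification loss.
head = nn.Linear(2 * dim, num_classes)
targets = torch.randint(0, num_classes, (batch * anchors,))
loss = nn.functional.cross_entropy(head(merged).reshape(-1, num_classes), targets)
loss.backward()

# Collect gradient norms; anything at zero (or None) is not being trained.
grad_norms = {name: p.grad.norm().item() for name, p in head.named_parameters()}
grad_norms["anchor_feat"] = anchor_feat.grad.norm().item()
for name, norm in grad_norms.items():
    print(f"{name}: grad norm = {norm:.4f}")
```

Running the same kind of loop over `model.named_parameters()` in the real model, right after `loss.backward()`, shows which layers on the classification path actually receive gradients.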

P.S.: BN is highlighted because I’ve tried both with and without it, with no change in the loss.