I am not able to run my code completely on the GPU (GPU only)

I am building my own classifier model, and the code is running on both the GPU and the CPU together.

import os
import glob
import pathlib

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
from torch.autograd import Variable
from torch.utils.data import DataLoader
from torchvision.transforms import transforms

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

print('Using device',device)

> Using device cuda

transformer = transforms.Compose([
    transforms.Resize((256, 256)),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize([0.485, 0.456, 0.406],
                         [0.229, 0.224, 0.225])
])

train_path = 'D:/Anaconda Environment/Object Detection Pytorch/Classification/data4/train'

test_path = 'D:/Anaconda Environment/Object Detection Pytorch/Classification/data4/val'

train_loader = DataLoader(
    torchvision.datasets.ImageFolder(train_path, transform=transformer),
    batch_size=16, shuffle=True
)

# The test loader reads from test_path (the original passed train_path here)
test_loader = DataLoader(
    torchvision.datasets.ImageFolder(test_path, transform=transformer),
    batch_size=16, shuffle=False  # order does not matter for evaluation
)

root=pathlib.Path(train_path)

classes=sorted([j.name.split('/')[-1] for j in root.iterdir()])

print(classes)

class Net(nn.Module):
    def __init__(self, num_classes):
        super(Net, self).__init__()
        # Shapes are (N, channels, height, width) for a batch of N images,
        # listed in the order the layers are applied in forward().
        # Input: (N, 3, 256, 256)
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=12, kernel_size=3, stride=1, padding=1)
        self.bn1 = nn.BatchNorm2d(num_features=12)
        self.relu1 = nn.ReLU()
        # After conv1/bn1/relu1: (N, 12, 256, 256)
        # One pooling layer, reused three times in forward(); each call halves height and width
        self.pool = nn.MaxPool2d(kernel_size=2)
        # After the first pool: (N, 12, 128, 128)
        self.conv2 = nn.Conv2d(in_channels=12, out_channels=24, kernel_size=3, stride=1, padding=1)
        self.relu2 = nn.ReLU()
        # After conv2/relu2: (N, 24, 128, 128); after the second pool: (N, 24, 64, 64)
        self.conv3 = nn.Conv2d(in_channels=24, out_channels=48, kernel_size=3, stride=1, padding=1)
        self.bn2 = nn.BatchNorm2d(num_features=48)
        self.relu3 = nn.ReLU()
        # After conv3/bn2/relu3: (N, 48, 64, 64)
        self.conv4 = nn.Conv2d(in_channels=48, out_channels=96, kernel_size=3, stride=1, padding=1)
        self.bn3 = nn.BatchNorm2d(num_features=96)
        self.relu4 = nn.ReLU()
        # After conv4/bn3/relu4: (N, 96, 64, 64); after the third pool: (N, 96, 32, 32)
        self.fc = nn.Linear(in_features=32 * 32 * 96, out_features=num_classes)

    def forward(self, input):
        output = self.conv1(input)
        output = self.bn1(output)
        output = self.relu1(output)
        output = self.pool(output)
        output = self.conv2(output)
        output = self.relu2(output)
        output = self.pool(output)
        output = self.conv3(output)
        output = self.bn2(output)
        output = self.relu3(output)
        output = self.conv4(output)
        output = self.bn3(output)
        output = self.relu4(output)
        output = self.pool(output)
        # Flatten (N, 96, 32, 32) to (N, 32*32*96) for the linear layer
        output = output.view(-1, 32 * 32 * 96)
        output = self.fc(output)
        return output
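As a sanity check on the `32*32*96` passed to `nn.Linear`, the spatial size can be traced through the layers in the order `forward` applies them. This is only a sketch in plain Python: `conv_out` and `pool_out` are hypothetical helper names (not PyTorch functions), and they assume the standard output-size formula with dilation 1 and a pooling stride equal to the kernel size.

```python
# Trace the height/width of a 256x256 input through the layer order used in forward().

def conv_out(size, kernel=3, stride=1, padding=1):
    """Height/width after a convolution (standard formula, dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2):
    """Height/width after max pooling whose stride equals the kernel size."""
    return (size - kernel) // kernel + 1


size = 256
size = conv_out(size)  # conv1
size = pool_out(size)  # first pool
size = conv_out(size)  # conv2
size = pool_out(size)  # second pool
size = conv_out(size)  # conv3
size = conv_out(size)  # conv4
size = pool_out(size)  # third pool

final_channels = 96  # out_channels of conv4
flat_features = final_channels * size * size
print(size, flat_features)
```

The 3x3 convolutions with padding 1 keep the size unchanged, so only the three pooling calls shrink it.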

model = Net(num_classes=7).to(device)

#Optimizer and Loss Function

optimizer = optim.SGD(model.parameters(),lr=0.001, weight_decay=0.001)

loss_function=nn.CrossEntropyLoss()

num_epochs = 25

#calculating the size of training and testing images

train_count=len(glob.glob(train_path+'/**/*.jpg'))

test_count=len(glob.glob(test_path+'/**/*.jpg'))

#Model training and saving best model

best_accuracy=0.0

for epoch in range(num_epochs):
    # Training pass over the training dataset
    model.train()
    train_accuracy = 0.0
    train_loss = 0.0
    for i, (images, labels) in enumerate(train_loader):
        # Move the batch to the same device as the model
        images = images.to(device)
        labels = labels.to(device)
        optimizer.zero_grad()
        outputs = model(images)
        loss = loss_function(outputs, labels)
        loss.backward()
        optimizer.step()
        # .item() returns the scalar loss as a Python float
        train_loss += loss.item() * images.size(0)
        _, prediction = torch.max(outputs, 1)
        train_accuracy += int(torch.sum(prediction == labels))
    train_accuracy = train_accuracy / train_count
    train_loss = train_loss / train_count

    # Evaluation on the testing dataset
    model.eval()
    test_accuracy = 0.0
    # No gradients are needed for evaluation
    with torch.no_grad():
        for i, (images, labels) in enumerate(test_loader):
            images = images.to(device)
            labels = labels.to(device)
            outputs = model(images)
            _, prediction = torch.max(outputs, 1)
            test_accuracy += int(torch.sum(prediction == labels))
    test_accuracy = test_accuracy / test_count

    print('Epoch: '+str(epoch)+' Train Loss: '+str(train_loss)+' Train Accuracy: '+str(train_accuracy)+' Test Accuracy: '+str(test_accuracy))

    # Save the best model
    if test_accuracy > best_accuracy:
        torch.save(model.state_dict(), 'best_checkpoint.model')
        best_accuracy = test_accuracy

This is the code that I am working on.

Can anyone help me with this?

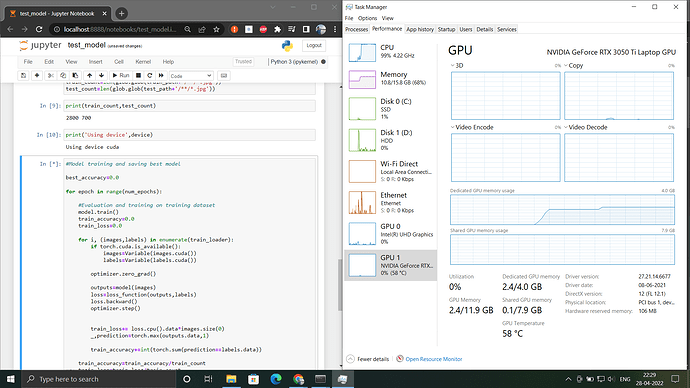

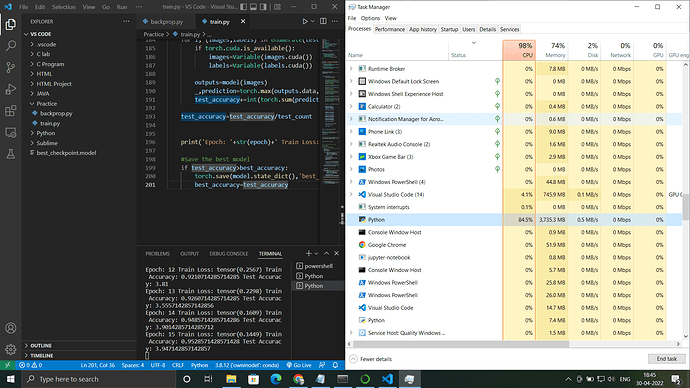

You can see in the screenshots that both my CPU and GPU are being utilized while the program runs.
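To narrow this down, one thing that can be checked is where the model's tensors actually live. The following is a minimal sketch, not part of the script above: `parameter_devices` is a hypothetical helper name, and the small `stand_in` model is an assumption used in place of `Net` so that the snippet runs on its own.

```python
import torch
import torch.nn as nn


def parameter_devices(model):
    """Return the set of device names holding the model's parameters and buffers."""
    tensors = list(model.parameters()) + list(model.buffers())
    return {str(t.device) for t in tensors}


device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Small stand-in model (assumption); the same call works on any nn.Module
stand_in = nn.Sequential(
    nn.Conv2d(3, 12, kernel_size=3, padding=1),
    nn.BatchNorm2d(12),
).to(device)
print(parameter_devices(stand_in))

# A batch moved with .to(device) produces outputs on that same device
batch = torch.zeros(2, 3, 8, 8).to(device)
print(stand_in(batch).device)
```

If the printed set contains a single device, every parameter and buffer is on it. This only reports where tensors live, not where the data loading work happens.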