Hey,

I’m working with PyTorch v0.4.1 and was playing around with a toy example for Dropout when I came across this behaviour, which I could not explain:

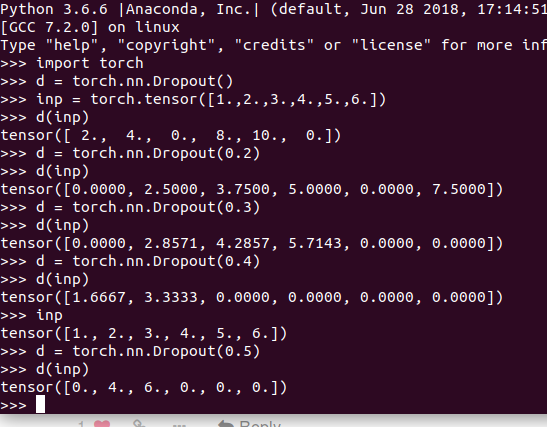

>>> import torch
>>> d = torch.nn.Dropout()
>>> inp = torch.tensor([1.,2.,3.,4.,5.,6.])
>>> d(inp)
tensor([ 2., 4., 0., 0., 0., 12.])

Shouldn’t Dropout() simply (and only) zero out 50% of the tensor values? Or am I misreading something? Why are the remaining tensor values getting doubled?

Thanks for the help!

@Nilesh_Pandey I’m unsure what this reply is supposed to mean.

Since dropout behaves differently during training and testing, you have to scale the activations at some point.

Imagine a very simple model with two linear layers of size 10 and 1, respectively.

If you don’t use dropout, and all activations are approx. 1 (with weights of 1, for simplicity), your expected value in the output layer would be 10.

Now using dropout with p=0.5, we will lose half of these activations, so that during training our expected value would be 5. As we deactivate dropout during test, the output will have the original expected value of 10 again. The model never saw values on that scale during training, so it will most likely output a lot of garbage.
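To make the arithmetic concrete, here is a minimal plain-Python sketch of that toy setup. It assumes the output layer simply sums its ten inputs (all weights equal to 1), and `dropout_no_scaling` is a hypothetical helper written for this illustration, not a PyTorch function.

```python
import random

def dropout_no_scaling(activations, p, rng):
    """Zero each activation with probability p; survivors are left unchanged."""
    return [0.0 if rng.random() < p else a for a in activations]

rng = random.Random(0)        # fixed seed so the run is repeatable
activations = [1.0] * 10      # ten hidden activations, all approx. 1
trials = 20_000

# Assumption: the output layer just sums its inputs (all weights are 1).
train_mean = sum(
    sum(dropout_no_scaling(activations, 0.5, rng)) for _ in range(trials)
) / trials
test_sum = sum(activations)   # dropout disabled at test time

print(f"mean output with unscaled dropout: {train_mean:.2f}")  # close to 5
print(f"output without dropout:            {test_sum:.2f}")    # 10.00
```

The gap between the two numbers is the train/test mismatch that the scaling is meant to remove.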

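One way to tackle this issue is to scale down the activations during test by multiplying them by the keep probability 1 - p. (The dropout paper defines p as the keep probability, while in PyTorch p is the drop probability; with p=0.5 both are the same.)

Since we prefer to have as little work as possible during test, we can instead scale the activations during training by 1/(1 - p). For the default p=0.5 that is a factor of 2, which is exactly what you observe.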
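A rough sketch of this "scale during training" variant in plain Python, using the input from the question. `inverted_dropout` is a hypothetical helper for illustration only and assumes p < 1; it is not how `torch.nn.Dropout` is implemented internally, although the PyTorch docs describe the same 1/(1 - p) scaling.

```python
import random

def inverted_dropout(activations, p, training, rng):
    """Drop each value with probability p and scale survivors by 1/(1 - p).

    Assumes 0 <= p < 1. In eval mode the input is returned unchanged.
    """
    if not training or p == 0.0:
        return list(activations)
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else a * scale for a in activations]

rng = random.Random(0)        # fixed seed so the run is repeatable
inp = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

train_out = inverted_dropout(inp, 0.5, True, rng)   # zeros and doubled values
eval_out = inverted_dropout(inp, 0.5, False, rng)   # identical to inp

# Averaged over many masks, the training-time sum matches the eval-time sum.
trials = 20_000
mean_sum = sum(
    sum(inverted_dropout(inp, 0.5, True, rng)) for _ in range(trials)
) / trials

print(train_out)
print(eval_out)
print(f"mean training sum: {mean_sum:.2f}, eval sum: {sum(eval_out):.2f}")
```

Every surviving entry is exactly twice its input, as in the output from the question, and the expected sum stays the same in both modes, so nothing extra has to happen at test time.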

Interesting.

Thanks a lot for the reply. It does make sense.

Is this behaviour documented somewhere in the docs? If not, should it be? Or is it the general behaviour of dropout itself?

If needed, I can help with that.

It’s the general behavior of dropout and mentioned in the paper.

Maybe a short note on the scaling in the docs would help with the understanding.
