Hi everyone,

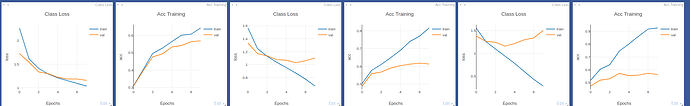

I’m new to PyTorch and CNN. I’m trying to figure out which is the effect of the learning rate during the training phase on my net. I trained the same model for 8 epochs, initialized in the same way, using three different lr: 0.01, 0.001, 0.0001. I used Adam as optimizer without changing other parameters (I also tried sgd + momentum but it is even worse). I obtained the following results using a batch size of 32 (the first pair is concerned 0.01, the second one 0.001 and finally 0.0001):

I’m not using any regularization, so the model is clearly overfitting, but the results still seem too strange. In particular, with the lowest learning rate the loss goes down too fast and the model overfits more, which is what I would expect from a higher learning rate.

After adding a weight decay of 0.0001 I obtained similar results. I know I should try other regularization techniques, such as dropout or a larger weight decay, but I’m thinking there’s an implementation problem. Can you help me understand these results?

I’m working with the FER 2013 dataset (48x48x1 images): https://www.kaggle.com/c/challenges-in-representation-learning-facial-expression-recognition-challenge/data

This is my net:

    Net(
      (features): Sequential(
        (0): Conv2d(1, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
        (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (4): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (5): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (6): ReLU(inplace=True)
        (7): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (8): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (9): ReLU(inplace=True)
        (10): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (11): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (12): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (13): ReLU(inplace=True)
        (14): Conv2d(256, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (15): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (16): ReLU(inplace=True)
        (17): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      )
      (classifier): Sequential(
        (0): Linear(in_features=2304, out_features=512, bias=True)
        (1): ReLU(inplace=True)
        (2): Linear(in_features=512, out_features=7, bias=True)
      )
    )
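As a sanity check on the `in_features=2304` of the first linear layer, the spatial size can be traced through the conv and pooling layers listed above. This is only a small arithmetic sketch: the helper `conv_out` is a hypothetical name, and it assumes the standard floor-mode output-size formula with dilation 1, which matches the printed layer settings.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer (floor mode, dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 48  # FER 2013 images are 48x48

# (kernel, stride, padding) of every layer in `features` that touches the spatial size
layers = [
    (3, 1, 1),  # (0) Conv2d
    (2, 2, 0),  # (3) MaxPool2d
    (3, 1, 1),  # (4) Conv2d
    (3, 1, 1),  # (7) Conv2d
    (2, 2, 0),  # (10) MaxPool2d
    (3, 1, 1),  # (11) Conv2d
    (3, 1, 1),  # (14) Conv2d
    (2, 2, 0),  # (17) MaxPool2d
]
for kernel, stride, padding in layers:
    size = conv_out(size, kernel, stride, padding)

out_channels = 64  # output channels of the last conv layer, (14)
flat_features = out_channels * size * size
print(size, flat_features)  # 6 2304
```

The 3x3 convolutions with padding 1 keep the size unchanged and each pooling layer halves it (48, 24, 12, 6), so the flattened feature vector has 64 * 6 * 6 = 2304 entries, consistent with the classifier.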

I initialized an offline model in this way and saved its state, then loaded that state into the three models before training:

    def initialize_weight(self):
        for m in self.modules():
            # Xavier-normal weights and zero biases for conv and linear layers
            if isinstance(m, (nn.Conv2d, nn.Linear)):
                nn.init.xavier_normal_(m.weight)
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            # BatchNorm starts as the identity transform: scale 1, shift 0
            if isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)
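The "same starting point for all three runs" setup described above could look roughly like the sketch below. It is not the code from the post: `make_model` is a small hypothetical stand-in for `Net`, the state is kept in memory with `copy.deepcopy` rather than saved to disk, and the `runs` dictionary is just an illustrative way of holding one model and one Adam optimizer per learning rate.

```python
import copy

import torch
import torch.nn as nn

torch.manual_seed(0)


def make_model():
    # Hypothetical stand-in for the real Net; any nn.Module works the same way.
    return nn.Sequential(
        nn.Conv2d(1, 4, kernel_size=3, padding=1),
        nn.BatchNorm2d(4),
        nn.ReLU(),
        nn.Flatten(),
        nn.Linear(4 * 48 * 48, 7),
    )


# Initialize one reference model and keep a detached copy of its state.
reference = make_model()
initial_state = copy.deepcopy(reference.state_dict())

# Build one model and one optimizer per learning rate, all starting from that state.
runs = {}
for lr in (0.01, 0.001, 0.0001):
    model = make_model()
    model.load_state_dict(initial_state)
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    runs[lr] = (model, optimizer)

# Verify that every run really starts from identical parameters and buffers.
same_start = all(
    torch.equal(current, initial)
    for model, _ in runs.values()
    for current, initial in zip(model.state_dict().values(), initial_state.values())
)
print(same_start)
```

Creating each optimizer only after `load_state_dict` keeps the pairing between model and optimizer obvious, and the final check makes it easy to rule out a difference in initialization when comparing the three runs.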

These are the fit and evaluate methods (I used nn.CrossEntropyLoss() as the criterion):

    def fit(self):
        for epoch in range(self.args.max_epochs):
            self.net.train()
            training_loss = 0.0
            for images, labels in self.train_loader:
                images = images.to(self.device)
                labels = labels.to(self.device)
                self.optimizer.zero_grad()
                output = self.net(images)
                loss = self.criterion(output, labels)
                loss.backward()
                self.optimizer.step()
                # the criterion returns the batch mean, so weight it by the batch size
                training_loss += loss.item() * images.size(0)
            # per-sample average over the whole epoch
            epoch_training_loss = training_loss / len(self.train_data)
            epoch_validation_loss = self.get_validation_loss()
            train_accuracy = self.evaluate(self.train_data)
            val_accuracy = self.evaluate(self.val_data)

    def evaluate(self, data):
        loader = DataLoader(data, batch_size=self.args.bs, num_workers=1, shuffle=False)
        self.net.eval()
        num_correct, num_total = 0, 0
        with torch.no_grad():
            for inputs in loader:
                images = inputs[0].to(self.device)
                labels = inputs[1].to(self.device)
                outputs = self.net(images)
                # predicted class = index of the largest logit
                preds = outputs.argmax(dim=1)
                num_correct += (preds == labels).sum().item()
                num_total += labels.size(0)
        return num_correct / num_total

    def get_validation_loss(self):
        self.net.eval()
        validation_loss = 0.0
        # no gradients are needed for validation
        with torch.no_grad():
            for images, labels in self.val_loader:
                images = images.to(self.device)
                labels = labels.to(self.device)
                output = self.net(images)
                loss = self.criterion(output, labels)
                validation_loss += loss.item() * images.size(0)
        return validation_loss / len(self.val_data)
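Both loss accumulators above multiply the batch loss by the batch size before dividing by the dataset length. Since `nn.CrossEntropyLoss()` averages over the batch by default, this recovers the exact per-sample mean even when the last batch is smaller. The toy numbers below are made up purely to illustrate the arithmetic; no model is involved.

```python
# Hypothetical per-sample losses for a dataset of 7 samples, processed with batch size 3.
per_sample = [0.9, 1.1, 1.0, 0.2, 0.4, 0.6, 2.0]
batch_size = 3
batches = [per_sample[i:i + batch_size] for i in range(0, len(per_sample), batch_size)]

weighted_sum = 0.0  # what fit() and get_validation_loss() accumulate
naive_sum = 0.0     # plain sum of batch means, for comparison
for batch in batches:
    batch_mean = sum(batch) / len(batch)  # what a mean-reduced criterion returns
    weighted_sum += batch_mean * len(batch)
    naive_sum += batch_mean

weighted = weighted_sum / len(per_sample)  # divide by the number of samples
naive = naive_sum / len(batches)           # divide by the number of batches
exact = sum(per_sample) / len(per_sample)

print(f"exact={exact:.4f} weighted={weighted:.4f} naive={naive:.4f}")
```

The weighted version matches the exact mean, while averaging the batch means directly over-weights the single sample in the last, smaller batch. So the loss bookkeeping in the methods above is not where a discrepancy between the runs would come from.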

Thank you all!