Hello all,

I’m new to BERT, and I’m trying to wrap my head around its structure.

from transformers import BertTokenizer, BertModel

model = BertModel.from_pretrained('bert-base-uncased')

named_params = list(model.named_parameters())

print(f"{len(named_params)} Paramaters \n\n\n")

for p in named_params:

print("{:<55} {:>12}".format(p[0],str(tuple(p[1].size()))))

The output of this is:

199 Paramaters

embeddings.word_embeddings.weight (30522, 768)

embeddings.position_embeddings.weight (512, 768)

embeddings.token_type_embeddings.weight (2, 768)

embeddings.LayerNorm.weight (768,)

embeddings.LayerNorm.bias (768,)

encoder.layer.0.attention.self.query.weight (768, 768)

encoder.layer.0.attention.self.query.bias (768,)

encoder.layer.0.attention.self.key.weight (768, 768)

encoder.layer.0.attention.self.key.bias (768,)

encoder.layer.0.attention.self.value.weight (768, 768)

encoder.layer.0.attention.self.value.bias (768,)

encoder.layer.0.attention.output.dense.weight (768, 768)

encoder.layer.0.attention.output.dense.bias (768,)

encoder.layer.0.attention.output.LayerNorm.weight (768,)

encoder.layer.0.attention.output.LayerNorm.bias (768,)

encoder.layer.0.intermediate.dense.weight (3072, 768)

encoder.layer.0.intermediate.dense.bias (3072,)

encoder.layer.0.output.dense.weight (768, 3072)

encoder.layer.0.output.dense.bias (768,)

encoder.layer.0.output.LayerNorm.weight (768,)

encoder.layer.0.output.LayerNorm.bias (768,)

…

encoder.layer.11.attention.self.query.weight (768, 768)

encoder.layer.11.attention.self.query.bias (768,)

encoder.layer.11.attention.self.key.weight (768, 768)

encoder.layer.11.attention.self.key.bias (768,)

encoder.layer.11.attention.self.value.weight (768, 768)

encoder.layer.11.attention.self.value.bias (768,)

encoder.layer.11.attention.output.dense.weight (768, 768)

encoder.layer.11.attention.output.dense.bias (768,)

encoder.layer.11.attention.output.LayerNorm.weight (768,)

encoder.layer.11.attention.output.LayerNorm.bias (768,)

encoder.layer.11.intermediate.dense.weight (3072, 768)

encoder.layer.11.intermediate.dense.bias (3072,)

encoder.layer.11.output.dense.weight (768, 3072)

encoder.layer.11.output.dense.bias (768,)

encoder.layer.11.output.LayerNorm.weight (768,)

encoder.layer.11.output.LayerNorm.bias (768,)

pooler.dense.weight (768, 768)

pooler.dense.bias (768,)

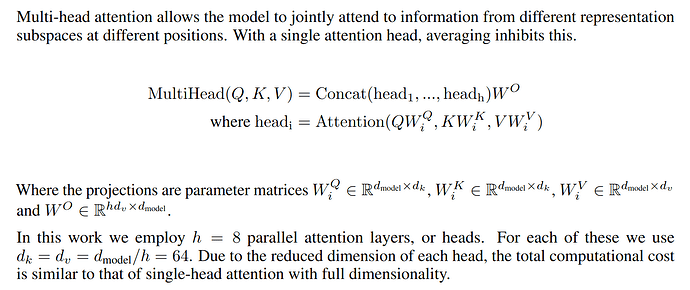

I understand that there are 12 layers (encoder stacks) each containing 12 attention heads, however, I can see that for each layer there’s a single Wq matrix, Wv matrix, and Wk matrix.

Shouldn’t there be 12 of them? What I’m getting wrong here?