First of all, Thx for the replying!

If window size(K) is 1, I would feed first layer sequentialy from timestamp 0 to 99.

And I am trying to build LSTM autoencoder(reconstruction problem), so that output length should be same as input length.

In other words, if I choose window size 1, the output length is 1. If I choose window size K, output length should be K. It’s like many input, many output.

Also, I don’t like to pass all data in at once. (my real data is over a million time series)

And I’d like to know what if I make overlapping windows. (like stride=1 sliding window)

FYI, The reason why I ask about this question is that I tried to conduct the code which is the answer of the link in my original question.

The link shows LSTM autoencoder with feature=1, batch size=1, and sequence length=5.

I only changed the feature=3. Here is the code I tested.

import torch

import torch.nn as nn

import torch.optim as optim

class LSTM(nn.Module):

# input_dim has to be size after flattening

# For 20x20 single input it would be 400

def __init__(

self,

input_dimensionality: int,

input_dim: int,

latent_dim: int,

num_layers: int,

):

super(LSTM, self).__init__()

self.input_dimensionality: int = input_dimensionality

self.input_dim: int = input_dim # It is 1d, remember

self.latent_dim: int = latent_dim

self.num_layers: int = num_layers

self.encoder = torch.nn.LSTM(self.input_dim, self.latent_dim, self.num_layers)

# You can have any latent dim you want, just output has to be exact same size as input

# In this case, only encoder and decoder, it has to be input_dim though

self.decoder = torch.nn.LSTM(self.latent_dim, self.input_dim, self.num_layers)

def forward(self, input):

# Save original size first:

original_shape = input.shape

# Flatten 2d (or 3d or however many you specified in constructor)

input = input.reshape(input.shape[: -self.input_dimensionality] + (-1,))

# Rest goes as in my previous answer

_, (last_hidden, _) = self.encoder(input)

print(last_hidden.size())

encoded = last_hidden.repeat(input.shape[0], 1, 1)

print(encoded.size())

y, _ = self.decoder(encoded)

print(y.size())

# You have to reshape output to what the original was

reshaped_y = y.reshape(original_shape)

print(torch.squeeze(reshaped_y).size())

return torch.squeeze(reshaped_y)

model = LSTM(input_dimensionality= 1, input_dim=3, latent_dim=20, num_layers=1)

loss_function = nn.MSELoss()

optimizer = optim.Adam(model.parameters())

y = torch.rand(4, 3)

x = y.view(len(y), 1, -1)

print(y)

print(x)

while True:

y_pred = model(x)

optimizer.zero_grad()

loss = loss_function(y_pred, y)

loss.backward()

optimizer.step()

print(y_pred)

print(y)

tensor([[0.3396, 0.4584, 0.4990],

[0.3473, 0.6069, 0.3537],

[0.0018, 0.9779, 0.7858],

[0.5669, 0.7047, 0.7538]])

print(y_pred)

tensor([[0.3681, 0.4199, 0.5355],

[0.3092, 0.6824, 0.3382],

[0.0140, 0.8855, 0.7361],

[0.5692, 0.7194, 0.7820]], grad_fn=<SqueezeBackward0>)

You can see the results are pretty good after several epochs.

Plus, I changed batch size=2 from the above code.(input shape = (4, 2, 3)).

The results are also good.

print(y)

tensor([[[0.1876, 0.2843, 0.4372],

[0.2946, 0.3854, 0.6544]],

[[0.7341, 0.2434, 0.2316],

[0.9146, 0.9394, 0.1762]],

[[0.7286, 0.0147, 0.5048],

[0.0909, 0.4264, 0.3136]],

[[0.3353, 0.7569, 0.5305],

[0.2900, 0.4721, 0.9971]]])

print(y_pred)

tensor([[[0.1760, 0.1595, 0.4767],

[0.3121, 0.3768, 0.7416]],

[[0.7191, 0.0896, 0.3143],

[0.8565, 0.9248, 0.1792]],

[[0.7516, 0.2602, 0.4180],

[0.0731, 0.4455, 0.3333]],

[[0.3654, 0.6303, 0.5079],

[0.2830, 0.4681, 0.8621]]], grad_fn=<ReshapeAliasBackward0>)

The issue I had is that I don’t like the order so I changed it with using batch_first argument.

This is why I ask about the input dimensions. If I can make my data into these shape and order somehow, game is over. However, I don’t understand fully so I just changed the order.

Whenever I change the batch size > 1 and change the default order, the results tend to be the average.

class LSTM(nn.Module):

# input_dim has to be size after flattening

# For 20x20 single input it would be 400

def __init__(

self,

input_dimensionality: int,

input_dim: int,

latent_dim: int,

num_layers: int,

):

super(LSTM, self).__init__()

self.input_dimensionality: int = input_dimensionality

self.input_dim: int = input_dim # It is 1d, remember

self.latent_dim: int = latent_dim

self.num_layers: int = num_layers

self.encoder = torch.nn.LSTM(self.input_dim, self.latent_dim, self.num_layers, batch_first=True)

# You can have any latent dim you want, just output has to be exact same size as input

# In this case, only encoder and decoder, it has to be input_dim though

self.decoder = torch.nn.LSTM(self.latent_dim, self.input_dim, self.num_layers, batch_first=True)

def forward(self, input):

# Save original size first:

original_shape = input.shape

# Flatten 2d (or 3d or however many you specified in constructor)

input = input.reshape(input.shape[: -self.input_dimensionality] + (-1,))

# Rest goes as in my previous answer

_, (last_hidden, _) = self.encoder(input)

print(last_hidden.size())

encoded = last_hidden.repeat(input.shape[1], 1, 1)

print(encoded.size())

y, _ = self.decoder(encoded)

print(y.size())

# You have to reshape output to what the original was

reshaped_y = y.reshape(original_shape)

return reshaped_y

# print(torch.squeeze(reshaped_y).size())

# return torch.squeeze(reshaped_y)

model = LSTM(input_dimensionality= 1, input_dim=3, latent_dim=20, num_layers=1)

loss_function = nn.MSELoss()

optimizer = optim.Adam(model.parameters())

y = torch.rand(2, 4, 3)

x = y

print(y)

while True:

y_pred = model(x)

optimizer.zero_grad()

loss = loss_function(y_pred, y)

loss.backward()

optimizer.step()

print(y_pred)

Here is the result.

print(y)

tensor([[[0.5823, 0.5620, 0.2612],

[0.2795, 0.0694, 0.2277],

[0.5405, 0.3568, 0.6255],

[0.3243, 0.2372, 0.3427]],

[[0.7686, 0.5203, 0.9025],

[0.7947, 0.8480, 0.8990],

[0.7740, 0.1220, 0.5202],

[0.4073, 0.6483, 0.2592]]])

print(y_pred)

tensor([[[0.6665, 0.3905, 0.5777],

[0.4519, 0.4510, 0.4332],

[0.6665, 0.3905, 0.5777],

[0.4519, 0.4510, 0.4332]],

[[0.6665, 0.3905, 0.5777],

[0.4519, 0.4510, 0.4332],

[0.6665, 0.3905, 0.5777],

[0.4519, 0.4510, 0.4332]]], grad_fn=<UnsafeViewBackward0>)

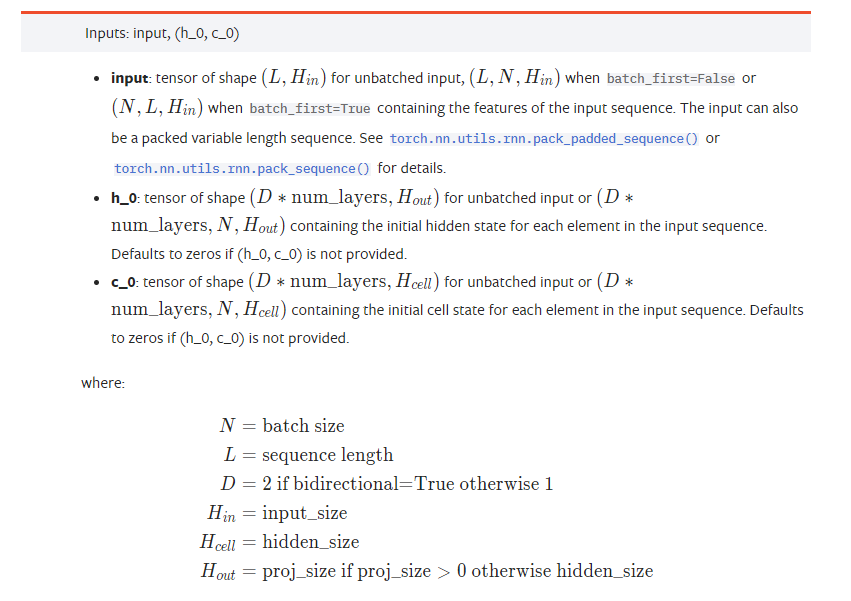

I really need to figure out how should I make my data into (sequence length, batch size, number of features) or (batch size, sequence length, number of features)

Thx again!!