Hi, I’m trying to understand whether there’s a problem with my training procedure for a transformer encoder.

Basically, I’m training a transformer encoder for classification on a synthetic dataset of shape [batch_size, vocab_size, dim], generated on the fly, where vocab_size varies.

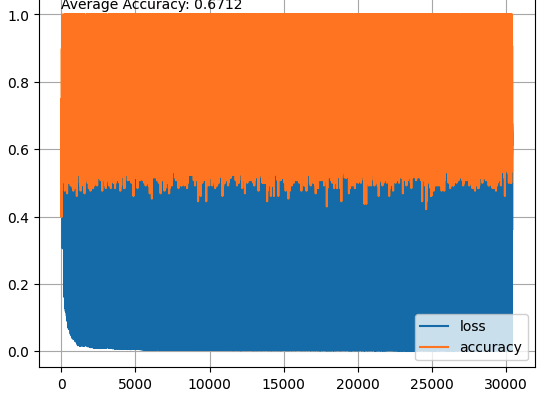

During training I’ve observed that the loss and accuracy oscillate a lot. They improve, but occasionally the model hits a hard example, performance drops, and it takes a long time to recover.

When I plot the training loss and accuracy, I get plots like the following. Since I don’t know much about NLP and transformers, I was wondering whether this is normal or an indication that something is going wrong.