Documentation. Precision — PyTorch-Metrics 1.3.0.post0 documentation

    import torch
    from torchmetrics import Precision
    from torchmetrics.classification import BinaryPrecision

    preds = torch.tensor([0, 0, 0, 0])
    target = torch.tensor([0, 0, 0, 0])

    # Assumption: torchmetrics 1.x requires `task`; "multiclass" is a guess
    # that matches the tensor(1.) reported below.
    general_precision = Precision(task="multiclass", num_classes=2)
    bin_precision = BinaryPrecision()

    # Shouldn't both give the same result?
    general_precision(preds, target)
    # tensor(1.)
    bin_precision(preds, target)
    # tensor(0.)

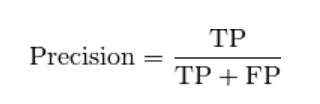

From the equation, and given these `preds` and `target`, there are no true positives, so the numerator is 0 and the answer should be 0. That matches `BinaryPrecision`, but how does the general `Precision` calculate its result of 1?

[ The same goes for F1Score vs. BinaryF1Score and Recall vs. BinaryRecall. ]
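
To make the arithmetic concrete, here is a minimal plain-Python sketch of the equation TP / (TP + FP) applied to the same `preds` and `target`. It is not the torchmetrics implementation. The helper names `precision_counts` and `safe_precision` are hypothetical, and two things are assumed: that a 0/0 division is reported as 0, and that the general metric may be micro-averaging over both classes rather than treating only class 1 as positive.

```python
def precision_counts(preds, target, positive):
    """Return (TP, FP) when `positive` is treated as the positive class."""
    tp = sum(1 for p, t in zip(preds, target) if p == positive and t == positive)
    fp = sum(1 for p, t in zip(preds, target) if p == positive and t != positive)
    return tp, fp


def safe_precision(tp, fp):
    # Assumption: report 0.0 when TP + FP == 0 instead of raising an error.
    return tp / (tp + fp) if (tp + fp) > 0 else 0.0


preds = [0, 0, 0, 0]
target = [0, 0, 0, 0]

# Binary view: only class 1 counts as positive, so TP = 0 and FP = 0.
tp1, fp1 = precision_counts(preds, target, positive=1)
binary_precision = safe_precision(tp1, fp1)

# Micro-averaged view (assumed): sum TP and FP over every class, then divide.
counts = [precision_counts(preds, target, positive=c) for c in (0, 1)]
micro_tp = sum(tp for tp, _ in counts)
micro_fp = sum(fp for _, fp in counts)
micro_precision = safe_precision(micro_tp, micro_fp)

print(binary_precision, micro_precision)
```

Under these assumptions the binary view gives 0 (no prediction of class 1 at all), while the micro-averaged view counts the four correct class-0 predictions as true positives and gives 1. Whether that is what the general `Precision` actually does here is exactly the open question.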