Hello,

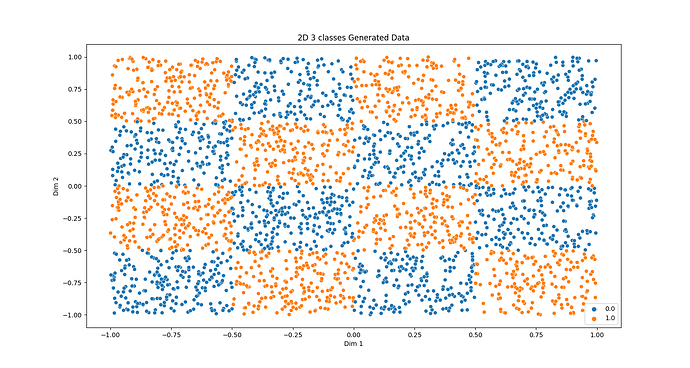

I am trying to classify a generated two-class checkerboard dataset (see the plot) with a simple fully connected network that has a few hidden layers. I notice that less than 25% of the GPU is used during training. Could you please advise on what could be causing that? I have attached the code below. I also ran the code with `torch.utils.bottleneck` and have attached the profiler output in case it helps.

Thanks in advance!

Checkerboard Data
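For reference, the labels in the plot come from `classifier2` in the code below. `np.ceil(2 * X)` maps each coordinate in (-1, 1) to one of the integer cells -1, 0, 1 or 2, which divides the square into a 4x4 grid, and the parity of the sum of the two cell indices gives the class. A small standalone check on a few arbitrary, made-up points:

```python
import numpy as np


def classifier2(X):
    # Same labeling rule as in the script: parity of the summed cell indices.
    return (np.sum(np.ceil(2 * X).astype(int), axis=1) % 2).astype(float)


# Hand-picked points in (-1, 1) x (-1, 1); comments show each point's cell indices.
points = np.array([
    [0.1, 0.1],    # cells (1, 1)   -> sum 2  -> class 0
    [0.6, 0.1],    # cells (2, 1)   -> sum 3  -> class 1
    [-0.4, 0.1],   # cells (0, 1)   -> sum 1  -> class 1
    [-0.9, -0.9],  # cells (-1, -1) -> sum -2 -> class 0
])
print(classifier2(points))  # [0. 1. 1. 0.]
```

Neighbouring cells always differ by one in exactly one index, so their sums have opposite parity and adjacent squares get opposite labels.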

Code

```python
import os
from collections import OrderedDict
from math import sqrt, ceil

import matplotlib.pyplot as plt
import numpy as np
import seaborn as sns
import torch
import torch.cuda as cuda
import torch.nn as nn
import torch.optim as optim
from sklearn.model_selection import train_test_split

device = torch.device("cuda:0" if cuda.is_available() else "cpu")
print('device', device)


def plot_data(X, y, num_classes, save_as):
    f, ax = plt.subplots(nrows=1, ncols=1, figsize=(15, 8))
    colors = ['tab:blue', 'tab:orange', 'tab:green', 'tab:purple'][0:num_classes]
    sns.scatterplot(x=X[:, 0], y=X[:, 1], hue=y, palette=colors, ax=ax)
    ax.set_title("2D %d classes Generated Data" % num_classes)
    plt.ylabel('Dim 2')
    plt.xlabel('Dim 1')
    os.makedirs(os.path.dirname(save_as), exist_ok=True)  # savefig fails if the folder is missing
    plt.savefig(save_as)
    # plt.show()
    plt.clf()
    plt.close()


def generate_data(dataset, size):
    num_classes = 2
    # Uniform random points in [-1, 1) x [-1, 1)
    X = 2 * np.random.random((size, 2)) - 1

    def classifier2(X):  # a 4x4 checkerboard pattern -- you can use the same method to make up your own checkerboard patterns
        return (np.sum(np.ceil(2 * X).astype(int), axis=1) % 2).astype(float)

    y = classifier2(X)
    plot_data(X, y, num_classes, 'figures/all/' + dataset + '_' + str(num_classes) + '_.png')

    x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42, stratify=y)
    print(len(y_train))
    print(sum(y_train))
    print(len(y_test))
    print(sum(y_test))

    # Create trainloader
    batchsize = 32
    trainset = torch.utils.data.TensorDataset(torch.tensor(x_train, dtype=torch.float32),
                                              torch.tensor(y_train, dtype=torch.long))
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=batchsize,
                                              shuffle=True, num_workers=0, pin_memory=True)
    testset = torch.utils.data.TensorDataset(torch.tensor(x_test, dtype=torch.float32),
                                             torch.tensor(y_test, dtype=torch.long))
    # The whole test split is one batch, so shuffling it has no effect.
    testloader = torch.utils.data.DataLoader(testset, batch_size=len(testset),
                                             shuffle=False, num_workers=0, pin_memory=True)

    return X, y, trainset, trainloader, testset, testloader, num_classes


class single_model_deep(nn.Module):
    def __init__(self, parameters, num_experts, num_classes, bias=None):
        super(single_model_deep, self).__init__()
        output = (sqrt(6 * parameters + 9) / 3 - 1) / (num_experts + 1)
        output = ceil(output)
        if output <= 0:
            output = 1
        print('parameters', parameters, 'num_experts', num_experts + 1, 'output', output)

        hidden = (num_experts + 1) * output
        self.model = nn.Sequential(OrderedDict({
            'linear_1': nn.Linear(2, hidden),
            'relu_1': nn.ReLU(),
            'linear_2': nn.Linear(hidden, hidden),
            'relu_2': nn.ReLU(),
            'linear_3': nn.Linear(hidden, (num_experts + 1) * (output // 2)),
            'relu_3': nn.ReLU(),
            # Output raw logits: nn.CrossEntropyLoss applies log-softmax itself,
            # so an extra Softmax layer here would distort the loss.
            'linear_4': nn.Linear((num_experts + 1) * (output // 2), num_classes),
        }))

        if bias is not None:
            with torch.no_grad():
                for layer in self.model.children():
                    if isinstance(layer, nn.Linear):
                        layer.bias.fill_(bias)

        self.model = self.model.to(device)

    def forward(self, input):
        return self.model(input)

    # Named `fit` rather than `train`: overriding nn.Module.train(mode)
    # would break model.train() / model.eval().
    def fit(self, trainloader, testloader, optimizer, loss_criterion, accuracy, epochs):
        history = {'loss': [], 'accuracy': [], 'val_accuracy': []}
        for epoch in range(epochs):
            self.train()  # nn.Module.train: switch to training mode
            running_loss = 0.0
            training_accuracy = 0.0
            test_accuracy = 0.0
            i = 0
            for inputs, labels in trainloader:
                # get the inputs; data is a list of [inputs, labels]
                inputs, labels = inputs.to(device, non_blocking=True), labels.to(device, non_blocking=True)
                # zero the parameter gradients
                optimizer.zero_grad()
                # forward + backward + optimize
                outputs = self(inputs)
                loss = loss_criterion(outputs, labels)
                loss.backward()
                optimizer.step()
                running_loss += loss.item()
                training_accuracy += accuracy(outputs, labels).item()
                i += 1

            # Evaluation needs no autograd graph.
            self.eval()
            j = 0
            with torch.no_grad():
                for test_input, test_labels in testloader:
                    test_input, test_labels = test_input.to(device), test_labels.to(device)
                    test_outputs = self(test_input)
                    test_accuracy += accuracy(test_outputs, test_labels).item()
                    j += 1

            history['loss'].append(running_loss / i)
            history['accuracy'].append(training_accuracy / i)
            history['val_accuracy'].append(test_accuracy / j)
            print('epoch: %d loss: %.3f training accuracy: %.3f val accuracy: %.3f' %
                  (epoch + 1, running_loss / i, training_accuracy / i, test_accuracy / j))
        return history


def accuracy(out, yb):
    # Fraction of rows whose highest-scoring class matches the label.
    preds = torch.argmax(out, dim=1)
    return (preds == yb).float().mean()


X, y, trainset, trainloader, testset, testloader, num_classes = generate_data('checker_board-2', 3000)
num_experts = 2
num_classes = 2
model = single_model_deep(2062, num_experts, num_classes)
optimizer = optim.RMSprop(model.parameters(), lr=0.001, momentum=0.9)
epochs = 40
hist = model.fit(trainloader, testloader, optimizer, nn.CrossEntropyLoss(), accuracy, epochs=epochs)
```
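For scale, the width formula in `single_model_deep.__init__` can be evaluated by hand for `single_model_deep(2062, num_experts=2, num_classes=2)`. The sketch below is plain Python; `layer_widths` and `count_linear_params` are hypothetical helpers that mirror that formula and count the weights and biases of the four `nn.Linear` layers:

```python
from math import ceil, sqrt


def layer_widths(parameters, num_experts):
    # Same width formula as single_model_deep.__init__.
    output = ceil((sqrt(6 * parameters + 9) / 3 - 1) / (num_experts + 1))
    output = max(output, 1)
    hidden = (num_experts + 1) * output
    return [2, hidden, hidden, (num_experts + 1) * int(output / 2)]


def count_linear_params(sizes):
    # Each nn.Linear(n_in, n_out) holds n_in * n_out weights plus n_out biases.
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))


sizes = layer_widths(2062, 2) + [2]  # append num_classes = 2 for the output layer
print(sizes)                         # [2, 39, 39, 18, 2]
print(count_linear_params(sizes))    # 2435
```

So the network has about 2.4k parameters, and the largest matrix product in a training step is a 32 x 39 batch times a 39 x 39 weight matrix.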

torch.utils.bottleneck output

```text
`bottleneck` is a tool that can be used as an initial step for debugging
bottlenecks in your program.

It summarizes runs of your script with the Python profiler and PyTorch's
autograd profiler. Because your script will be profiled, please ensure that it
exits in a finite amount of time.

For more complicated uses of the profilers, please see
https://docs.python.org/3/library/profile.html and
https://pytorch.org/docs/master/autograd.html#profiler for more information.
Running environment analysis...
Running your script with cProfile
device cuda:0
```

```text
--------------------------------------------------------------------------------
  Environment Summary
--------------------------------------------------------------------------------
PyTorch 1.6.0 compiled w/ CUDA 10.2
Running with Python 3.8 and

`pip3 list` truncated output:
numpy==1.19.1
torch==1.6.0
torchvision==0.7.0
```

```text
--------------------------------------------------------------------------------
  cProfile output
--------------------------------------------------------------------------------
         3731069 function calls (3669088 primitive calls) in 8.131 seconds

   Ordered by: internal time
   List reduced from 6411 to 15 due to restriction <15>

   ncalls  tottime  percall  cumtime  percall filename:lineno(function)
     6088    2.146    0.000    2.146    0.000 {method 'to' of 'torch._C._TensorBase' objects}
     3000    1.026    0.000    1.026    0.000 {method 'run_backward' of 'torch._C._EngineBase' objects}
    12160    0.570    0.000    0.570    0.000 {built-in method addmm}
    48000    0.330    0.000    0.330    0.000 {method 'mul_' of 'torch._C._TensorBase' objects}
    48000    0.290    0.000    0.290    0.000 {method 'add_' of 'torch._C._TensorBase' objects}
   360000    0.239    0.000    0.239    0.000 /home/local/peac004/anaconda3/envs/pytorch1.6.0/lib/python3.8/site-packages/torch/utils/data/dataset.py:162(<genexpr>)
     6080    0.166    0.000    0.166    0.000 {built-in method stack}
    24000    0.163    0.000    0.163    0.000 {method 'sqrt' of 'torch._C._TensorBase' objects}
     3000    0.144    0.000    1.229    0.000 /home/local/peac004/anaconda3/envs/pytorch1.6.0/lib/python3.8/site-packages/torch/optim/rmsprop.py:55(step)
      790    0.140    0.000    0.140    0.000 {method 'read' of '_io.BufferedReader' objects}
    24000    0.135    0.000    0.135    0.000 {method 'addcmul_' of 'torch._C._TensorBase' objects}
    24000    0.115    0.000    0.115    0.000 {method 'addcdiv_' of 'torch._C._TensorBase' objects}
33400/6040    0.101    0.000    1.164    0.000 /home/local/peac004/anaconda3/envs/pytorch1.6.0/lib/python3.8/site-packages/torch/nn/modules/module.py:710(_call_impl)
     9120    0.096    0.000    0.096    0.000 {built-in method relu}
    23992    0.093    0.000    0.093    0.000 {method 'zero_' of 'torch._C._TensorBase' objects}
```
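The call counts in the cProfile table appear to line up with the script's settings: 2400 training points in batches of 32 give 75 batches per epoch, the test split adds one batch, and there are 40 epochs. A plain-Python sketch of that arithmetic follows. The per-call breakdown is an assumption on my part, not something the profiler states: in particular, that `model.to(device)` calls `Tensor.to` once per parameter tensor, and that cProfile counts three resumptions of `TensorDataset.__getitem__`'s generator per sample.

```python
from math import ceil

# Settings from the script: 3000 points split 80/20, batch size 32, 40 epochs.
n_train, n_test = 2400, 600
batch_size, epochs = 32, 40
train_batches = ceil(n_train / batch_size)          # 75 per epoch
test_batches = 1                                    # testloader uses batch_size=len(testset)
batches = (train_batches + test_batches) * epochs   # 3040 in total

linear_layers, relu_layers = 4, 3
param_tensors = 2 * linear_layers                   # one weight and one bias per nn.Linear

expected = {
    # one backward pass (and one optimizer step) per training batch
    "run_backward": train_batches * epochs,
    # inputs and labels copied per batch, plus (assumed) one call per parameter in model.to
    "to": 2 * batches + param_tensors,
    # default_collate stacks the inputs and the labels of every batch
    "stack": 2 * batches,
    # one addmm per nn.Linear per forward pass, training and test
    "addmm": linear_layers * batches,
    "relu": relu_layers * batches,
    # assumed: 2 yields + 1 StopIteration per sample, for every sample, every epoch
    "dataset_genexpr": 3 * (n_train + n_test) * epochs,
}
for name, count in expected.items():
    print(f"{name:>16}: {count}")
```

If these assumptions hold, the `TensorDataset` indexing work grows with the number of samples (one `__getitem__` per point), while `to` and `stack` grow with the number of batches.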

```text
--------------------------------------------------------------------------------
  autograd profiler output (CPU mode)
--------------------------------------------------------------------------------
top 15 events sorted by cpu_time_total

---------------  ----------------  --------------  -----------  ----------  ------------  ------------  ----------  -------------  ---------------  ------------
Name             Self CPU total %  Self CPU total  CPU total %  CPU total   CPU time avg  CUDA total %  CUDA total  CUDA time avg  Number of Calls  Input Shapes
---------------  ----------------  --------------  -----------  ----------  ------------  ------------  ----------  -------------  ---------------  ------------
stack            15.90%            194.232ms       15.90%       194.232ms   194.232ms     NaN           0.000us     0.000us        1                []
cat              15.89%            194.190ms       15.89%       194.190ms   194.190ms     NaN           0.000us     0.000us        1                []
_cat             15.89%            194.187ms       15.89%       194.187ms   194.187ms     NaN           0.000us     0.000us        1                []
stack            7.98%             97.506ms        7.98%        97.506ms    97.506ms      NaN           0.000us     0.000us        1                []
cat              7.98%             97.463ms        7.98%        97.463ms    97.463ms      NaN           0.000us     0.000us        1                []
_cat             7.98%             97.459ms        7.98%        97.459ms    97.459ms      NaN           0.000us     0.000us        1                []
TBackward        4.04%             49.404ms        4.04%        49.404ms    49.404ms      NaN           0.000us     0.000us        1                []
t                4.04%             49.401ms        4.04%        49.401ms    49.401ms      NaN           0.000us     0.000us        1                []
stack            4.03%             49.291ms        4.03%        49.291ms    49.291ms      NaN           0.000us     0.000us        1                []
cat              4.03%             49.250ms        4.03%        49.250ms    49.250ms      NaN           0.000us     0.000us        1                []
_cat             4.03%             49.247ms        4.03%        49.247ms    49.247ms      NaN           0.000us     0.000us        1                []
stack            2.16%             26.343ms        2.16%        26.343ms    26.343ms      NaN           0.000us     0.000us        1                []
unsqueeze        2.02%             24.658ms        2.02%        24.658ms    24.658ms      NaN           0.000us     0.000us        1                []
as_strided       2.02%             24.644ms        2.02%        24.644ms    24.644ms      NaN           0.000us     0.000us        1                []
AddmmBackward    2.02%             24.634ms        2.02%        24.634ms    24.634ms      NaN           0.000us     0.000us        1                []
---------------  ----------------  --------------  -----------  ----------  ------------  ------------  ----------  -------------  ---------------  ------------
Self CPU time total: 1.222s
CUDA time total: 0.000us
```

```text
--------------------------------------------------------------------------------
  autograd profiler output (CUDA mode)
--------------------------------------------------------------------------------
top 15 events sorted by cpu_time_total

Because the autograd profiler uses the CUDA event API,
the CUDA time column reports approximately max(cuda_time, cpu_time).
Please ignore this output if your code does not use CUDA.

---------------  ----------------  --------------  -----------  ----------  ------------  ------------  ----------  -------------  ---------------  ------------
Name             Self CPU total %  Self CPU total  CPU total %  CPU total   CPU time avg  CUDA total %  CUDA total  CUDA time avg  Number of Calls  Input Shapes
---------------  ----------------  --------------  -----------  ----------  ------------  ------------  ----------  -------------  ---------------  ------------
stack            16.39%            200.653ms       16.39%       200.653ms   200.653ms     16.44%        200.594ms   200.594ms      1                []
cat              16.37%            200.442ms       16.37%       200.442ms   200.442ms     16.41%        200.278ms   200.278ms      1                []
_cat             16.37%            200.435ms       16.37%       200.435ms   200.435ms     16.41%        200.273ms   200.273ms      1                []
stack            7.81%             95.617ms        7.81%        95.617ms    95.617ms      7.82%         95.463ms    95.463ms       1                []
cat              7.79%             95.413ms        7.79%        95.413ms    95.413ms      7.80%         95.138ms    95.138ms       1                []
_cat             7.79%             95.406ms        7.79%        95.406ms    95.406ms      7.80%         95.133ms    95.133ms       1                []
TBackward        4.09%             50.112ms        4.09%        50.112ms    50.112ms      4.11%         50.112ms    50.112ms       1                []
t                4.09%             50.105ms        4.09%        50.105ms    50.105ms      4.11%         50.106ms    50.106ms       1                []
stack            3.30%             40.384ms        3.30%        40.384ms    40.384ms      3.30%         40.224ms    40.224ms       1                []
cat              3.28%             40.199ms        3.28%        40.199ms    40.199ms      3.27%         39.907ms    39.907ms       1                []
_cat             3.28%             40.194ms        3.28%        40.194ms    40.194ms      3.27%         39.902ms    39.902ms       1                []
stack            3.08%             37.701ms        3.08%        37.701ms    37.701ms      3.09%         37.747ms    37.747ms       1                []
stack            2.18%             26.649ms        2.18%        26.649ms    26.649ms      2.00%         24.450ms    24.450ms       1                []
AddmmBackward    2.08%             25.521ms        2.08%        25.521ms    25.521ms      2.09%         25.521ms    25.521ms       1                []
mm               2.08%             25.423ms        2.08%        25.423ms    25.423ms      2.08%         25.410ms    25.410ms       1                []
---------------  ----------------  --------------  -----------  ----------  ------------  ------------  ----------  -------------  ---------------  ------------
Self CPU time total: 1.224s
CUDA time total: 1.220s
```
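The largest entries in the cProfile output are `to` calls and data-loading work (the `dataset.py` generator expression and `stack`), not the layers themselves. Part of the `to` time may be the one-off CUDA initialization triggered by the first transfer. One commonly suggested alternative for a dataset this small is to copy the tensors to the device once and slice shuffled index batches there, skipping per-sample indexing, collation and per-batch copies. A minimal sketch, assuming the whole split fits in GPU memory. `iterate_minibatches` is a hypothetical helper, not a PyTorch API, and the two-layer model is a stand-in. The output above does not show whether this raises utilization for such a small network.

```python
import torch
import torch.nn as nn


def iterate_minibatches(x, y, batch_size, shuffle=True):
    """Yield (inputs, labels) mini-batches from tensors that already live on one device.

    Hypothetical stand-in for TensorDataset + DataLoader: it gathers a whole batch
    with one indexing operation and never copies data between host and device.
    """
    n = x.shape[0]
    if shuffle:
        order = torch.randperm(n, device=x.device)
    else:
        order = torch.arange(n, device=x.device)
    for start in range(0, n, batch_size):
        idx = order[start:start + batch_size]
        yield x[idx], y[idx]


if __name__ == "__main__":
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    # Stand-in data shaped like the post's training split, using the same labeling rule.
    x_train = torch.rand(2400, 2) * 2 - 1
    y_train = torch.ceil(2 * x_train).long().sum(dim=1) % 2

    # A single host-to-device copy for the whole split instead of one per batch.
    x_dev, y_dev = x_train.to(device), y_train.to(device)

    model = nn.Sequential(nn.Linear(2, 39), nn.ReLU(), nn.Linear(39, 2)).to(device)
    optimizer = torch.optim.RMSprop(model.parameters(), lr=0.001, momentum=0.9)
    loss_fn = nn.CrossEntropyLoss()

    for epoch in range(2):
        for inputs, labels in iterate_minibatches(x_dev, y_dev, batch_size=32):
            optimizer.zero_grad()
            loss = loss_fn(model(inputs), labels)
            loss.backward()
            optimizer.step()
        print("epoch", epoch + 1, "last batch loss", loss.item())
```

The permutation is drawn with `device=x.device` so shuffling also stays on the device.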