Hi Alessandro!

10 epochs of training does not sound like a lot to me. (As always, this

will depend on the details of your use case.)

Networks for reasonably complicated image-processing tasks are often

trained on hundreds or thousands (or more!) epochs.

I would suggest training on a training dataset, and then tracking the

performance on a separate validation dataset. You would certainly

want to track the loss on both datasets, and you could also look at

other performance metrics (such as accuracy).

People most commonly compute the loss (and other metrics) for both

datasets after each epoch.

As long as your validation-dataset loss is still going down, your network

is still learning features, etc., relevant to your real problem. This is true

even if your validation-dataset loss is significantly higher than your

training-dataset loss – what matters is that the validation-dataset loss

is still going down.

(If your validation-dataset loss starts going up, even though your

training-dataset loss is still going down, you have likely started to

“overfit” and further training, without changing something else about

what you are doing, won’t help – and will likely degrade – your

real-world performance.)

You could argue this either way:

My intuition tells me that if all you want to do is distinguish “foreground”

from “background” (i.e., all non-background classes are collapsed into

a single foreground class), then it will be easier to train with one single

foreground class, and you will get better performance (on this simplified

problem). The idea is that this problem easier and that your network

won’t be “wasting effort” on details that aren’t relevant (to your simplified

performance metric).

On the other hand, if you collapse all of your non-background classes

into a single foreground class, you would be “hiding” information from

the network. Perhaps the information in differences between those classes

helps the network better learn the most important features, even for

merely distinguishing foreground from background. So maybe the network

will train better if you keep the multiple foreground classes separate.

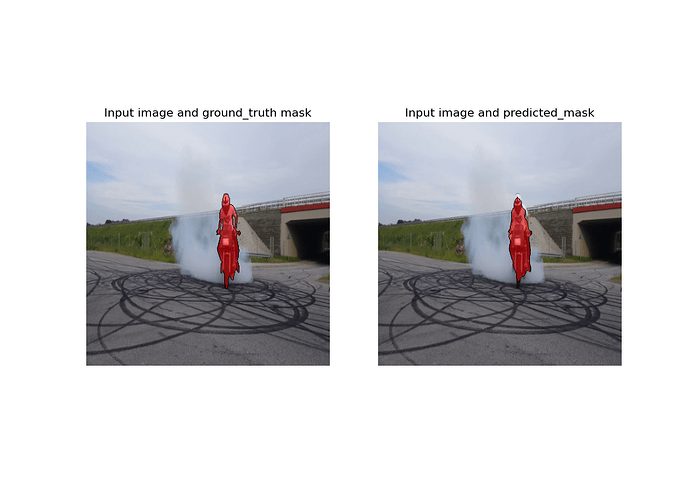

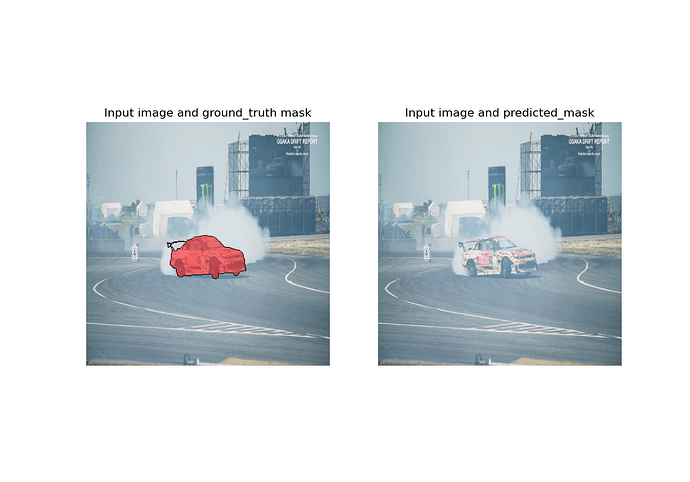

Well, yes, a bug could always be the cause of your issues, so it’s always

worthwhile double-checking your code. Having said that, although I can’t

rule out a bug, I don’t see obvious symptoms of a bug in the results you’ve

posted.

Best.

K. Frank