Hi all,

My project is grammar correction based on a multi-head attention model. I have two datasets: the first is synthetic data (3 GB) and the second is the original data (200 MB). My training strategy is as follows:

-

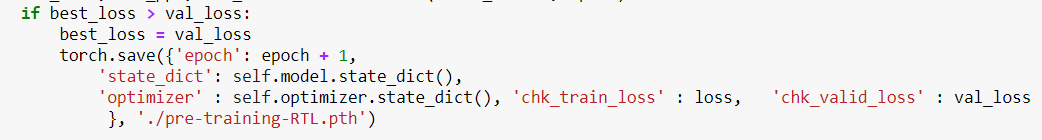

Load the pre-training data and start training to save the best version.

-

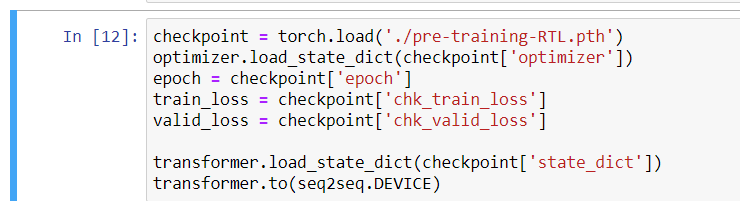

Change the learning rate and the number of epochs, load the pre-training model and continue training the model with the original dataset.
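To make the two steps concrete, here is a minimal sketch in plain Python. It is only an illustration: a one-weight linear model stands in for the attention model, and the data, function names (`mse`, `train`), learning rates, and epoch counts are all hypothetical. The point is the schedule itself, plus a from-scratch baseline scored on the same held-out set so the two runs are compared like for like.

```python
def mse(w, data):
    """Mean squared error of the one-weight model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(w, train_data, dev_data, lr, epochs):
    """Plain gradient descent; returns the weight with the best dev loss."""
    best_w, best_loss = w, mse(w, dev_data)
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in train_data) / len(train_data)
        w -= lr * grad
        loss = mse(w, dev_data)
        if loss < best_loss:  # keep the best version seen so far
            best_w, best_loss = w, loss
    return best_w, best_loss


# Hypothetical toy data: a large synthetic set whose slope (2.2) differs
# slightly from that of the small original set (2.0).
synthetic = [(x / 10, 2.2 * x / 10) for x in range(1, 101)]
original = [(x / 10, 2.0 * x / 10) for x in range(1, 11)]
dev = [(x / 10, 2.0 * x / 10) for x in range(11, 16)]

# Step 1: pre-train on the synthetic data and keep the best checkpoint.
pretrained_w, _ = train(0.0, synthetic, dev, lr=0.01, epochs=50)

# Step 2: start from the pre-trained weight, change the learning rate and
# the number of epochs, and continue training on the original data.
finetuned_w, finetuned_loss = train(pretrained_w, original, dev, lr=0.005, epochs=20)

# Baseline: the same model trained from scratch on the original data only.
scratch_w, scratch_loss = train(0.0, original, dev, lr=0.005, epochs=20)

print("fine-tuned dev loss:", finetuned_loss)
print("from-scratch dev loss:", scratch_loss)
```

In this toy setting the pre-trained start helps, which is the behaviour I expected from my real model as well.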

I am confused by the results I get when training from the pre-trained model: the scores are unexpectedly lower than those of the same model trained from scratch without pre-training. I cannot explain this, especially given the difference in size between the two datasets.

Any suggestions for overcoming this issue?

Kind regards,

Aiman Solyman