I am using this model:

I need to train the model to classify a series of (volcanic) videos, each of which can belong to one or more of 8 different classes. For example:

- video 1 belongs to classes 1, 4, 6
- video 2 belongs to class 3
- video 3 belongs to classes 2, 5

etc…

Each class has a binary label 1 (belongs) or 0 (does not belong).

My idea was to compute a separate loss for each of the 8 classes, passing each one the weight of its own class.

These are my class weights:

    class_weight = [5.56, 51.5, 3.67, 1.39, 5.36, 2.82, 5.18, 1.76]

    def binary_loss_fn(outputs, targets):
        t1, t2, t3, t4, t5, t6, t7, t8 = targets
        o1, o2, o3, o4, o5, o6, o7, o8 = outputs
        l1 = nn.BCEWithLogitsLoss(pos_weight=class_weight[0], reduction='none')(o1, t1)
        l2 = nn.BCEWithLogitsLoss(pos_weight=class_weight[1], reduction='none')(o2, t2)
        l3 = nn.BCEWithLogitsLoss(pos_weight=class_weight[2], reduction='none')(o3, t3)
        l4 = nn.BCEWithLogitsLoss(pos_weight=class_weight[3], reduction='none')(o4, t4)
        l5 = nn.BCEWithLogitsLoss(pos_weight=class_weight[4], reduction='none')(o5, t5)
        l6 = nn.BCEWithLogitsLoss(pos_weight=class_weight[5], reduction='none')(o6, t6)
        l7 = nn.BCEWithLogitsLoss(pos_weight=class_weight[6], reduction='none')(o7, t7)
        l8 = nn.BCEWithLogitsLoss(pos_weight=class_weight[7], reduction='none')(o8, t8)
        return (l1 + l2 + l3 + l4 + l5 + l6 + l7 + l8) / 8
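For comparison, here is a minimal sketch of how the eight per-class losses might be folded into one call. It assumes the model returns raw logits of shape `[batch, 8]` and that the eight targets are stacked into a float tensor of the same shape. As far as I know, `pos_weight` has to be a tensor (one entry per class) rather than a plain Python float. The name `multilabel_loss` is made up for this example.

```python
import torch
import torch.nn as nn

class_weight = [5.56, 51.5, 3.67, 1.39, 5.36, 2.82, 5.18, 1.76]

# One positive-class weight per class; it broadcasts over the batch dimension.
pos_weight = torch.tensor(class_weight, dtype=torch.float32)
criterion = nn.BCEWithLogitsLoss(pos_weight=pos_weight)  # default reduction='mean'

def multilabel_loss(logits, targets):
    """logits and targets are float tensors of shape [batch, 8]; logits are pre-sigmoid."""
    return criterion(logits, targets)

# Toy batch: video 1 belongs to classes 1, 4, 6 and video 2 to class 3.
logits = torch.zeros(2, 8)
targets = torch.tensor([[1, 0, 0, 1, 0, 1, 0, 0],
                        [0, 0, 1, 0, 0, 0, 0, 0]], dtype=torch.float32)
loss = multilabel_loss(logits, targets)
print(loss.dim())  # 0, i.e. a scalar that loss.item() and loss.backward() accept
```

With the default `reduction='mean'` the result is averaged over both the batch and the 8 classes, so no manual division by 8 is needed.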

My dataset is structured as follows:

210 videos of 300 frames each. From each video I kept one frame in every five (frames 1, 6, 11, 16, 21, …, for a total of 60 frames) and grouped them into 29 overlapping clips of 32 frames each, sliding a window over the subsampled frames one position at a time:

- first clip: subsampled frames 1-32
- second clip: window shifted by 1 (subsampled frames 2-33)
- third clip: window shifted by 1 again (subsampled frames 3-34)

etc…

The resulting array has shape 210x29x32.
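The windowing described above can be sketched in plain Python. The function name `make_clips` and its parameters are made up for illustration; the numbers (300 frames, one in five, 32-frame clips) are the ones from the description.

```python
def make_clips(num_frames=300, step=5, clip_len=32):
    """Return overlapping clips of frame numbers for one video."""
    # Keep one frame in every `step`: 1, 6, 11, ..., 296 (60 frames).
    sampled = list(range(1, num_frames + 1, step))
    # Slide a window of `clip_len` frames over them, one position at a time.
    return [sampled[s:s + clip_len] for s in range(len(sampled) - clip_len + 1)]

clips = make_clips()
print(len(clips), len(clips[0]))  # 29 32
```

60 subsampled frames and a 32-frame window give 60 - 32 + 1 = 29 clips per video, which matches the 210x29x32 array.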

The input to the S3D model is these 32 frames, concatenated with PyTorch and resized. For a batch size of 2 its shape is:

    torch.Size([2, 3, 32, 224, 224])

Each clip is associated with a list of 8 targets, one per class (1 or 0).

    def fit(model, train_dataloader):
        print('Training')
        model.train()
        train_running_loss = 0.0
        train_running_correct = 0
        for i, data in tqdm(enumerate(train_dataloader), total=int(len(train_data)/train_dataloader.batch_size)):
            #data, target, path = data[0].to(device), data[1].to(device), data[2]
            # extract the features and labels
            video = data['video'].to(device)
            target1 = data['label1'].to(device)
            target2 = data['label2'].to(device)
            target3 = data['label3'].to(device)
            target4 = data['label4'].to(device)
            target5 = data['label5'].to(device)
            target6 = data['label6'].to(device)
            target7 = data['label7'].to(device)
            target8 = data['label8'].to(device)
            path = data['path']
            optimizer.zero_grad()
            outputs = model(video)
            targets = (target1, target2, target3, target4, target5, target6, target7, target8)
            outputs = torch.sigmoid(outputs)
            loss = binary_loss_fn(outputs, targets)
            train_running_loss += loss.item()
            preds = torch.tensor([[1 if i >= 0.5 else 0 for i in j] for j in outputs]).to(device)
            train_running_correct = (preds == target).sum().item()
            loss.backward()
            optimizer.step()
        train_loss = train_running_loss/len(train_dataloader.dataset)
        train_accuracy = 100. * train_running_correct/len(train_dataloader.dataset)
        return train_loss, train_accuracy

This is my fit function, where I pass my targets and the model's output to binary_loss_fn. I specify the number of classes when I initialize the model:
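One possible shape for a single training step, as a sketch only. It assumes the batch dictionary has the keys `video` and `label1` to `label8` as in the dataset, that the model returns logits of shape `[batch, 8]`, and that the loss is `nn.BCEWithLogitsLoss`, which applies the sigmoid internally (so calling `torch.sigmoid` before the loss would apply it twice). `train_step` and the tiny stand-in model are made up for this example and are not S3D.

```python
import torch
import torch.nn as nn

def train_step(model, batch, criterion, optimizer, device="cpu"):
    video = batch["video"].to(device)
    # Stack label1..label8 (each of shape [batch]) into one [batch, 8] tensor.
    targets = torch.stack([batch[f"label{k}"] for k in range(1, 9)], dim=1).to(device)

    optimizer.zero_grad()
    logits = model(video)              # [batch, 8], no sigmoid here
    loss = criterion(logits, targets)  # the loss applies the sigmoid itself
    loss.backward()
    optimizer.step()

    # The sigmoid is only needed to turn logits into probabilities for thresholding.
    preds = (torch.sigmoid(logits) >= 0.5).float()
    correct = (preds == targets).sum().item()  # correct label decisions in this batch
    return loss.item(), correct

# Stand-in model and random batch, only to exercise the function.
torch.manual_seed(0)
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 4 * 8 * 8, 8))
criterion = nn.BCEWithLogitsLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
batch = {"video": torch.randn(2, 3, 4, 8, 8)}
batch.update({f"label{k}": torch.randint(0, 2, (2,)).float() for k in range(1, 9)})
loss_value, correct = train_step(model, batch, criterion, optimizer)
```

In a full loop the returned `correct` counts would be accumulated with `+=` across batches and divided by the number of samples times 8 to get a per-label accuracy.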

    model = S3D(8).to(device)

But I can't work out what kind of output I get from S3D, because I am unable to compute my 8 loss functions individually.

This is a typical model output with a batch size of 2:

    torch.tensor([
        [0.4150, 0.6833, 0.4165, 0.4718, 0.4183, 0.5799, 0.4773, 0.3026],
        [0.4923, 0.5274, 0.4894, 0.5042, 0.4440, 0.4746, 0.4580, 0.4449]
    ])
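To make the layout of that tensor concrete, here is a small sketch, assuming each row is one video in the batch and each column is one of the 8 classes. Unpacking a tensor directly iterates over its first dimension (the batch), so with a batch of 2 it cannot be unpacked into 8 names; `unbind(dim=1)` splits along the class dimension instead.

```python
import torch

outputs = torch.tensor([
    [0.4150, 0.6833, 0.4165, 0.4718, 0.4183, 0.5799, 0.4773, 0.3026],
    [0.4923, 0.5274, 0.4894, 0.5042, 0.4440, 0.4746, 0.4580, 0.4449],
])
print(outputs.shape)  # torch.Size([2, 8])

# Split along dim=1 to get one tensor per class, each of shape [batch].
per_class = outputs.unbind(dim=1)
o1, o2, o3, o4, o5, o6, o7, o8 = per_class
print(o2)  # values for class 2 across the batch

# Thresholding the whole tensor at once replaces the nested list comprehension.
preds = (outputs >= 0.5).int()
print(preds.tolist())  # [[0, 1, 0, 0, 0, 1, 0, 0], [0, 1, 0, 1, 0, 0, 0, 0]]
```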

In case it is useful, this is my dataset class:

    class ImageDataset(Dataset):
        def __init__(self, images, labels=None):
            self.X = images
            self.y = labels

        def __len__(self):
            rows = len(self.X)
            columns = len(self.X[0])
            total_length = rows * columns
            return (total_length)

        def __getitem__(self, i):
            col = len(self.X[0])
            a = i//col
            b = (i%col)-1
            image = self.X[a][b]
            tensor_sequence = []
            for idx in range(0, len(image)):
                path_img = image[idx]
                tensor_img = tensor[path_img]
                tensor_sequence.append(tensor_img)
            video = torch.cat(tensor_sequence, 1)
            x = path_img.split("data/videos/")
            path = x[1].split(".avi/EMOT")
            path=path[0]
            col = len(self.y[0])
            a1 = i//col
            b1 = (i%col)-1
            label = self.y[a1][b1]
            target = [int(i) for i in label[0].split(",")]
            label1 = torch.tensor(target[0], dtype=torch.float32)
            label2 = torch.tensor(target[1], dtype=torch.float32)
            label3 = torch.tensor(target[2], dtype=torch.float32)
            label4 = torch.tensor(target[3], dtype=torch.float32)
            label5 = torch.tensor(target[4], dtype=torch.float32)
            label6 = torch.tensor(target[5], dtype=torch.float32)
            label7 = torch.tensor(target[6], dtype=torch.float32)
            label8 = torch.tensor(target[7], dtype=torch.float32)
            return {
                'video': video,
                'label1': label1,
                'label2': label2,
                'label3': label3,
                'label4': label4,
                'label5': label5,
                'label6': label6,
                'label7': label7,
                'label8': label8,
                'path': path
            }
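A sketch of two simplifications that might help in `__getitem__`, under the assumption that each label is a comma-separated string of eight 0/1 values such as `"1,0,0,1,0,1,0,0"`. The helper names `locate` and `parse_label` are made up for this example. Note that `(i % col) - 1` maps the first clip of every video to index -1, i.e. the last clip, whereas `divmod` gives the row and column directly.

```python
import torch

def locate(i, num_cols):
    """Map a flat dataset index to (video index, clip index)."""
    return divmod(i, num_cols)

def parse_label(label_str):
    """Turn a string like '1,0,0,1,0,1,0,0' into one float tensor of shape [8]."""
    return torch.tensor([int(v) for v in label_str.split(",")], dtype=torch.float32)

print(locate(0, 29))   # (0, 0)
print(locate(29, 29))  # (1, 0)
target = parse_label("1,0,0,1,0,1,0,0")
print(target.shape)    # torch.Size([8])
```

Returning a single `'target'` tensor of shape `[8]` per sample lets the default `DataLoader` collation stack the labels into a `[batch, 8]` tensor, which has the same shape as the model output.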

    # Defining the training, validation and test datasets
    train_data = ImageDataset(xtrain, ytrain)
    val_data = ImageDataset(xval, yval)
    test_data = ImageDataset(xtest, ytest)

    # dataloaders
    trainloader = DataLoader(train_data, batch_size=batch_size, shuffle=True)
    valloader = DataLoader(val_data, batch_size=batch_size, shuffle=False)
    testloader = DataLoader(test_data, batch_size=batch_size, shuffle=False)

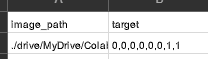

This is my csv file where for each image path I have its associated binarized target:

How could I fix this? Thank you