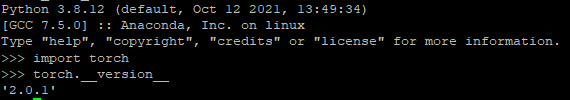

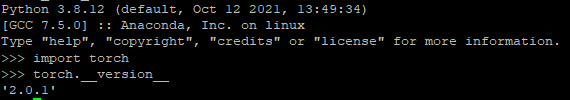

My PyTorch version is 2.0.1, which I believe is the latest release.

And yes, your sample still works.

However, my main code raises this error:

```
File ~/anaconda3/envs/xgb3_ray2_pytorch/lib/python3.8/site-packages/torch/nn/modules/module.py:1501, in Module._call_impl(self, *args, **kwargs)
1496 # If we don't have any hooks, we want to skip the rest of the logic in
1497 # this function, and just call forward.
1498 if not (self._backward_hooks or self._backward_pre_hooks or self._forward_hooks or self._forward_pre_hooks
1499 or _global_backward_pre_hooks or _global_backward_hooks
1500 or _global_forward_hooks or _global_forward_pre_hooks):
-> 1501 return forward_call(*args, **kwargs)
1502 # Do not call functions when jit is used
1503 full_backward_hooks, non_full_backward_hooks = [], []

File ~/anaconda3/envs/xgb3_ray2_pytorch/lib/python3.8/site-packages/torch/nn/modules/loss.py:1174, in CrossEntropyLoss.forward(self, input, target)
1173 def forward(self, input: Tensor, target: Tensor) -> Tensor:
-> 1174 return F.cross_entropy(input, target, weight=self.weight,
1175 ignore_index=self.ignore_index, reduction=self.reduction,
1176 label_smoothing=self.label_smoothing)

File ~/anaconda3/envs/xgb3_ray2_pytorch/lib/python3.8/site-packages/torch/nn/functional.py:3029, in cross_entropy(input, target, weight, size_average, ignore_index, reduce, reduction, label_smoothing)
3027 if size_average is not None or reduce is not None:
3028 reduction = _Reduction.legacy_get_string(size_average, reduce)
-> 3029 return torch._C._nn.cross_entropy_loss(input, target, weight, _Reduction.get_enum(reduction), ignore_index, label_smoothing)

RuntimeError: "nll_loss_forward_reduce_cuda_kernel_2d_index" not implemented for 'Float'
```

Do you have any suggestions?
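For context, the `..._2d_index` kernel named in the error is the class-index path of `CrossEntropyLoss`, and `'Float'` refers to the dtype of the `target` tensor. A plausible cause, assumed here and not confirmed from the main code, is that the targets are class indices stored as `float32` rather than `int64`. The sketch below reproduces that situation on CPU with hypothetical toy data (`logits`, `targets_float` are illustrative names, not from the original code) and shows that casting the targets with `.long()` avoids the error.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy data: 4 samples, 3 classes (not from the original post).
logits = torch.randn(4, 3)                           # model output, shape (N, C)
targets_float = torch.tensor([0.0, 2.0, 1.0, 2.0])   # class indices stored as float32

criterion = nn.CrossEntropyLoss()

# Float targets of shape (N,) go down the class-index path, which only
# accepts integer targets, so this raises a RuntimeError (on CUDA it names
# the nll_loss_forward_reduce_cuda_kernel_2d_index kernel).
try:
    criterion(logits, targets_float)
    failed = False
except RuntimeError:  # also catches NotImplementedError, a RuntimeError subclass
    failed = True

# Casting the indices to int64 (torch.long) makes the call succeed.
targets = targets_float.long()
loss = criterion(logits, targets)
print(failed, loss.item())
```

If the float targets are meant to be class probabilities rather than indices, they instead need the same shape `(N, C)` as the input.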