Hi everyone,

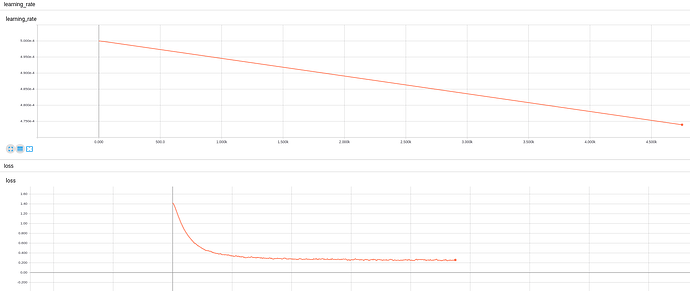

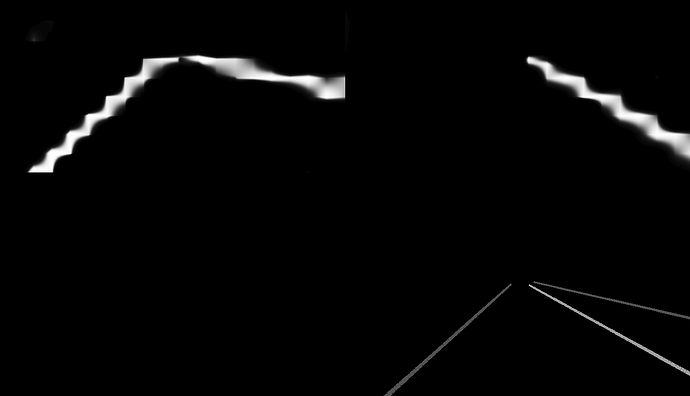

I rebuilt ResNet-18 using PyTorch's pretrained weights for a segmentation task. I trained the model, but the network has not learned anything. Is this written correctly? Is there a more concise way to write it? I don't use the average pool and fc layers of ResNet-18, but I still want to use the PyTorch pretrained weights.

Thanks!

    import torch.nn as nn
    import torchvision


    class ResNet18(nn.Module):
        def __init__(self, out_dim, *args, **kwargs):
            super(ResNet18, self).__init__(*args, **kwargs)
            # Load the ImageNet-pretrained ResNet-18 and reuse its layers.
            resnet18 = torchvision.models.resnet18(pretrained=True)
            self.conv1 = resnet18.conv1
            self.bn1 = resnet18.bn1
            self.relu = resnet18.relu
            self.maxpool = resnet18.maxpool
            self.c1 = resnet18.layer1
            self.c2 = resnet18.layer2
            self.c3 = resnet18.layer3
            self.c4 = resnet18.layer4
            # avgpool and fc are skipped; one transposed conv upsamples by 32x.
            self.deconv = nn.ConvTranspose2d(512, out_dim, kernel_size=32, stride=32, padding=0, output_padding=0)

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)
            x = self.c1(x)
            x = self.c2(x)
            x = self.c3(x)
            x = self.c4(x)
            x = self.deconv(x)
            # The output is wrapped in a one-element tuple.
            return (x,)