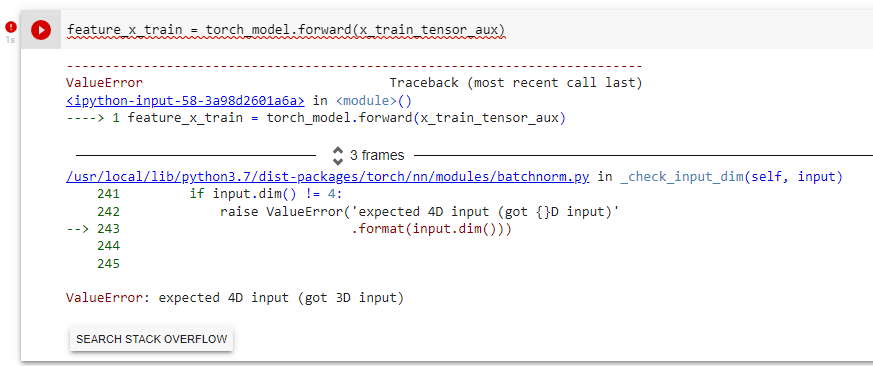

I created a torch model from a previously trained model, and I need to pass x_train (a NumPy array) through it to create features. I wrote a forward for this and converted the NumPy array into a tensor, but I get this error even though the tensor is already 4D. Can anyone help me?
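For reference, a minimal sketch of the NumPy-to-tensor step, assuming x_train is laid out as (N, C, H, W). The random array below is a hypothetical stand-in for the real data. The `.float()` call matters because NumPy defaults to float64 while `nn` layers expect float32 by default.

```python
import numpy as np
import torch

# Hypothetical stand-in for x_train: N images, C channels, H x W pixels
x_train = np.random.rand(5, 3, 16, 16)  # float64 by default in NumPy

x = torch.from_numpy(x_train).float()  # nn layers expect float32 by default
print(x.shape, x.dtype)  # torch.Size([5, 3, 16, 16]) torch.float32
assert x.dim() == 4, f"expected 4D input, got {x.dim()}D"
```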

The error message says that a batchnorm layer receives a 3D tensor, so print the shape of the input as well as the shapes of the activations inside your forward method, and make sure they are 4D where required.
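One way to print those shapes without editing the forward is to register a forward hook on every leaf module. This is a sketch; the small `nn.Sequential` below is a hypothetical stand-in for your model, and in practice you would pass your converted x_train tensor instead of random data.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for the user's model; replace with your own.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.BatchNorm2d(8),
    nn.ReLU(),
).eval()

shapes = []  # collected as (module name, input shape, output shape)

def make_hook(name):
    def hook(module, inputs, output):
        # inputs is the tuple of positional arguments passed to forward
        shapes.append((name, tuple(inputs[0].shape), tuple(output.shape)))
    return hook

for name, module in model.named_modules():
    if len(list(module.children())) == 0:  # hook leaf modules only
        module.register_forward_hook(make_hook(name))

x = torch.randn(2, 3, 16, 16)  # e.g. torch.from_numpy(x_train).float()
print("input:", tuple(x.shape))
with torch.no_grad():
    model(x)
for name, in_shape, out_shape in shapes:
    print(f"{name}: {in_shape} -> {out_shape}")
```

The first module whose input shape is not what you expect points at the place where an operation is missing.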

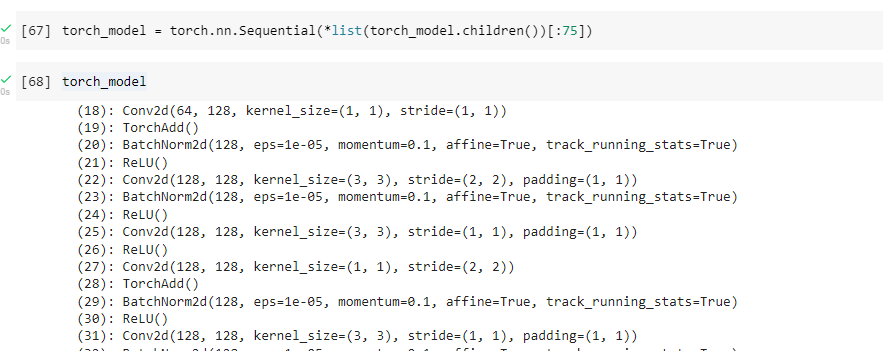

I created a torch model for this. When I run feature_x_train through the original torch model (without deleting any layers), the code in the image above runs smoothly. When I use the command in the image below to delete layers, the problem occurs.

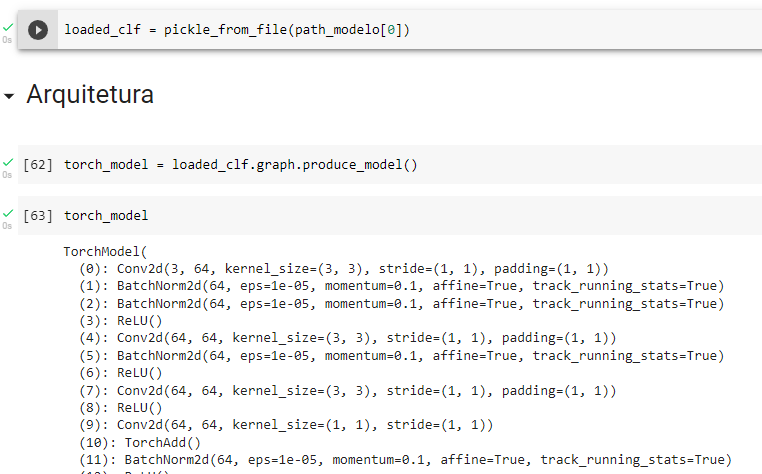

Original torch model:

I guess the original model might use some functional operations in its forward, so wrapping its submodules into an nn.Sequential container might not work directly.
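To illustrate the failure mode, here is a sketch with a hypothetical model (not the one from the thread) whose forward calls `torch.flatten`. Because `flatten` is a function call rather than a child module, `nn.Sequential(*model.children())` silently drops it, and the batchnorm layer then receives a tensor with the wrong number of dimensions.

```python
import torch
import torch.nn as nn

class Original(nn.Module):
    """Hypothetical stand-in for the trained model."""
    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(1, 4, kernel_size=3, padding=1)
        self.bn = nn.BatchNorm1d(4 * 8 * 8)
        self.fc = nn.Linear(4 * 8 * 8, 10)

    def forward(self, x):
        x = self.conv(x)          # (N, 4, 8, 8)
        x = torch.flatten(x, 1)   # functional op: not a child module
        x = self.bn(x)            # BatchNorm1d needs 2D or 3D input
        return self.fc(x)

model = Original().eval()
x = torch.randn(2, 1, 8, 8)

# Rebuilding from children() silently drops the torch.flatten call
naive = nn.Sequential(*model.children())
try:
    naive(x)
    naive_ok = True
except (ValueError, RuntimeError) as err:
    naive_ok = False
    print("Sequential copy fails:", err)

# Re-adding the missing op as a module restores the original behavior
fixed = nn.Sequential(model.conv, nn.Flatten(1), model.bn, model.fc)
with torch.no_grad():
    same = torch.allclose(fixed(x), model(x))
print("fixed matches original:", same)
```

In a real model the missing operation could be anything (a `view`, `unsqueeze`, pooling call, residual addition), which is why reading the original forward is the reliable check.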

Check the model definition, i.e. how the modules are used in the forward, and make sure your new torch_model is not missing any operations. The cleaner way to add new layers might be to derive a new class from the original one, initialize the new layers in its __init__, and use them in its forward.
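A minimal sketch of that subclassing approach, again using a hypothetical `Original` model and a hypothetical `new_head` layer. The subclass reuses every original operation in its forward, and the trained weights are copied with `load_state_dict(..., strict=False)` because the new layer has no counterpart in the trained checkpoint.

```python
import torch
import torch.nn as nn

class Original(nn.Module):
    """Hypothetical stand-in for the trained model."""
    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(1, 4, kernel_size=3, padding=1)
        self.bn = nn.BatchNorm1d(4 * 8 * 8)
        self.fc = nn.Linear(4 * 8 * 8, 10)

    def forward(self, x):
        x = self.conv(x)
        x = torch.flatten(x, 1)
        x = self.bn(x)
        return self.fc(x)

class WithNewHead(Original):
    def __init__(self, num_new_outputs=3):
        super().__init__()  # builds conv, bn and fc exactly as in Original
        # New layers are created in __init__ ...
        self.new_head = nn.Linear(4 * 8 * 8, num_new_outputs)

    def forward(self, x):
        # ... and used in forward, keeping every original op (incl. flatten)
        x = self.conv(x)
        x = torch.flatten(x, 1)
        x = self.bn(x)
        return self.new_head(x)  # self.fc is kept but no longer called

trained = Original()  # in practice: your model with its trained weights loaded
model = WithNewHead()
# Copy the trained weights; new_head has no counterpart, hence strict=False
missing, unexpected = model.load_state_dict(trained.state_dict(), strict=False)
print("missing:", missing, "unexpected:", unexpected)

model.eval()
with torch.no_grad():
    out = model(torch.randn(2, 1, 8, 8))
print(out.shape)  # torch.Size([2, 3])
```

If the goal is only to extract features (the reason for deleting layers here), the same pattern should work with a forward that returns `x` right after `self.bn(x)` instead of adding a new head.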