Hi everyone,

I’m using a custom Dataset that loads data from a single HDF5 (.h5) file. It runs fast and works well with num_workers=6.

However, I have 20 files I would like to load from, so I created 20 instances of this dataset (one per file) and combined them with torch.utils.data.dataset.ConcatDataset.
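For context on what the wrapper does per sample: ConcatDataset keeps a `cumulative_sizes` list and uses `bisect.bisect_right` to map a global index to one underlying dataset plus a local index. Below is a stdlib-only sketch modeled on that lookup (not PyTorch's actual code; the class name `ConcatIndex` and the example sizes are hypothetical stand-ins for the per-file datasets).

```python
import bisect
from itertools import accumulate


class ConcatIndex:
    """Hypothetical sketch of ConcatDataset-style index resolution.

    `sizes` stands in for the lengths of the per-file datasets.
    """

    def __init__(self, sizes):
        # cumulative_sizes[i] = total number of samples in datasets 0..i
        self.cumulative_sizes = list(accumulate(sizes))

    def __len__(self):
        return self.cumulative_sizes[-1] if self.cumulative_sizes else 0

    def locate(self, idx):
        """Return (dataset_idx, sample_idx) for a global index."""
        if idx < 0:
            if -idx > len(self):
                raise IndexError("index out of range")
            idx = len(self) + idx
        if idx >= len(self):
            raise IndexError("index out of range")
        # First dataset whose cumulative size is strictly greater than idx
        dataset_idx = bisect.bisect_right(self.cumulative_sizes, idx)
        start = 0 if dataset_idx == 0 else self.cumulative_sizes[dataset_idx - 1]
        return dataset_idx, idx - start


# Three hypothetical files with 3, 5 and 2 samples
ci = ConcatIndex([3, 5, 2])
print(ci.locate(3))  # first sample of the second file
```

So every global index resolves to exactly one file-backed dataset, and the lookup itself is only a binary search over 20 cumulative lengths.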

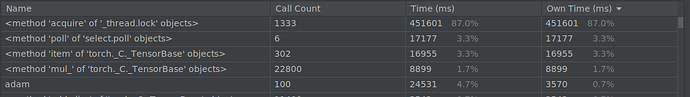

Surprisingly, this runs extremely slowly. As it turns out, 87% of the time is spent waiting.

Things I considered and tried:

- After searching, I found a suggestion to set pin_memory=False (DataLoader: method 'acquire' of '_thread.lock' objects - #2 by bask0). This did not speed anything up.

- I can’t use torch.utils.data.dataset.ChainDataset, since it chains iterable datasets and mine is a map-style (non-iterable) dataset.

- While looking for a solution, I came across DistributedSampler (torch.utils.data — PyTorch 2.1 documentation). I thought it might let different workers handle different subsets of the data, so they wouldn't all need to access the same object (just a guess on my part). However, everything I found about it refers to multi-node/multi-GPU training, which is not what I'm doing.
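To make the DistributedSampler guess above concrete: as far as I can tell from PyTorch's source, with shuffle=False and drop_last=False it pads the index list by repeating indices until it divides evenly, then gives each rank (one process per rank) a strided slice. A stdlib-only sketch of that split follows; the function name `distributed_indices` is hypothetical and shuffling is omitted.

```python
import math


def distributed_indices(dataset_len, num_replicas, rank):
    """Hypothetical sketch of DistributedSampler's split (shuffle=False, drop_last=False).

    Each rank gets every num_replicas-th index; the list is padded by
    repeating indices from the start so all ranks get the same count.
    """
    if not 0 <= rank < num_replicas:
        raise ValueError("rank must be in [0, num_replicas)")
    num_samples = math.ceil(dataset_len / num_replicas)
    total_size = num_samples * num_replicas
    indices = list(range(dataset_len))
    padding = total_size - len(indices)
    if indices and padding:
        # Repeat indices from the start until the list divides evenly
        indices += (indices * math.ceil(padding / len(indices)))[:padding]
    # Strided slice: rank r takes positions r, r + num_replicas, ...
    return indices[rank:total_size:num_replicas]


for r in range(4):
    print(r, distributed_indices(10, 4, r))
```

Note that the split is per process (rank), not per DataLoader worker: with num_replicas=1 the single rank receives every index, and the workers inside that process still call the same dataset's `__getitem__`.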

Does anyone know how to approach this?

Thanks in advance!