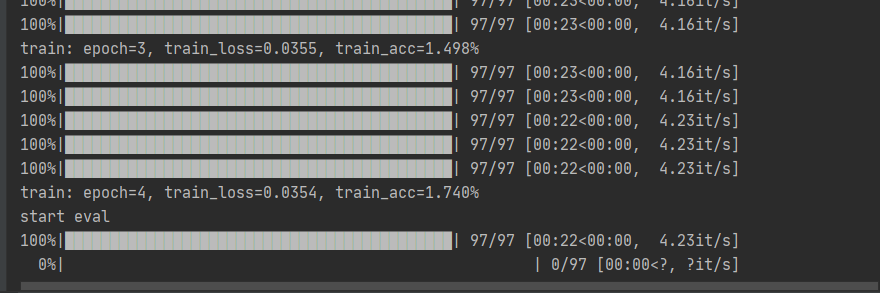

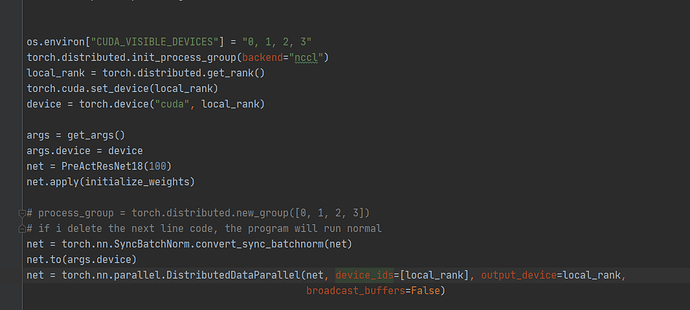

When I use `net = torch.nn.SyncBatchNorm.convert_sync_batchnorm(net)` to replace BN with SyncBatchNorm, the code deadlocks like this:

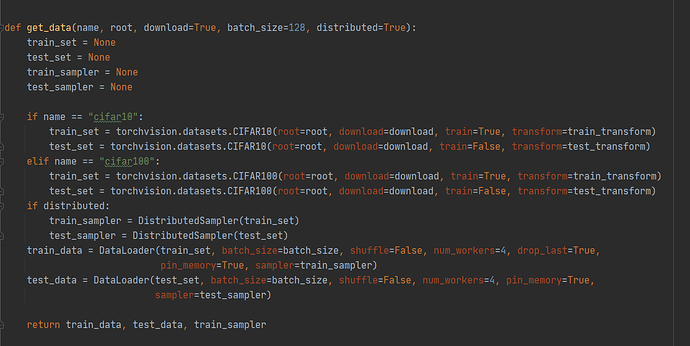
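For reference, `convert_sync_batchnorm` only rewrites the module tree and does no communication by itself. This minimal sketch uses a hypothetical toy model in place of the poster's `net`. It shows every `BatchNorm2d`, including nested ones, being swapped for `SyncBatchNorm`:

```python
import torch.nn as nn

# Hypothetical toy model standing in for the poster's `net`.
net = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.BatchNorm2d(8),
    nn.ReLU(),
    nn.Sequential(nn.Conv2d(8, 8, kernel_size=3), nn.BatchNorm2d(8)),  # nested BN
)

# Classmethod that walks the module tree recursively and replaces every
# BatchNorm*D layer with a SyncBatchNorm layer (no process group needed here).
net = nn.SyncBatchNorm.convert_sync_batchnorm(net)

bn_types = [type(m).__name__ for m in net.modules() if "BatchNorm" in type(m).__name__]
print(bn_types)  # ['SyncBatchNorm', 'SyncBatchNorm']
```

In a DDP script the conversion is normally done after `init_process_group` and before wrapping the model in `DistributedDataParallel`. The collectives only happen later, when the converted layers run in training mode.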

It seems to be a problem with the DataLoader. The relevant code is as follows:

Could anyone kindly help me? Thanks.
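Since the DataLoader is suspected, one possibility worth ruling out is that the processes run different numbers of iterations. This is an assumption and is not confirmed in this thread. If one rank finishes its loop early, it stops calling SyncBatchNorm's collectives, and the remaining ranks block while waiting for it. The sketch below uses a hypothetical 10-sample dataset and an assumed world size of 3, passed explicitly so that no process group is needed. It checks that `DistributedSampler` gives every rank the same number of batches:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset
from torch.utils.data.distributed import DistributedSampler

# Hypothetical dataset of 10 samples, deliberately not divisible by world_size.
dataset = TensorDataset(torch.arange(10).float().unsqueeze(1))
world_size = 3  # assumed number of processes

batches_per_rank = []
for rank in range(world_size):
    # With drop_last=False (the default), DistributedSampler pads its index
    # list so every rank receives the same number of samples (4 here).
    sampler = DistributedSampler(dataset, num_replicas=world_size, rank=rank, shuffle=False)
    loader = DataLoader(dataset, batch_size=2, sampler=sampler)
    batches_per_rank.append(len(loader))

print(batches_per_rank)  # [2, 2, 2]
```

In a real job, each process builds only its own sampler. When the process group is already initialized, `num_replicas` and `rank` default to the group's world size and rank.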

mrshenli (Shen Li)


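The difference between BatchNorm and SyncBatchNorm is that SyncBatchNorm communicates across processes. It uses `torch.distributed` collectives to gather the batch statistics in the forward pass and `torch.distributed.all_reduce` in the backward pass, so every process must reach these calls for any of them to proceed.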
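To see why the statistics need cross-process communication at all, the sketch below simulates three processes in plain Python, using hypothetical data for a single channel. Each process alone only sees its own slice of the batch. Summing every process's count, sum, and sum of squares (what a sum all_reduce would do) recovers the mean and variance of the whole global batch. This illustrates the idea only: SyncBatchNorm's actual implementation gathers per-process mean and inverse std and combines them with a different formula.

```python
import statistics

# Hypothetical per-process mini-batches for one channel (3 simulated "ranks").
per_rank_batches = [
    [0.5, 1.5, 2.0],
    [3.0, 4.0],
    [-1.0, 0.0, 1.0, 2.0],
]

# Each process computes local partial sums: (count, sum, sum of squares).
local_stats = [(len(b), sum(b), sum(x * x for x in b)) for b in per_rank_batches]

# A sum all_reduce would leave every process holding these element-wise totals.
count = sum(s[0] for s in local_stats)
total = sum(s[1] for s in local_stats)
total_sq = sum(s[2] for s in local_stats)

global_mean = total / count
global_var = total_sq / count - global_mean ** 2  # biased variance, as used for normalization

# Compare against statistics computed over the concatenated global batch.
full_batch = [x for b in per_rank_batches for x in b]
print(global_mean, statistics.mean(full_batch))
print(global_var, statistics.pvariance(full_batch))
```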

Two questions:

- What args and env vars did you pass to `init_process_group`?
- In your program, is there any other code that launches communication ops?
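As context for the first question, a typical `env://` initialization reads `MASTER_ADDR`, `MASTER_PORT`, `RANK`, and `WORLD_SIZE` from the environment. The sketch below assumes a single CPU process, the gloo backend, and a hypothetical port so that it can run stand-alone. It is not the poster's configuration. The `all_reduce` stands in for any communication op covered by the second question.

```python
import os
import torch
import torch.distributed as dist

# Assumed single-process defaults for illustration; a launcher such as
# torchrun normally sets these variables for each process.
os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
os.environ.setdefault("MASTER_PORT", "29500")  # hypothetical free port
os.environ.setdefault("RANK", "0")
os.environ.setdefault("WORLD_SIZE", "1")

# "env://" makes init_process_group read the variables above.
# gloo runs on CPU; multi-GPU jobs typically use backend="nccl".
dist.init_process_group(backend="gloo", init_method="env://")

rank = dist.get_rank()
world_size = dist.get_world_size()

# Every collective (this all_reduce, and the ones inside SyncBatchNorm) must be
# called by all ranks in the same order. A rank that skips one, e.g. inside an
# `if rank == 0:` branch, leaves the other ranks blocked.
t = torch.ones(1) * (rank + 1)
dist.all_reduce(t, op=dist.ReduceOp.SUM)
print(rank, world_size, t.item())

dist.destroy_process_group()
```

If you launch the script with `torchrun --nproc_per_node=2 script.py`, the same code runs on two ranks, and `t.item()` is 3.0 on both.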