As far as I understand, CosineAnnealingLR is by default meant to change the learning rate after each epoch, with T_max set to the expected number of epochs.

But after reading "A Newbie's Guide to Stochastic Gradient Descent With Restarts", I wonder whether it's possible to adjust the learning rate per iteration, regardless of the current epoch.

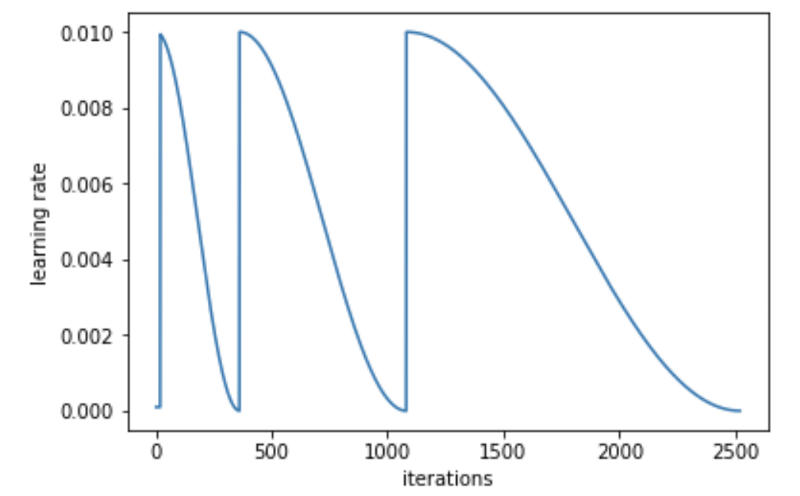

My assumption is that I just need to set T_max = epoch_count * iterations_per_epoch and call scheduler.step() after every iteration, but I'd like to confirm that.
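To make the question concrete, here is a minimal sketch of what I have in mind. The model, data, and the values of epoch_count and iterations_per_epoch are placeholders I made up for illustration; only the scheduler wiring matters.

```python
import torch
from torch import nn
from torch.optim.lr_scheduler import CosineAnnealingLR

# Hypothetical values, chosen only to keep the example small.
epoch_count = 3
iterations_per_epoch = 4
total_steps = epoch_count * iterations_per_epoch

model = nn.Linear(2, 1)  # placeholder model
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# T_max counts scheduler.step() calls, so here it is measured in iterations.
scheduler = CosineAnnealingLR(optimizer, T_max=total_steps, eta_min=0.0)

lrs = []
for epoch in range(epoch_count):
    for it in range(iterations_per_epoch):
        # Record the learning rate used for this iteration.
        lrs.append(optimizer.param_groups[0]["lr"])

        optimizer.zero_grad()
        loss = model(torch.randn(8, 2)).pow(2).mean()  # dummy loss on random data
        loss.backward()
        optimizer.step()

        # Step the scheduler once per iteration instead of once per epoch.
        scheduler.step()

final_lr = optimizer.param_groups[0]["lr"]
```

If this works the way I expect, lrs should fall along a single half cosine from 0.1 at the first iteration toward eta_min at the last one, with no change in behaviour at epoch boundaries.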

Also, are there built-in tools to change the length of each restart (cycle)?
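One candidate I came across is torch.optim.lr_scheduler.CosineAnnealingWarmRestarts, which takes T_0 (length of the first cycle) and T_mult (integer factor by which each following cycle grows). Below is a rough sketch of how I think it would be used per iteration. The numbers are arbitrary, and I am assuming that T_0 is counted in scheduler.step() calls when step() is called without arguments.

```python
import torch
from torch import nn
from torch.optim.lr_scheduler import CosineAnnealingWarmRestarts

model = nn.Linear(2, 1)  # placeholder model
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# First cycle lasts T_0 steps; each later cycle is T_mult times longer.
scheduler = CosineAnnealingWarmRestarts(optimizer, T_0=2, T_mult=2, eta_min=0.0)

warm_lrs = []
for step in range(14):  # 2 + 4 + 8 steps, i.e. three full cycles
    warm_lrs.append(optimizer.param_groups[0]["lr"])
    optimizer.step()    # no gradients here; the call only keeps the step order valid
    scheduler.step()

# Steps at which the learning rate is back at its initial value.
restarts = [i for i, lr in enumerate(warm_lrs) if abs(lr - 0.1) < 1e-9]
```

With these settings I would expect the restarts to land at steps 0, 2, and 6, so the cycle lengths are 2, 4, and 8 iterations.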