My model looks like this:

    import torch.nn as nn

    def conv_layer(ni, nf, kernel_size=3, stride=1):
        # Conv -> BatchNorm -> LeakyReLU; padding keeps the spatial size when stride=1
        return nn.Sequential(
            nn.Conv2d(ni, nf, kernel_size=kernel_size, bias=False,
                      stride=stride, padding=kernel_size // 2),
            nn.BatchNorm2d(nf, momentum=0.01),
            nn.LeakyReLU(negative_slope=0.1, inplace=True),
        )

    class block1(nn.Module):
        def __init__(self, ni):
            super().__init__()
            self.conv1 = conv_layer(ni, ni // 2, kernel_size=1)
            self.conv2 = conv_layer(ni // 2, ni, kernel_size=3)
            # the classifier expects conv2 output of shape (ni, 8, 4)
            self.classifier = nn.Linear(ni * 8 * 4, 751)

        def forward(self, x):
            x = self.conv2(self.conv1(x))
            x = x.view(x.size(0), -1)
            return self.classifier(x)

    class block2(nn.Module):
        def __init__(self, ni):
            super().__init__()
            self.conv1 = conv_layer(ni, ni // 2, kernel_size=1)
            self.conv2 = conv_layer(ni // 2, ni, kernel_size=3)
            self.classifier = nn.Linear(ni * 8 * 4, 1360)

        def forward(self, x):
            x = self.conv2(self.conv1(x))
            x = x.view(x.size(0), -1)
            return self.classifier(x)

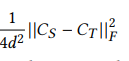

I want to take the features from the conv2 layer of both block1 and block2 and apply a Frobenius norm loss like this:

X =

where Cs denotes the features from the conv2 layer of block2 and Ct denotes the features from the conv2 layer of block1.

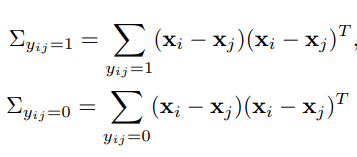

Covariance is

Xi and Xj are the features from different images.
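To make the covariance step concrete, here is a minimal sketch. The exact formula is in the image and is not reproduced in the text, so this assumes the usual sample covariance: each image's conv2 output is flattened to a vector, and the covariance is taken across the images in the batch. `feature_covariance` is a hypothetical helper name, not something from the model above.

```python
import torch

def feature_covariance(feats: torch.Tensor) -> torch.Tensor:
    """Sample covariance across a batch of features (assumed definition).

    feats: (N, C, H, W) or (N, D), one entry per image.
    Returns a (D, D) matrix, where D is the flattened feature size.
    """
    x = feats.flatten(start_dim=1)        # (N, D): one row per image
    x = x - x.mean(dim=0, keepdim=True)   # centre every feature dimension
    n = x.size(0)
    return x.t() @ x / max(n - 1, 1)      # unbiased estimate; guard against N == 1

# Stand-in for conv2 features of 5 images with ni=3 and a 2x2 spatial size.
feats = torch.randn(5, 3, 2, 2)
cov = feature_covariance(feats)           # shape (12, 12)
```

Note that with the full `ni*8*4` feature size the matrix is `(ni*8*4) x (ni*8*4)`, which can get large; pooling over the spatial dimensions first would be one way to shrink it, if that still matches the intended formula.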

I have to take the covariance of the positive samples only. Then, using the hinge loss max(0, X), I want to add this term to my cross-entropy loss function.
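One way the combined loss could be wired up is sketched below. Several things here are assumptions, since the formula images are not available in the text: X is taken to be the squared Frobenius norm of the difference between the two covariance matrices, "positive samples" are selected by a boolean mask per batch, and `weight`, `cov_of_positives` and `combined_loss` are hypothetical names. The random tensors stand in for the conv2 outputs, which could be obtained as `block.conv2(block.conv1(x))` before flattening.

```python
import torch
import torch.nn.functional as F

def cov_of_positives(feats, positive_mask):
    """Covariance (D, D) over the flattened features of the positive samples."""
    x = feats[positive_mask].flatten(start_dim=1)   # (P, D)
    return torch.cov(x.t())                         # torch.cov expects variables in rows

def combined_loss(logits, labels, feat_s, feat_t, pos_s, pos_t, weight=1.0):
    """Cross entropy plus a hinge on the covariance distance (assumed form)."""
    ce = F.cross_entropy(logits, labels)
    # A sample covariance needs at least two positives on each side.
    if pos_s.sum() < 2 or pos_t.sum() < 2:
        return ce
    diff = cov_of_positives(feat_s, pos_s) - cov_of_positives(feat_t, pos_t)
    x = diff.pow(2).sum()                 # squared Frobenius norm, assumed to be X
    hinge = torch.clamp(x, min=0.0)       # max(0, X)
    return ce + weight * hinge

torch.manual_seed(0)
feat_t = torch.randn(8, 4, 8, 4, requires_grad=True)  # stand-in: block1 conv2 output
feat_s = torch.randn(6, 4, 8, 4)                      # stand-in: block2 conv2 output
logits = torch.randn(8, 751, requires_grad=True)      # stand-in: block1 classifier output
labels = torch.randint(0, 751, (8,))
pos_t = torch.tensor([1, 1, 0, 1, 0, 1, 1, 0], dtype=torch.bool)
pos_s = torch.tensor([1, 0, 1, 1, 1, 0], dtype=torch.bool)

loss = combined_loss(logits, labels, feat_s, feat_t, pos_s, pos_t, weight=0.1)
loss.backward()                           # gradients reach both logits and feat_t
```

Under this assumed form X is never negative, so the clamp changes nothing; the hinge only matters if the X in the image includes a margin or other terms. Since `forward` in the blocks returns only the classifier output, it would also need to return the conv2 features (or a forward hook could capture them) for this to be used in training.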