When I iterate over `model.parameters()` twice, it only works the first time. I think this is because `model.parameters()` returns a generator. Is there any way to work around this?

```python
# working
for param in model.parameters():
    par1.append(param.grad.view(-1))

# Not working
for param in model.parameters():
    par2.append(param.grad.view(-1))
```
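Here is a minimal, self-contained sketch of the setup. It assumes a small `nn.Linear` stands in for `model` and that a dummy loss is used to populate `.grad`; neither comes from the original post. Each call to `model.parameters()` returns a fresh generator, so two separate loops both see every parameter. Only a generator that is stored in a variable and then reused is exhausted after the first pass:

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for the model in the question
model = nn.Linear(3, 2)
loss = model(torch.randn(4, 3)).sum()
loss.backward()  # populates param.grad for weight and bias

par1, par2 = [], []

# Two separate calls: each one creates a new generator over all parameters
for param in model.parameters():
    par1.append(param.grad.view(-1))
for param in model.parameters():
    par2.append(param.grad.view(-1))
print(len(par1), len(par2))  # 2 2

# Reusing ONE stored generator: the second pass finds it already exhausted
gen = model.parameters()
first = [p for p in gen]
second = [p for p in gen]
print(len(first), len(second))  # 2 0
```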

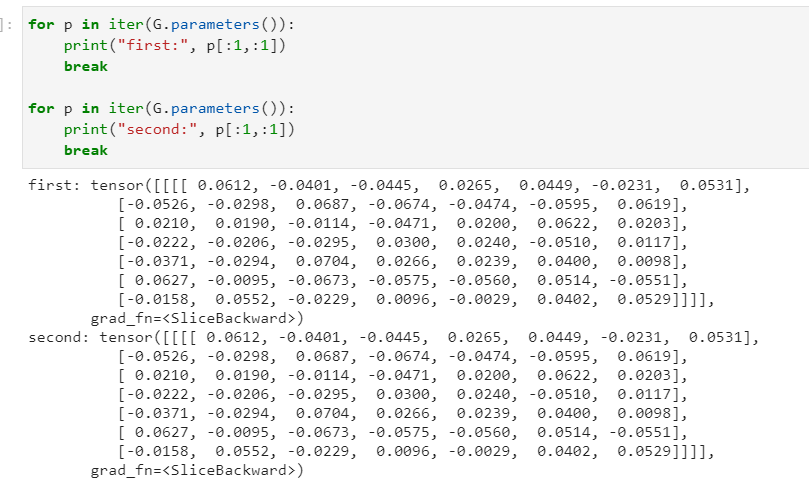

I was not able to reproduce this; it seems to work fine for me.

But you could try to create iterators from the generators and use them:

```python
for param in iter(model.parameters()):
    par1.append(param.grad.view(-1))

for param in iter(model.parameters()):
    par2.append(param.grad.view(-1))
```
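For context, a generator is already its own iterator. Calling `iter()` on it returns the very same object, so this does not make a stored generator re-iterable. What does help is materializing the items once, for example with `list()`. Below is a plain-Python sketch in which the hypothetical `fake_parameters()` generator stands in for `model.parameters()`:

```python
def fake_parameters():
    # Hypothetical stand-in for model.parameters(): yields items lazily
    yield "weight"
    yield "bias"

gen = fake_parameters()
same = iter(gen) is gen  # True: iter() on a generator returns the generator itself

list(gen)             # the first pass consumes the generator
leftover = list(gen)  # the second pass gives [] because it is exhausted

params = list(fake_parameters())  # materialize once
pass1 = [p for p in params]
pass2 = [p for p in params]       # a list can be iterated any number of times
print(same, leftover, pass1 == pass2)  # True [] True
```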

If that doesn’t work, you could look into `copy.copy` and `copy.deepcopy` to copy the parameters.

Could you please share your runnable example?

It is not working properly. It only appears to work because of the `break`. Without the `break`, we are back to the same problem I asked about.

The `break` is only there for demonstration purposes. Without it, all parameters are listed twice for me. Notice how all elements are identical in my example above.

My only guess is that you are running an older version of PyTorch, or that your model implements a custom `parameters()` method that behaves differently from the default `nn.Module` one.
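To illustrate the second possibility, here is a sketch of a hypothetical model (`CachedParamsModel` is invented for this example and is not part of PyTorch) whose `parameters()` override caches a single generator. With this override, a second loop comes up empty, while a default `nn.Module` returns a fresh generator on every call:

```python
import torch.nn as nn

class CachedParamsModel(nn.Module):
    """Hypothetical model whose parameters() override caches one generator.

    This is NOT how nn.Module behaves; it only shows how a custom
    parameters() could make the second loop see no parameters.
    """

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(3, 2)
        self._param_gen = None  # cached generator (the bug)

    def parameters(self, recurse=True):
        if self._param_gen is None:
            self._param_gen = super().parameters(recurse)
        return self._param_gen  # same generator object on every call

    def forward(self, x):
        return self.fc(x)


custom = CachedParamsModel()
n1 = len(list(custom.parameters()))
n2 = len(list(custom.parameters()))
print(n1, n2)  # 2 0

default = nn.Linear(3, 2)
print(len(list(default.parameters())), len(list(default.parameters())))  # 2 2
```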

As I said, you can try copying the entire model or its parameters so that you have two identical objects to loop through, e.g.:

```python
from copy import deepcopy

my_parameters = list(iter(model.parameters()))
my_parameters2 = deepcopy(my_parameters)

for param in my_parameters:
    par1.append(param.grad.view(-1))

for param in my_parameters2:
    par2.append(param.grad.view(-1))
```
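If the goal is only to loop over the same parameters twice, copying may not be needed at all. A list built once from `model.parameters()` holds references to the parameters and can be iterated repeatedly. The sketch below again assumes a small `nn.Linear` as a hypothetical stand-in for `model`, and uses `torch.cat` to flatten each collection into one gradient vector for comparison:

```python
import torch
import torch.nn as nn

model = nn.Linear(3, 2)  # hypothetical stand-in for the real model
model(torch.randn(4, 3)).sum().backward()

params = list(model.parameters())  # references to the live parameters, no copy

par1 = [p.grad.view(-1) for p in params]
par2 = [p.grad.view(-1) for p in params]  # same list, the second pass works

flat1 = torch.cat(par1)  # one vector of all gradients (6 weight + 2 bias)
flat2 = torch.cat(par2)
print(flat1.shape, torch.equal(flat1, flat2))  # torch.Size([8]) True
```

Unlike `deepcopy`, this allocates no new parameter tensors, so later updates to the parameters are visible through `params` as well.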