Hi, I have a question about NVIDIA apex.

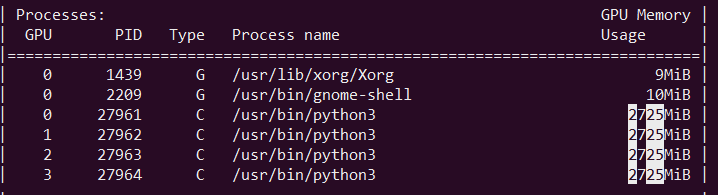

As I understand it, distributed training with NVIDIA apex runs one process per GPU, like this:

so each process is identified by the `local_rank` variable in my code.
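For reference, here is a minimal sketch of how a per-process `local_rank` is commonly obtained in apex-style scripts. It assumes the processes are started with `torch.distributed.launch` (which passes `--local_rank=N` to each process) or with `torchrun` (which sets the `LOCAL_RANK` environment variable instead). The helper name `parse_local_rank` is hypothetical.

```python
import argparse
import os


def parse_local_rank(argv=None):
    """Hypothetical helper: return this process's local rank (its GPU index on the node)."""
    parser = argparse.ArgumentParser()
    # torch.distributed.launch passes --local_rank=N to every process it spawns;
    # otherwise fall back to LOCAL_RANK (set by torchrun), and finally to 0.
    parser.add_argument(
        "--local_rank",
        type=int,
        default=int(os.environ.get("LOCAL_RANK", 0)),
    )
    # parse_known_args ignores the script's other command-line options.
    args, _ = parser.parse_known_args(argv)
    return args.local_rank
```

With 2 GPUs, the launcher starts two copies of the script; one sees `local_rank == 0` and the other `local_rank == 1`, and each typically calls `torch.cuda.set_device(local_rank)` before building the model.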

I want to save the best accuracy from each process, so I wrote the code below.

When using 2 GPUs:

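```python
import torch

best_acc = 0.0  # one copy of this variable lives in each process


def save_best(test_acc):
    global best_acc  # needed because best_acc is reassigned below

    # first GPU (local_rank 0)
    if args.local_rank == 0:
        is_best = test_acc > best_acc
        best_acc = max(test_acc, best_acc)
        if is_best:
            torch.save(...)

    # second GPU (local_rank 1)
    if args.local_rank == 1:
        is_best = test_acc > best_acc
        best_acc = max(test_acc, best_acc)
        if is_best:
            torch.save(...)


for epoch in range(0, args.epoch):
    train()
    test_acc = test()
    ...
    save_best(test_acc)
```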
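Since both branches above do the same thing, the per-rank logic can be written once and keyed on `local_rank`. The sketch below is an illustration, not a fix for the accuracy mismatch: the class `BestTracker` and the file-name pattern `best_rank{N}.pth` are hypothetical.

```python
class BestTracker:
    """Tracks the best test accuracy seen by one process (one GPU)."""

    def __init__(self, local_rank):
        self.local_rank = local_rank
        self.best_acc = float("-inf")

    def checkpoint_path(self):
        # Hypothetical naming scheme: one checkpoint file per rank.
        return f"best_rank{self.local_rank}.pth"

    def update(self, test_acc):
        """Record test_acc and return True if it is a new best for this rank."""
        is_best = test_acc > self.best_acc
        self.best_acc = max(test_acc, self.best_acc)
        return is_best
```

In the training loop this would be used as `if tracker.update(test_acc): torch.save(model.state_dict(), tracker.checkpoint_path())`, with one `tracker = BestTracker(args.local_rank)` created per process.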

After one epoch, I can check the accuracy reported by each process:

GPU 0's accuracy is 19.906, and its weights are saved to GPU 0's weight file.

GPU 1's accuracy is 19.269, and its weights are saved to GPU 1's weight file.

But when I load each weight file into the network and test it, the accuracy does not match the value reported during training.

I got 19.572 (GPU 0's file) and 19.561 (GPU 1's file).

Surprisingly, when I train on a single GPU, this does not happen: the test accuracy reported during training equals the accuracy I get after loading the weight file.
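One thing worth checking, assuming the test loader uses a `DistributedSampler` (the loader is not shown above): each process would then score only its own shard of the test set, while loading a weight file and testing afterwards scores the whole set. The full-set accuracy is the sample-weighted mean of the shard accuracies, as in this sketch. The shard sizes of 5000 are hypothetical; in a real run the per-rank correct and total counts would typically be summed across processes with `torch.distributed.all_reduce`.

```python
def combined_accuracy(shard_accs, shard_sizes):
    """Sample-weighted mean of per-shard accuracies (in percent)."""
    total = sum(shard_sizes)
    return sum(acc * n for acc, n in zip(shard_accs, shard_sizes)) / total


# Per-process accuracies from the 2-GPU run, with hypothetical equal shard sizes.
print(combined_accuracy([19.906, 19.269], [5000, 5000]))
```

With equal shards this gives 19.5875, which is close to, but not exactly, the 19.572 and 19.561 measured from the saved files, so shard-level evaluation may not be the only factor.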

I can't understand why this happens.

Can anybody help?