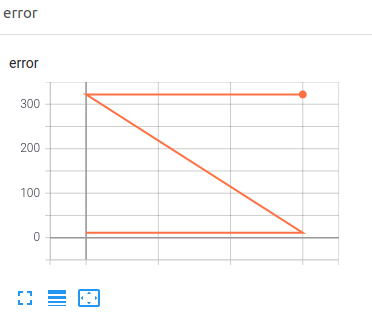

Yes, you are right. Just as a note: when using this we have to bear in mind that, as you say, each process writes its own log file and TB aggregates all of them for visualization. The problem is that if multiple values get written for the same variable, you end up with crazy charts like the one in the image above.

This can be solved by using the RANK variable, so that only one process writes a log file. For example, I have done something like:

    if args.rank == 0:
        # Only the rank-0 process creates a writer and logs values.
        log_dir = os.path.join(args.output_dir, 'logs')
        logger = SummaryWriter(log_dir)
        print('writing to {}'.format(log_dir))
        logger.add_scalar('error', value, 0)
        logger.add_scalar('error', value, 1)
        logger.add_scalar('error', value, 2)
        logger.add_scalar('error', value, 3)
        logger.close()
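One way to avoid repeating the rank check around every logging call is to give the other ranks a writer that does nothing. This is only a rough sketch: `NullWriter` and `make_logger` are names I made up for illustration, and `writer_factory` is assumed to be something like `SummaryWriter` (from `torch.utils.tensorboard` or `tensorboardX`, whichever you use).

```python
import os


class NullWriter:
    """Hypothetical no-op stand-in so non-zero ranks can call the same methods."""

    def add_scalar(self, tag, value, step):
        pass  # intentionally discard the value

    def close(self):
        pass


def make_logger(rank, output_dir, writer_factory):
    """Return a real writer on rank 0 and a no-op writer on every other rank.

    `writer_factory` is any callable that takes a log directory,
    e.g. SummaryWriter (an assumption, not something this helper checks).
    """
    if rank == 0:
        return writer_factory(os.path.join(output_dir, 'logs'))
    return NullWriter()
```

With this, every process can call `logger = make_logger(args.rank, args.output_dir, SummaryWriter)` and then `logger.add_scalar('error', value, step)` unconditionally, but only rank 0 actually writes an event file.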

I am curious how you deal with this. Is there a more interesting way of doing it?