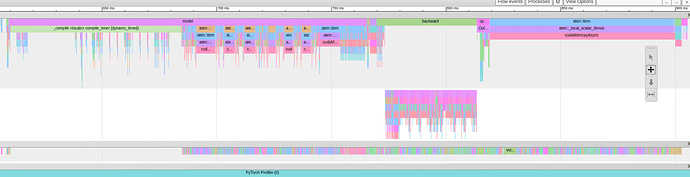

I compiled my model with torch.compile and see significant speedups. However, when profiling the network I also see a long call to _compile.<locals>.compile_inner at the beginning of each iteration (each call to the model), with no activity on the GPU during that time. What is happening there? This profile was taken after 60 iterations, so it is definitely not one-time initial compilation.

Using torch.compile there's a long call without any CUDA activity at the beginning of each iteration

That’s a good indication that torch.compile is probably recompiling, which is what’s showing up in the trace.

One way to diagnose why you’re still seeing compiles happen after 60 iterations would be to run your code with:

TORCH_LOGS="recompiles,graph_breaks"

This should give you some insight into what changes from one iteration to the next and prevents torch.compile from reusing previously compiled artifacts.

Thanks a lot for your help!

Indeed, these were recompiles. Removing all graph breaks with the help of torch._dynamo.explain resolved the issue.
