Hello,

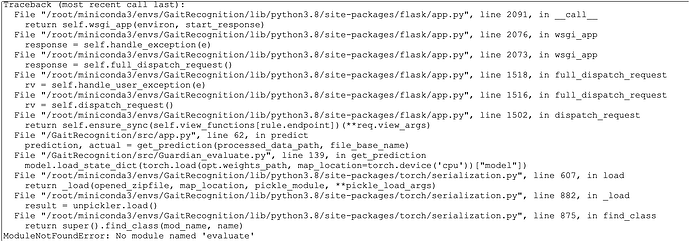

I’m seeing the following error when trying to load a pre-trained model inside a Docker image using torch.load():

ModuleNotFoundError: No module named 'evaluate'

It looks like this happens during the unpickler.load() step in torch/serialization.py (see the attached image for a more detailed description).

My code looks like this:

model.load_state_dict(torch.load("path/to/model.pth", map_location=torch.device('cpu')), strict=False)


Did you store the model object directly in model.pth, or anything else which might depend on the actual file structure? Based on the error, it seems torch.load itself is failing because of a change in the source files, which could happen, e.g., if the model object is stored directly (instead of its state_dict).
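To illustrate why a directly stored object depends on the source files, here is a minimal sketch using only the standard-library pickle module, which torch.load relies on for unpickling. The module name `evaluate_demo` and the class `Net` are hypothetical stand-ins, not the names from the original project. Pickle stores only a reference to the class's module, so loading fails once that module is no longer importable, while plain data loads fine.

```python
import importlib
import pickle
import sys
import tempfile
from pathlib import Path


def demo():
    """Pickle an object and plain data, then unpickle both without the source file."""
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical module standing in for a project file such as evaluate.py.
        Path(tmp, "evaluate_demo.py").write_text(
            "class Net:\n"
            "    def __init__(self):\n"
            "        self.weights = [1.0, 2.0]\n"
        )
        sys.path.insert(0, tmp)
        importlib.invalidate_caches()
        module = importlib.import_module("evaluate_demo")
        net = module.Net()

        # The pickle of the object records a reference to "evaluate_demo.Net".
        whole_object = pickle.dumps(net)
        # The pickle of plain data only references built-in types.
        plain_data = pickle.dumps({"weights": net.weights})

        # Simulate an environment where the source file is absent.
        sys.path.remove(tmp)
        del sys.modules["evaluate_demo"]
        importlib.invalidate_caches()

    try:
        pickle.loads(whole_object)
        error = None
    except ModuleNotFoundError as exc:
        error = str(exc)

    return error, pickle.loads(plain_data)


if __name__ == "__main__":
    error, data = demo()
    print("loading the object failed with:", error)
    print("loading plain data worked:", data)
```

The same reasoning would apply to any custom object stored inside a checkpoint, not only to the model itself.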

Hi,

No, the state_dict is what was used to save the model during training. I should mention that everything works fine when I’m running my application on my local machine.

Since it works on your local machine, it would indicate that some source files might still be needed to load the file. You are indexing the loaded object (probably a dict) via ['model']. What are the other objects stored in the dict? Could you save the state_dict alone and check whether loading it works?
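The check suggested above could be sketched roughly as follows. This is only an illustration under assumptions: the `nn.Linear` model, the file names, and the `'epoch'` entry are hypothetical, since the thread does not show what the real checkpoint contains. Run the inspection and re-save step on the machine where loading still works, then copy only the new file into the Docker image.

```python
import os
import tempfile

import torch
from torch import nn

# Hypothetical stand-in for the real architecture.
model = nn.Linear(4, 2)

with tempfile.TemporaryDirectory() as tmp:
    ckpt_path = os.path.join(tmp, "checkpoint.pth")        # hypothetical file name
    weights_path = os.path.join(tmp, "weights_only.pth")   # hypothetical file name

    # Assumed checkpoint layout: a dict with the state_dict under 'model'.
    torch.save({"model": model.state_dict(), "epoch": 3}, ckpt_path)

    # Step 1: list what the checkpoint dict actually contains.
    checkpoint = torch.load(ckpt_path, map_location="cpu")
    for key, value in checkpoint.items():
        print(key, type(value))

    # Step 2: save the state_dict alone, without any other objects.
    torch.save(checkpoint["model"], weights_path)

    # Step 3: load the stripped-down file into a freshly built model.
    state_dict = torch.load(weights_path, map_location="cpu")
    fresh_model = nn.Linear(4, 2)
    fresh_model.load_state_dict(state_dict)
    loaded_keys = sorted(state_dict.keys())
```

If any entry printed in step 1 is a custom class rather than tensors, numbers, or plain containers, that entry is a likely candidate for the missing-module error.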