Hello,

I am trying to perform transformations using torchvision.transforms (specifically transforms.RandomAdjustSharpness) on images that are currently stored as numpy arrays. I have experimented with many ways of doing this, but each seems to have its own issues.

Method 1: Converting the numpy array to a torch tensor, then applying the transformation.

The documentation for RandomAdjustSharpness says that the input image can be a tensor. However:

import numpy as np

import torch

from torchvision import transforms

img = np.load('file.npy').newbyteorder().byteswap() # float32

img = torch.from_numpy(img)

transf = transforms.RandomAdjustSharpness(sharpness_factor=2, p=1)

img = transf(img)

results in:

File ~/miniconda3/envs/general/lib/python3.10/site-packages/torchvision/transforms/transforms.py:1961, in RandomAdjustSharpness.forward(self, img)

1953 """

1954 Args:

1955 img (PIL Image or Tensor): Image to be sharpened.

(...)

1958 PIL Image or Tensor: Randomly sharpened image.

1959 """

...

828 ],

829 )

830 result_tmp = conv2d(result_tmp, kernel, groups=result_tmp.shape[-3])

IndexError: tuple index out of range

This post (which is also referenced here) seems to imply that the transform assumes a batch dimension, so adding an extra dimension to the tensor should work. However, their solution:

img = np.load('file.npy').newbyteorder().byteswap()

img = torch.from_numpy([img])

transf = transforms.RandomAdjustSharpness(sharpness_factor=2, p=1)

img = transf(img)

results in:

TypeError: expected np.ndarray (got list)
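torch.from_numpy only accepts an np.ndarray, so the extra dimension has to be added on the NumPy side (or with unsqueeze on the resulting tensor). It also refuses arrays whose byte order is not native, which is what the .newbyteorder().byteswap() idiom works around; ndarray.newbyteorder was removed in NumPy 2.0, so this sketch converts with astype and a native-order dtype instead. A small synthetic big-endian array is an assumption standing in for file.npy:

```python
import numpy as np
import torch

# Hypothetical stand-in for file.npy: a big-endian ('>f4') float32 array.
img = np.arange(12, dtype=np.float32).reshape(3, 4).astype('>f4')

# Convert to the machine's native byte order; the values are preserved.
native = img.astype(img.dtype.newbyteorder('='))

# from_numpy needs an ndarray, so add the leading dimension in NumPy...
batched = torch.from_numpy(native[np.newaxis])   # shape (1, 3, 4)
# ...or stack a list of arrays into one ndarray first.
stacked = torch.from_numpy(np.stack([native]))   # shape (1, 3, 4)
```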

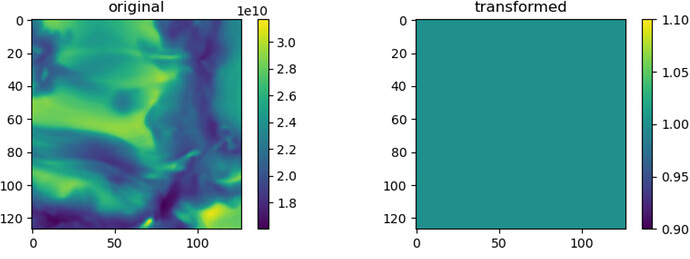

Using np.expand_dims “works”, but it produces a tensor of all ones, no matter which sharpness_factor I choose:

import matplotlib.pyplot as plt

img = np.load('file.npy').newbyteorder().byteswap()

og_img = np.copy(img)

img = torch.from_numpy(np.expand_dims(img, axis=0))

transf = transforms.RandomAdjustSharpness(sharpness_factor=2, p=1)

img = transf(img)[0]

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 3))

im1 = ax1.imshow(og_img); plt.colorbar(im1, ax=ax1); ax1.set_title('original')

im2 = ax2.imshow(img); plt.colorbar(im2, ax=ax2); ax2.set_title('transformed')

Method 2: Converting the numpy array to a PIL image, then applying the transformation.

Since I could not get this to work with a tensor, I tried converting to a PIL image first (the documentation says PIL images should also work). I convert to PIL using transforms.ToPILImage:

img = np.load('file.npy').newbyteorder().byteswap()

transf = transforms.ToPILImage()

img = transf(img)

Converting back to a numpy array with img = np.squeeze(transforms.functional.pil_to_tensor(img).numpy()) does reproduce the image I expect.

However, calling img.show() on the PIL image displays an all-white image, and applying the sharpening transform to the PIL image fails.

img = np.load('file.npy').newbyteorder().byteswap()

transf1 = transforms.ToPILImage()

transf2 = transforms.RandomAdjustSharpness(sharpness_factor=1, p=1)

img = transf1(img)

img = transf2(img)

results in:

File ~/miniconda3/envs/general/lib/python3.10/site-packages/torch/nn/modules/module.py:1194, in Module._call_impl(self, *input, **kwargs)

1190 # If we don't have any hooks, we want to skip the rest of the logic in

1191 # this function, and just call forward.

1192 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks

1193 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1194 return forward_call(*input, **kwargs)

1195 # Do not call functions when jit is used

1196 full_backward_hooks, non_full_backward_hooks = [], []

File ~/miniconda3/envs/general/lib/python3.10/site-packages/torchvision/transforms/transforms.py:1961, in RandomAdjustSharpness.forward(self, img)

1953 """

...

30 if image.mode == "P":

31 raise ValueError("cannot filter palette images")

---> 32 return image.filter(*self.filterargs)

ValueError: image has wrong mode

This error persists when I change the mode argument of ToPILImage(). Using mode="F" (since my numpy array's dtype is float32) gives the same "image has wrong mode" error. Changing to any other mode (e.g. "I") results in ValueError: Incorrect mode (I) supplied for input type . Should be F.

Applying transforms to numpy arrays seems like it should be a solved problem, so I am wondering what the correct way to do this is. Thank you so much for your help!