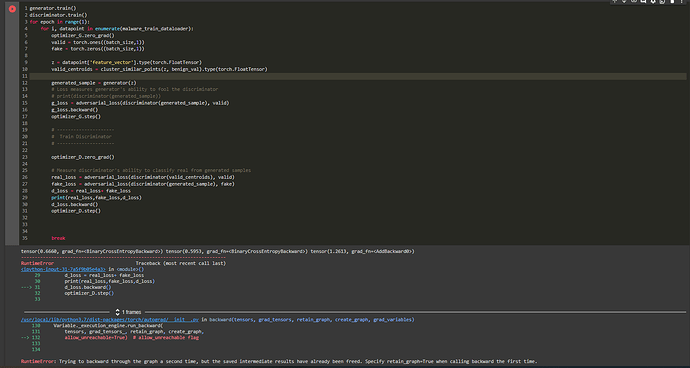

The following error arises when I add two loss functions together. On removing one of the loss functions it seems to work fine.

This post explains the error.

The generator's parameters were already updated by its optimizer step, but `d_loss.backward()` then tries to backpropagate through the generator a second time, using the stale forward activations that were computed before that update.

Call `.detach()` on `generated_sample` before passing it to the discriminator and it should work.
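Since the original code wasn't posted, here is only a minimal sketch of what the fix could look like. The models, optimizers, shapes, and every name other than `generated_sample` and `d_loss` are hypothetical stand-ins, and I'm assuming the generator step runs before the discriminator step:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-ins for the models in the question.
generator = nn.Linear(8, 4)
discriminator = nn.Sequential(nn.Linear(4, 1), nn.Sigmoid())
g_opt = torch.optim.SGD(generator.parameters(), lr=0.1)
d_opt = torch.optim.SGD(discriminator.parameters(), lr=0.1)
criterion = nn.BCELoss()

noise = torch.randn(16, 8)
real_sample = torch.randn(16, 4)
real_label = torch.ones(16, 1)
fake_label = torch.zeros(16, 1)

generated_sample = generator(noise)

# Generator step: gradients flow through the discriminator into the generator.
g_opt.zero_grad()
g_loss = criterion(discriminator(generated_sample), real_label)
g_loss.backward()
g_opt.step()  # updates the generator weights in place

# Discriminator step: two losses added together.
# .detach() cuts generated_sample off from the generator's graph, so
# backward() stops at the discriminator input and never revisits the
# generator's old activations.
d_opt.zero_grad()
d_real_loss = criterion(discriminator(real_sample), real_label)
d_fake_loss = criterion(discriminator(generated_sample.detach()), fake_label)
d_loss = d_real_loss + d_fake_loss
d_loss.backward()
d_opt.step()
```

With the detached tensor, `d_loss.backward()` only computes gradients for the discriminator's parameters, which is all the discriminator update needs.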

PS: you can post code snippets by wrapping them in three backticks ```, which makes debugging easier.
