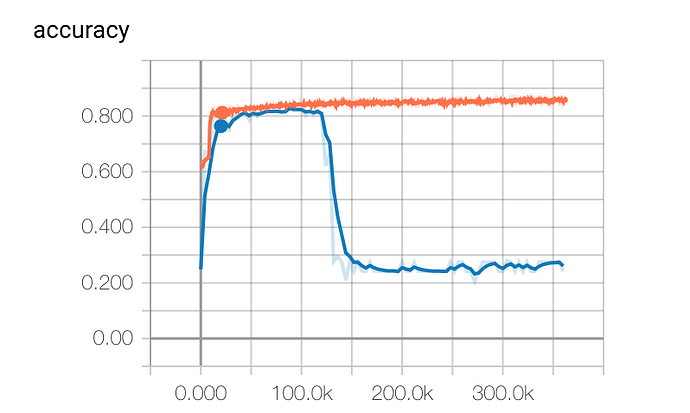

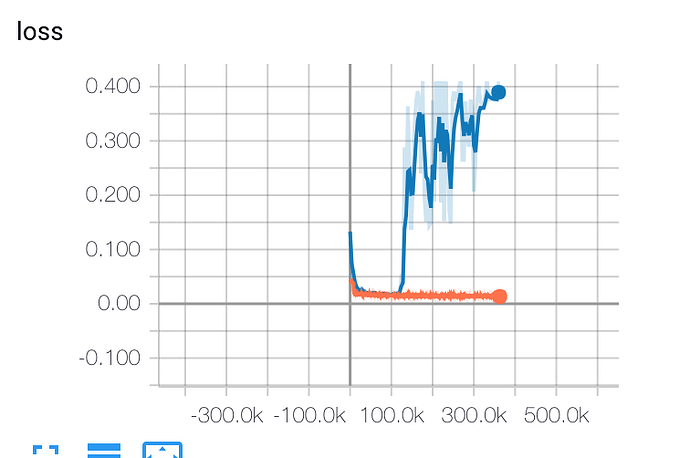

I am using a ResNet-50 modified to perform a regression task; its last few layers are fc -> dropout -> fc -> sigmoid.
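For readers who want something concrete, here is a minimal sketch of a head with that layer order. It assumes PyTorch, which the post does not actually state. The hidden width `HIDDEN` and dropout probability `DROPOUT_P` are made-up values for illustration; only the 2048-dimensional pooled feature size comes from the standard ResNet-50 architecture.

```python
import torch
from torch import nn

FEATURES = 2048   # size of ResNet-50's pooled feature vector
HIDDEN = 256      # hypothetical hidden width, not given in the post
DROPOUT_P = 0.5   # hypothetical dropout probability, not given in the post

# fc -> dropout -> fc -> sigmoid, in the order described above
head = nn.Sequential(
    nn.Linear(FEATURES, HIDDEN),
    nn.Dropout(DROPOUT_P),
    nn.Linear(HIDDEN, 1),
    nn.Sigmoid(),
)

# Run a dummy batch through the head in eval mode (dropout disabled).
head.eval()
with torch.no_grad():
    out = head(torch.randn(4, FEATURES))
print(out.shape)
```

Note that the final sigmoid restricts predictions to the range 0 to 1, so this layout only makes sense if the regression targets are scaled to that range.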

You can see the loss and accuracy charts above. Blue is validation and orange is training. I use the Adam optimizer with a learning rate of 1e-4, decayed by a factor of 0.8 each epoch (lr = 1e-4 * 0.8**epoch). I unfreeze only the last two layers for the first 2 epochs, and unfreeze all layers afterward. The weird behavior starts around epoch 10.
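To make the schedule easier to reason about, here is a small plain-Python sketch that prints the learning rate and which layers are trainable at each epoch. It assumes epochs are counted from 0 and that the decay is applied once per epoch; the function names `learning_rate` and `trainable_part` are just for illustration and are not from my training script.

```python
BASE_LR = 1e-4          # initial Adam learning rate from the post
DECAY = 0.8             # per-epoch decay factor from the post
HEAD_ONLY_EPOCHS = 2    # epochs during which only the last two layers train


def learning_rate(epoch):
    """Learning rate at a given epoch, assuming 0-based epoch counting."""
    return BASE_LR * DECAY ** epoch


def trainable_part(epoch):
    """Which part of the network is unfrozen at a given epoch."""
    return "last two layers" if epoch < HEAD_ONLY_EPOCHS else "all layers"


for epoch in range(12):
    print(f"epoch {epoch:2d}  lr={learning_rate(epoch):.2e}  "
          f"trainable={trainable_part(epoch)}")
```

Under these assumptions the learning rate at epoch 10 is about 1.07e-5, roughly a tenth of the starting value, and all layers have been trainable since epoch 2.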

Can anyone suggest what's going on here? I'm so confused. Thanks!