Hello everyone. For a multimodal sentiment classification task, the loss function is NLLLoss(weight=weight, reduction='sum'), computed as loss(pred*mask_, target) / torch.sum(self.weight[target]*mask_.squeeze()). The model output passed to the loss is produced by F.log_softmax, so it holds log-probabilities rather than raw logits. The loss can therefore be regarded as a masked, class-weighted cross-entropy loss.
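To make the loss description concrete, here is a minimal sketch of how such a masked, weighted NLL loss could be written. The class name `MaskedNLLLoss`, the tensor shapes, and the handling of `weight=None` are assumptions for illustration and are not taken from the actual training code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class MaskedNLLLoss(nn.Module):
    """Class-weighted NLL loss that ignores padded positions (illustrative sketch)."""

    def __init__(self, weight=None):
        super().__init__()
        self.weight = weight
        self.loss = nn.NLLLoss(weight=weight, reduction="sum")

    def forward(self, pred, target, mask):
        # Assumed shapes: pred (N, C) log-probabilities from F.log_softmax,
        # target (N,) class indices, mask (N,) with 1 = real item, 0 = padding.
        mask_ = mask.view(-1, 1).to(pred.dtype)
        # Zeroing the log-probabilities of padded rows removes them from the sum.
        summed = self.loss(pred * mask_, target)
        if self.weight is None:
            norm = mask_.sum()
        else:
            # Normalise by the total class weight of the unmasked targets.
            norm = torch.sum(self.weight[target] * mask_.squeeze(1))
        return summed / norm
```

Under these assumptions the result should match `F.cross_entropy` applied to the unmasked rows with the same class weights, which is one way to sanity-check that the loss itself is not the source of the problem.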

optimizer = optim.Adam(model.parameters(), lr=args.lr, weight_decay=args.l2)

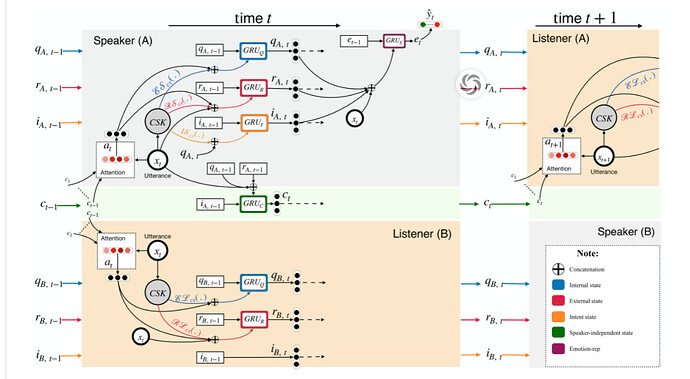

The architecture is composed of several GRU components (more details are included below).

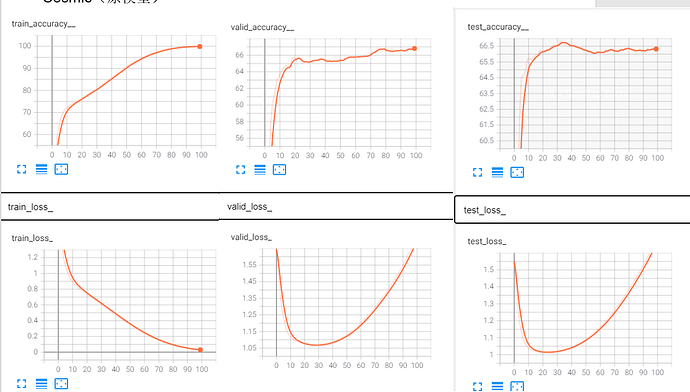

The validation loss decreases for the first few epochs and then keeps rising. The validation accuracy increases for the first few epochs, then fluctuates and declines. What is the problem in this situation? What steps should I follow to troubleshoot it? Is it a model error or something else? Thank you all.

Here is the model architecture.

model

CommonsenseGRUModel_bimodal(

(linear_in): Linear(in_features=1024, out_features=300, bias=True)

(norm1a): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(norm1b): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(norm1c): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(norm1d): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(norm3a): BatchNorm1d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm3b): BatchNorm1d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm3c): BatchNorm1d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm3d): BatchNorm1d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(dropout): Dropout(p=0.5, inplace=False)

(dropout_rec): Dropout(p=False, inplace=False)

(cs_rnn_f): CommonsenseRNN(

(dropout): Dropout(p=False, inplace=False)

(dialogue_cell): CommonsenseRNNCell(

(g_cell): GRUCell(600, 150)

(p_cell): GRUCell(918, 150)

(r_cell): GRUCell(1218, 150)

(i_cell): GRUCell(918, 150)

(e_cell): GRUCell(750, 450)

(dropout): Dropout(p=False, inplace=False)

(dropout1): Dropout(p=False, inplace=False)

(dropout2): Dropout(p=False, inplace=False)

(dropout3): Dropout(p=False, inplace=False)

(dropout4): Dropout(p=False, inplace=False)

(dropout5): Dropout(p=False, inplace=False)

(attention): SimpleAttention(

(scalar): Linear(in_features=150, out_features=1, bias=False)

)

)

)

(cs_rnn_r): CommonsenseRNN(

(dropout): Dropout(p=False, inplace=False)

(dialogue_cell): CommonsenseRNNCell(

(g_cell): GRUCell(600, 150)

(p_cell): GRUCell(918, 150)

(r_cell): GRUCell(1218, 150)

(i_cell): GRUCell(918, 150)

(e_cell): GRUCell(750, 450)

(dropout): Dropout(p=False, inplace=False)

(dropout1): Dropout(p=False, inplace=False)

(dropout2): Dropout(p=False, inplace=False)

(dropout3): Dropout(p=False, inplace=False)

(dropout4): Dropout(p=False, inplace=False)

(dropout5): Dropout(p=False, inplace=False)

(attention): SimpleAttention(

(scalar): Linear(in_features=150, out_features=1, bias=False)

)

)

)

(sense_gru): GRU(768, 384, bidirectional=True)

(matchatt): MatchingAttention(

(transform): Linear(in_features=900, out_features=900, bias=True)

)

(linear): Linear(in_features=900, out_features=300, bias=True)

(smax_fc): Linear(in_features=300, out_features=7, bias=True)

(linear_in_mixed_feature): Linear(in_features=1408, out_features=300, bias=True)

)