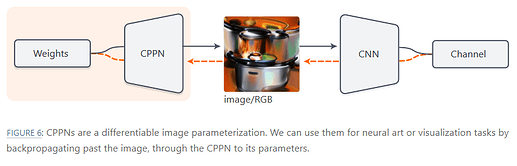

The following code is meant to create an image while allowing backpropagation through the method used to create it.

The code is meant to be used with a pretrained CNN like this:

When I run the code below:

```python
import torch
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as F

def create_input(input_size, mode=0):
    if mode == 0:
        if type(input_size) is not tuple and type(input_size) is not list:
            input_size = (input_size, input_size)
        w = torch.arange(0, input_size[1])
        h = torch.arange(0, input_size[0])
        w_exp = w.unsqueeze(1).expand((input_size[1], input_size[0])).true_divide(input_size[0]) - 0.5
        h_exp = h.unsqueeze(0).expand((input_size[1], input_size[0])).true_divide(input_size[1]) - 0.5
        return torch.stack((w_exp, h_exp), -1).permute(2,1,0).unsqueeze(0)
    elif mode == 1: # TensorFlow/Lucid Creation method
        if type(input_size) is tuple or type(input_size) is list:
            input_size = input_size[0]
        r = 3.0**0.5
        coord_range = torch.linspace(-r, r, input_size)
        y, x = torch.meshgrid(coord_range, coord_range)
        tensor = torch.stack((x, y), -1).unsqueeze(0).permute(0,3,1,2)
        return tensor

def cppn_image(size, num_channels=16, num_layers=9):
    tensor = nn.Parameter(create_input(size))
    weight_val = nn.Parameter(torch.randn(num_channels,2,1,1))
    tensor = F.conv2d(tensor, weight_val).tanh()
    for i in range(num_layers):
        weight_val = nn.Parameter(torch.randn(num_channels,num_channels,1,1))
        tensor = F.conv2d(tensor, weight_val).tanh()
    weight_val = nn.Parameter(torch.randn(3,num_channels,1,1))
    tensor = F.conv2d(tensor, weight_val).sigmoid()
    return tensor

img = cppn_image((512,460))
optimizer = optim.Adam([img])
```

I get this error message:

```
raise ValueError("can't optimize a non-leaf Tensor")
ValueError: can't optimize a non-leaf Tensor
```
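For context, the leaf/non-leaf distinction the error refers to can be reproduced in isolation. In `cppn_image`, each `nn.Parameter` is a leaf, but the function returns the output of `F.conv2d(...).sigmoid()`, which is an intermediate result. A minimal sketch (the tensor names and shapes are arbitrary, not taken from the original code):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

# A tensor wrapped in nn.Parameter by the user is a leaf of the autograd graph.
weight = nn.Parameter(torch.randn(3, 2, 1, 1))

# The result of an operation on it is an intermediate (non-leaf) tensor,
# even though it still requires grad.
coords = torch.randn(1, 2, 4, 4)
out = F.conv2d(coords, weight).sigmoid()

print(weight.is_leaf)     # True
print(out.is_leaf)        # False
print(out.requires_grad)  # True

# Passing the leaf to an optimizer is accepted...
optim.Adam([weight])

# ...while passing the non-leaf output reproduces the error above.
error_message = None
try:
    optim.Adam([out])
except ValueError as err:
    error_message = str(err)
print(error_message)  # can't optimize a non-leaf Tensor
```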

What am I doing wrong here? And how can I fix it?
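A minimal sketch of one possible restructuring, assuming the goal is to optimize the CPPN's weights (as the first paragraph suggests) rather than the rendered image tensor itself. `coord_grid` and `CPPN` are hypothetical names. The layer stack mirrors `cppn_image`, with bias-free 1×1 `nn.Conv2d` layers standing in for the `F.conv2d` calls, so the weights persist as leaf parameters of a module instead of being discarded when the function returns:

```python
import torch
import torch.nn as nn
import torch.optim as optim


def coord_grid(height, width):
    # Hypothetical helper: (x, y) coordinate image in [-sqrt(3), sqrt(3)],
    # shape (1, 2, H, W), similar in spirit to mode=1 of create_input.
    r = 3.0 ** 0.5
    ys = torch.linspace(-r, r, height).view(height, 1).expand(height, width)
    xs = torch.linspace(-r, r, width).view(1, width).expand(height, width)
    return torch.stack((xs, ys), 0).unsqueeze(0)


class CPPN(nn.Module):
    # Hypothetical module: the 1x1 conv weights are registered leaf parameters,
    # and the image is recomputed from them on every forward pass.
    def __init__(self, num_channels=16, num_layers=9):
        super().__init__()
        layers = [nn.Conv2d(2, num_channels, 1, bias=False), nn.Tanh()]
        for _ in range(num_layers):
            layers += [nn.Conv2d(num_channels, num_channels, 1, bias=False), nn.Tanh()]
        layers += [nn.Conv2d(num_channels, 3, 1, bias=False), nn.Sigmoid()]
        self.net = nn.Sequential(*layers)
        # Standard-normal init, matching the torch.randn weights in the question.
        for m in self.net:
            if isinstance(m, nn.Conv2d):
                nn.init.normal_(m.weight)

    def forward(self, coords):
        return self.net(coords)


coords = coord_grid(512, 460)
cppn = CPPN()
optimizer = optim.Adam(cppn.parameters())  # leaf weights, so no ValueError

img = cppn(coords)  # shape (1, 3, 512, 460), values in [0, 1]

# One step with a placeholder objective; a real loss would come from
# the pretrained CNN mentioned in the question.
loss = -img.mean()
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

Because the weights live in `cppn`, calling `cppn(coords)` again after `optimizer.step()` re-renders the image from the updated weights, and that image can be fed to the pretrained CNN on each iteration.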