my code:

class Bottleneck(nn.Module):

def __init__(self, nChannels, growthRate):

super(Bottleneck, self).__init__()

interChannels = 4*growthRate

self.bn1 = nn.BatchNorm2d(nChannels)

self.conv1 = nn.Conv2d(nChannels, interChannels, kernel_size=1,

bias=False)

self.bn2 = nn.BatchNorm2d(interChannels)

self.conv2 = nn.Conv2d(interChannels, growthRate, kernel_size=3,

padding=1, bias=False)

def forward(self, x):

out = self.conv1(F.relu(self.bn1(x)))

out = self.conv2(F.relu(self.bn2(out)))

out = torch.cat((x, out), 1)

return out

class SingleLayer(nn.Module):

def __init__(self, nChannels, growthRate):

super(SingleLayer, self).__init__()

self.bn1 = nn.BatchNorm2d(nChannels)

self.conv1 = nn.Conv2d(nChannels, growthRate, kernel_size=3,

padding=1, bias=False)

def forward(self, x):

out = self.conv1(F.relu(self.bn1(x)))

out = torch.cat((x, out), 1)

return out

class Transition(nn.Module):

def __init__(self, nChannels, nOutChannels):

super(Transition, self).__init__()

self.bn1 = nn.BatchNorm2d(nChannels)

self.conv1 = nn.Conv2d(nChannels, nOutChannels, kernel_size=1,

bias=False)

def forward(self, x):

out = self.conv1(F.relu(self.bn1(x)))

out = F.avg_pool2d(out, 2)

return out

class DenseNet(nn.Module):

def __init__(self, growthRate=12, depth=16, reduction=0.5, nClasses=5, bottleneck=True):

super(DenseNet, self).__init__()

nDenseBlocks = (depth-4) // 3

if bottleneck:

nDenseBlocks //= 2

nChannels = 2*growthRate

self.conv1 = nn.Conv2d(1, nChannels, kernel_size=3,stride=1, padding=1,

bias=False)

self.dense1 = self._make_dense(nChannels, growthRate, nDenseBlocks, bottleneck)

nChannels += nDenseBlocks*growthRate

nOutChannels = int(math.floor(nChannels*reduction))

self.trans1 = Transition(nChannels, nOutChannels)

nChannels = nOutChannels

self.dense2 = self._make_dense(nChannels, growthRate, nDenseBlocks, bottleneck)

nChannels += nDenseBlocks*growthRate

nOutChannels = int(math.floor(nChannels*reduction))

self.trans2 = Transition(nChannels, nOutChannels)

nChannels = nOutChannels

self.dense3 = self._make_dense(nChannels, growthRate, nDenseBlocks, bottleneck)

nChannels += nDenseBlocks*growthRate

self.bn1 = nn.BatchNorm2d(nChannels)

self.fc = nn.Linear(nChannels, nClasses)

for m in self.modules():

if isinstance(m, nn.Conv2d):

n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

m.weight.data.normal_(0, math.sqrt(2. / n))

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()

elif isinstance(m, nn.Linear):

m.bias.data.zero_()

def _make_dense(self, nChannels, growthRate, nDenseBlocks, bottleneck):

layers = []

for i in range(int(nDenseBlocks)):

if bottleneck:

layers.append(Bottleneck(nChannels, growthRate))

else:

layers.append(SingleLayer(nChannels, growthRate))

nChannels += growthRate

return nn.Sequential(*layers)

def forward(self, x):

out = self.conv1(x)

out = self.trans1(self.dense1(out))

out = self.trans2(self.dense2(out))

out = self.dense3(out)

out = torch.squeeze(F.avg_pool2d(F.relu(self.bn1(out)), 4))

out = F.log_softmax(self.fc(out))

return out

densenet=DenseNet(growthRate=12, depth=16, reduction=0.5, nClasses=5, bottleneck=True).cuda()

print(densenet)

print('Number of model parameters: {}'.format(

sum([p.data.nelement() for p in densenet.parameters()])))

EPOCH=10

optimizer = optim.SGD(densenet.parameters(), lr=0.1, momentum=0.9,weight_decay=1e- 4,nesterov=False)

criterion = torch.nn.CrossEntropyLoss()

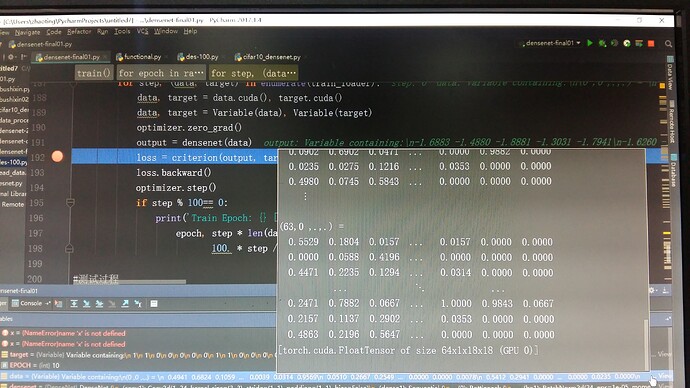

def train(densenet, train_loader, EPOCH):

densenet.train()

for epoch in range(EPOCH):

for step, (data, target) in enumerate(train_loader):

data, target = data.cuda(), target.cuda()

data, target = Variable(data), Variable(target)

optimizer.zero_grad()

output = densenet(data)

loss = criterion(output, target)

loss.backward()

optimizer.step()

if step % 100== 0:

print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(

epoch, step * len(data), len(train_loader.dataset),

100. * step / len(train_loader), loss.data[0]))

def test(densenet, test_loader):

densenet.eval()

test_loss = 0

correct = 0

for data, target in test_loader:

data, target = data.cuda(), target.cuda()

data, target = Variable(data, volatile=True), Variable(target)

output = densenet(data)

test_loss += criterion(output, target).data[0] # Variable.data

pred = output.data.max(1)[1] # get the index of the max log-probability

correct += pred.eq(target.data).cpu().sum()

test_loss = test_loss

test_loss /= len(test_loader) # loss function already averages over batch size

print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(

test_loss, correct, len(test_loader.dataset),

100. * correct / len(test_loader.dataset)))

if __name__ == "__main__":

train(densenet, train_loader, EPOCH=10)

test(densenet, test_loader)

Could you post the code for your dataset?

It seems that at some point the returned data is corrupted, i.e. it has only 1 dimension.

For your image data, you should have 4 dimensions: [batch, channels, width, height].

IMG_EXTENSIONS = [ '.jpg', '.JPG', '.jpeg', '.JPEG','.png', '.PNG', '.ppm', '.PPM', '.bmp', '.BMP',]

def is_image_file(filename):

return any(filename.endswith(extension) for extension in IMG_EXTENSIONS)

def find_classes(dir):

classes = [d for d in os.listdir(dir) if os.path.isdir(os.path.join(dir, d))]

classes.sort()

class_to_idx = {classes[i]: i for i in range(len(classes))}

return classes, class_to_idx

def make_dataset(dir, class_to_idx):

images = []

dir = os.path.expanduser(dir)

for target in sorted(os.listdir(dir)):

d = os.path.join(dir, target)

if not os.path.isdir(d):

continue

for root, _, fnames in sorted(os.walk(d)):

for fname in sorted(fnames):

if is_image_file(fname):

path = os.path.join(root, fname) # 图片的路径

item = (path, class_to_idx[target]) # (图片的路径,图片类别)

images.append(item)

return images

def default_loader(path):

return Image.open(path).convert('L')

class ImageFolder(data.Dataset):

def __init__(self, root, train=True,transform=None, target_transform=None,loader=default_loader):

classes, class_to_idx = find_classes(root)

imgs = make_dataset(root, class_to_idx)

if len(imgs) == 0:

raise (RuntimeError("Found 0 images in subfolders of: " + root + "/n"

"Supported image extensions are: " + ",".join(IMG_EXTENSIONS)))

self.root = root

self.imgs = imgs

self.classes = classes

self.class_to_idx = class_to_idx

self.transform = transform

self.target_transform = target_transform

self.loader = loader

self.train = train

def __getitem__(self, index):

path, target = self.imgs[index]

img = self.loader(path)

if self.transform is not None:

img = self.transform(img)

if self.target_transform is not None:

target = self.target_transform(target)

return img, target

def __len__(self):

return len(self.imgs)

mytransform = transforms.Compose([transforms.ToTensor()])

train_data =ImageFolder(root = "/home/zw/ztfd_data/final01/train",train=True,transform = mytransform)

print(len(train_data))

test_data =ImageFolder(root = "/home/zw/ztfd_data/final01/test",train=False,transform = mytransform)

print(len(test_data))

train_loader=torch.utils.data.DataLoader(

ImageFolder(root = "/home/zw/ztfd_data/final01/train",train=True,transform=mytransform),

batch_size=64,shuffle=True)

test_loader=torch.utils.data.DataLoader(

ImageFolder(root = "/home/zw/ztfd_data/final01/test",train=False,transform=mytransform),

batch_size=64,shuffle=True)

print(len(train_loader))

print(len(test_loader))